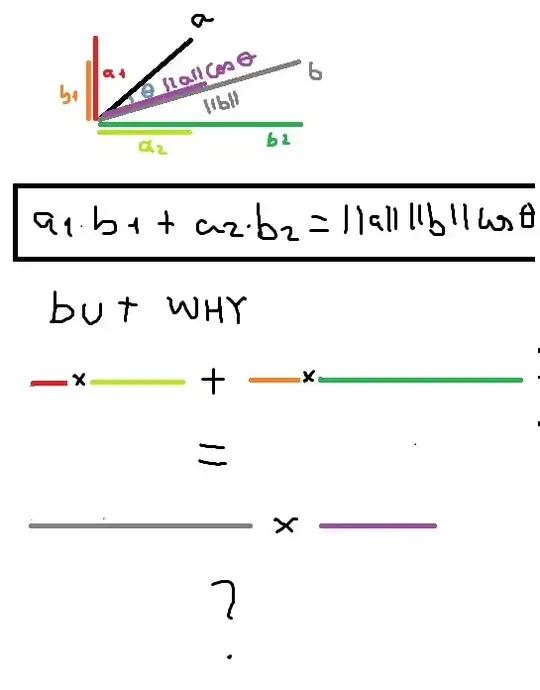

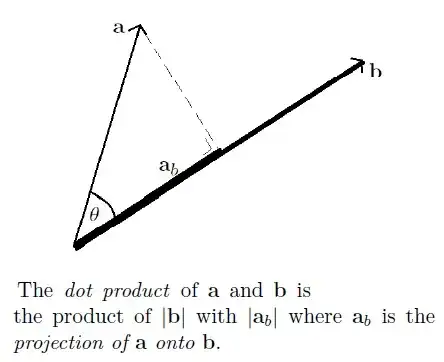

Before we begin, I would like to slightly reframe the question. If we rotate a vector, its length remains the same, and if we rotate two vectors, the angle between them stays the same, so from your formula, it is easy to show that $a\cdot b = R_{\theta}(a)\cdot R_{\theta}(b)$, where $R_{\theta}(v)$ means rotating $v$ by an angle of $\theta$. I assert that this is the conceptual geometric property that you care most about. Further, by combining this property with the fact that you can pull scalars out of the dot product, you can reduce your formula to the case where $a=(1,0)$.

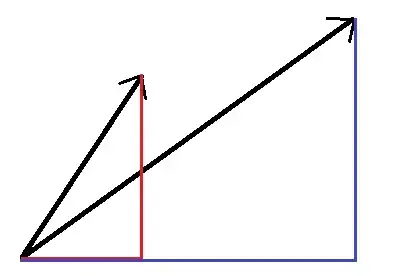

So how do we show $a\cdot b = R_{\theta}(a)\cdot R_{\theta}(b)$? The first step is to observe that rotation is a linear transformation. The columns of the matrix of a linear transformation are just the images of the basis vectors, and it is easy to rotate the vectors $\pmatrix{1 \\ 0}$ and $\pmatrix{0 \\ 1}$, so we can compute $$R_{\theta}=\pmatrix{\cos \theta & -\sin \theta \\ \sin \theta & \cos \theta}.$$

Further, since $\cos (-\theta) = \cos \theta$ and $\sin (-\theta) = -\sin \theta$, we have that $R_{-\theta}=R_{\theta}^T$, the transpose. We will also need to know how matrix multiplication interacts with transposition, namely $(AB)^T=B^TA^T$. Finally, since rotating by $-\theta$ undoes rotation by $\theta$, if $M$ is the matrix for rotation by $\theta$, we have $M^TM=I$, where $I$ is the identity matrix.
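
As a quick numerical sanity check of these two facts, here is a minimal sketch in plain Python. The helper names `rotation_matrix`, `transpose`, and `matmul`, and the sample angle, are my own choices for illustration and are not part of the argument.

```python
import math

def rotation_matrix(theta):
    """Matrix for counterclockwise rotation by theta (radians)."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s], [s, c]]

def transpose(m):
    """Swap rows and columns of a matrix stored as a list of rows."""
    return [list(row) for row in zip(*m)]

def matmul(a, b):
    """Plain matrix product of two matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]

def close(a, b, tol=1e-12):
    """Entrywise comparison up to floating-point rounding."""
    return all(math.isclose(x, y, abs_tol=tol)
               for ra, rb in zip(a, b) for x, y in zip(ra, rb))

theta = 1.2  # arbitrary sample angle (an assumption for the demo)
M = rotation_matrix(theta)

# R_{-theta} agrees with the transpose of R_theta.
print(close(rotation_matrix(-theta), transpose(M)))

# M^T M is the identity matrix, up to rounding.
print(close(matmul(transpose(M), M), [[1.0, 0.0], [0.0, 1.0]]))
```

Both comparisons use a small tolerance because the entries are floating-point numbers, so the check is evidence for the identities rather than a proof.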

Now, we are almost done. If $v$ and $w$ are vectors, written as column vectors, we can write their dot product in terms of matrix multiplication: $v\cdot w = v^Tw$. Therefore, with $M$ as the matrix for rotation by $\theta$, we have

$$R_{\theta}(v)\cdot R_{\theta}(w)=(Mv)\cdot (Mw)=(Mv)^T(Mw)=v^T(M^TM)w=v^TIw=v^Tw=v\cdot w.$$
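
If you would like to see the identity hold on concrete numbers, the following is a small sketch in plain Python. The functions `rotate` and `dot`, the two sample vectors, and the angle are hypothetical choices made only for this illustration.

```python
import math

def rotate(v, theta):
    """Rotate the 2D vector v counterclockwise by theta (radians)."""
    c, s = math.cos(theta), math.sin(theta)
    x, y = v
    return (c * x - s * y, s * x + c * y)

def dot(v, w):
    """Componentwise dot product."""
    return sum(vi * wi for vi, wi in zip(v, w))

v, w = (3.0, 1.0), (-2.0, 5.0)  # sample vectors (assumed for the demo)
theta = 0.7                     # sample angle in radians

before = dot(v, w)
after = dot(rotate(v, theta), rotate(w, theta))

# The two values should agree up to floating-point rounding.
print(before, after, math.isclose(before, after))
```

Trying other vectors and angles should give the same agreement, which is exactly what the matrix computation above guarantees.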

Of course, this proof raises a few questions, like "Why should the transpose of a rotation matrix be the inverse?" or even more fundamental, "What does a transpose mean geometrically?" but hopefully the actual computations involved were transparent.

After writing my response, I was thinking about how to generalize the proof to higher dimensions. The key fact of the proof is that rotation matrices are orthogonal, which is obvious from the form of rotation matrices in two dimensions, but which is less obvious in three dimensions. We can prove it in three dimensions by showing that every rotation is a product of rotations about the coordinate axes, but this is less obvious for higher dimensions. However, there is another approach which does generalize more easily.

Polarization identity: $4(x\cdot y)=\left\|x+y \right\|^2-\left\|x-y \right\|^2$.

Proof. Using bilinearity, expand out $(x+y)\cdot (x+y)$ and $(x-y)\cdot (x-y)$.
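
Written out, the expansion looks like this (a sketch that also uses the symmetry $x\cdot y=y\cdot x$ to combine the cross terms):

$$\begin{aligned}\left\|x+y\right\|^2&=(x+y)\cdot(x+y)=x\cdot x+2(x\cdot y)+y\cdot y,\\ \left\|x-y\right\|^2&=(x-y)\cdot(x-y)=x\cdot x-2(x\cdot y)+y\cdot y.\end{aligned}$$

Subtracting the second line from the first leaves $4(x\cdot y)$.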

Therefore, the dot product is completely determined by the lengths of vectors. This gives us the following result.

Corollary: A linear map that preserves lengths also preserves dot products.
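
To spell out why, here is a short derivation, where $T$ is my name for a linear map satisfying $\left\|Tv\right\|=\left\|v\right\|$ for every $v$ (the symbol is not used elsewhere in this answer):

$$4(Tx\cdot Ty)=\left\|Tx+Ty\right\|^2-\left\|Tx-Ty\right\|^2=\left\|T(x+y)\right\|^2-\left\|T(x-y)\right\|^2=\left\|x+y\right\|^2-\left\|x-y\right\|^2=4(x\cdot y).$$

The first and last equalities are the polarization identity, the second uses linearity of $T$, and the third uses the fact that $T$ preserves lengths.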

Corollary: The dot product is invariant under rotations in all dimensions.

That is the result that we wanted, arrived at simply, with no matrix multiplication, and no explicit calculations, just the observations that (1) dot products are bilinear, (2) length is defined in terms of dot products, (3) rotations are linear, and (4) rotations preserve length.

While unnecessary for our present purposes, this also fixes the hole in generalizing our original proof by giving us the following result.

Corollary: All rotation matrices are orthogonal for any reasonable definition of higher-dimensional rotation.
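
One way to see this, sketched with $e_i$ denoting the standard basis vectors and $\delta_{ij}$ equal to $1$ when $i=j$ and $0$ otherwise (both are notation I am introducing here): if $M$ preserves dot products, then for all $i$ and $j$,

$$(M^TM)_{ij}=e_i^T(M^TM)e_j=(Me_i)^T(Me_j)=(Me_i)\cdot(Me_j)=e_i\cdot e_j=\delta_{ij},$$

so every entry of $M^TM$ matches the corresponding entry of $I$, that is, $M^TM=I$.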