I am reading the Wikipedia article about Support Vector Machines and I don't understand how they compute the distance between two hyperplanes.

In the article,

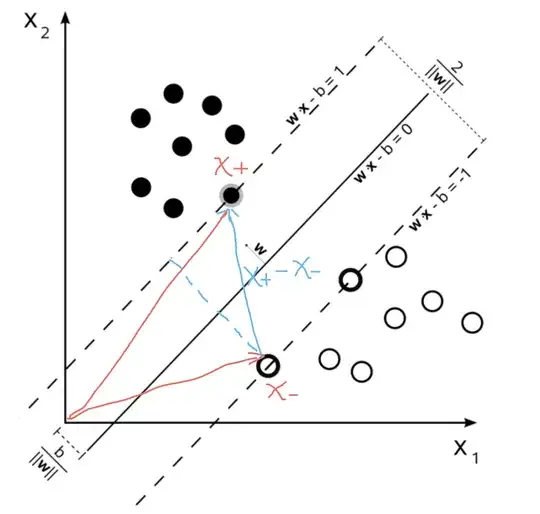

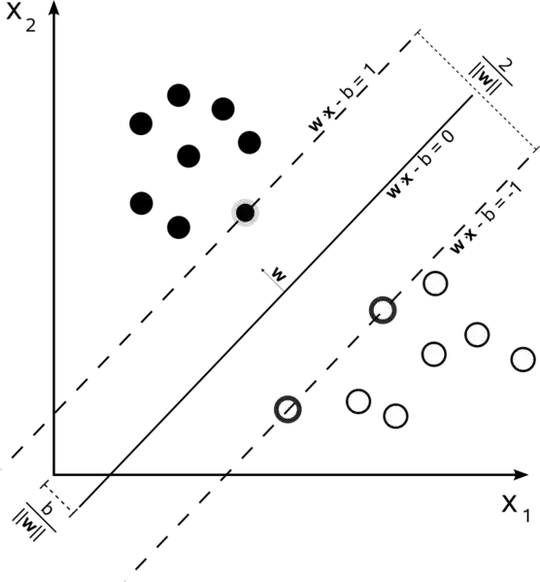

By using geometry, we find the distance between these two hyperplanes is $\frac{2}{\|\mathbf{w}\|}$

I don't understand how they find that result.

What I tried

I tried setting up an example in two dimensions with a hyperplane having the equation $y = -2x+5$ and separating some points $A(2,0)$, $B(3,0)$ and $C(0,4)$, $D(0,6)$.

If I take a vector $\mathbf{w} = (-2,-1)$ normal to that hyperplane and compute the margin with $\frac{2}{\|\mathbf{w}\|}$, I get $\frac{2}{\sqrt{5}}$, whereas in my example the margin is equal to 2 (the distance between $C$ and $D$).
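
To make the mismatch concrete, here is a minimal Python sketch that reproduces the arithmetic above. The variable names are my own, and it only restates the numbers from my example (it assumes Python 3.8+ for `math.dist`); it does not explain where the formula comes from.

```python
import math

# Normal vector to the line y = -2x + 5, as chosen in my example
w = (-2.0, -1.0)

# Euclidean norm of w
w_norm = math.hypot(*w)

# Margin predicted by the formula 2 / ||w||
formula_margin = 2.0 / w_norm

# Distance between C(0, 4) and D(0, 6), which I took to be the margin
C = (0.0, 4.0)
D = (0.0, 6.0)
cd_distance = math.dist(C, D)

print(f"||w||     = {w_norm:.4f}")
print(f"2 / ||w|| = {formula_margin:.4f}")
print(f"|CD|      = {cd_distance:.4f}")
```

The two quantities clearly differ, so either my choice of $\mathbf{w}$ or my notion of the margin must not match what the article means.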

How did they come up with $\frac{2}{\|\mathbf{w}\|}$?