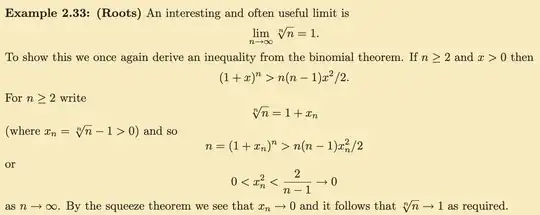

Thomson et al. prove that $\lim_{n\rightarrow \infty} \sqrt[n]{n}=1$ in this book (page 73). Their proof uses an inequality that relies on the binomial theorem.

I know an alternative proof (from elsewhere), which goes as follows.

Proof.

It starts from the known limit \begin{align} \lim_{n\rightarrow \infty} \frac{ \log n}{n} = 0 \end{align}

Using this, I can then prove: \begin{align} \lim_{n\rightarrow \infty} \sqrt[n]{n} &= \lim_{n\rightarrow \infty} \exp{\frac{ \log n}{n}} \newline & = \exp{0} \newline & = 1 \end{align}
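As a numerical sanity check (not a proof), the two forms of the sequence can be compared term by term. This is a minimal sketch; the helper names `nth_root` and `exp_log_form` are my own, and floating-point arithmetic only suggests the behaviour of the limit, it does not establish it.

```python
import math


def nth_root(n: int) -> float:
    """Compute n^(1/n) directly."""
    return n ** (1.0 / n)


def exp_log_form(n: int) -> float:
    """Compute exp(log(n) / n), the rewritten form used in the proof."""
    return math.exp(math.log(n) / n)


# Print log(n)/n alongside both forms of the n-th root for growing n.
for n in (1, 10, 100, 10_000, 1_000_000):
    print(n, math.log(n) / n, nth_root(n), exp_log_form(n))
```

For each fixed $n$ the two columns should agree up to rounding error, while $\frac{\log n}{n}$ shrinks toward $0$ and both forms drift toward $1$.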

On the one hand, it seems like a valid proof to me. On the other hand, I know I should be careful with infinite sequences. The step I'm most unsure of is: \begin{align} \lim_{n\rightarrow \infty} \sqrt[n]{n} = \lim_{n\rightarrow \infty} \exp{\frac{ \log n}{n}} \end{align}

I know this identity holds for each fixed, finite $n$, but I'm not sure I can still use it inside the limit as $n\rightarrow \infty$.

Question:

If this step is valid here, are there cases where the same reasoning would fail? Specifically, given any sequence $x_n$, can I always assume: \begin{align} \lim_{n\rightarrow \infty} x_n = \lim_{n\rightarrow \infty} \exp(\log x_n) \end{align} Or are there sequences that invalidate that identity?

(Edited to expand the last question.) Given any sequence $x_n$, can I always assume: \begin{align} \lim_{n\rightarrow \infty} x_n &= \exp(\log \lim_{n\rightarrow \infty} x_n) \newline &= \exp(\lim_{n\rightarrow \infty} \log x_n) \newline &= \lim_{n\rightarrow \infty} \exp( \log x_n) \end{align} Or are there sequences that invalidate any of the above identities?
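One way to probe these identities is to try a test sequence whose limit sits at the edge of the domain of $\log$. The sketch below assumes $x_n = 1/n$, which is my own choice of test case and not taken from the book; the helper names are hypothetical. Here $\exp(\log x_n) = x_n$ for every fixed $n$, but $\lim_{n\rightarrow \infty} x_n = 0$ and $\log 0$ is not defined as a real number, so the first line of the chain cannot even be written down.

```python
import math


def x(n: int) -> float:
    """Test sequence x_n = 1/n (an assumed example, positive for every n)."""
    return 1.0 / n


def exp_log_x(n: int) -> float:
    """exp(log(x_n)), which is well defined because x_n > 0."""
    return math.exp(math.log(x(n)))


def log_is_defined(value: float) -> bool:
    """Report whether math.log accepts the value (it rejects 0.0)."""
    try:
        math.log(value)
        return True
    except ValueError:
        return False


# Term by term, exp(log(x_n)) reproduces x_n up to rounding.
for n in (1, 10, 1000, 10**6):
    print(n, x(n), exp_log_x(n))

# The limit of x_n is 0, and the logarithm of the limit is not defined.
print(log_is_defined(0.0))
```

This only shows that some care is needed about where the limit lands; it says nothing about sequences with a strictly positive limit.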

(Edited to repurpose this question). Please also feel free to add different proofs of $\lim_{n\rightarrow \infty} \sqrt[n]{n}=1$.