The simplest finite element shape in two dimensions is a triangle.

In a finite element context, any geometrical shape is endowed with an interpolation,

which is linear for triangles (most of the time), as has been explained in

this answer:

$$

T(x,y) = A.x + B.y + C

$$

Here $A$ and $B$ can be expressed in terms of the coordinates and function values

at the vertices (nodal points) of the triangle:

$$

\begin{cases}

A = [ (y_3 - y_1).(T_2 - T_1) - (y_2 - y_1).(T_3 - T_1) ] / \Delta \\

B = [ (x_2 - x_1).(T_3 - T_1) - (x_3 - x_1).(T_2 - T_1) ] / \Delta

\end{cases} \\ \Delta = (x_2 - x_1).(y_3 - y_1) - (x_3 - x_1).(y_2 - y_1)

$$
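
The formulas above translate directly into a few lines of code. The following is a minimal sketch, not taken from the linked answer: the function name `triangle_plane` is made up for illustration, and the constant $C$ (not given above) is assumed to follow from the first nodal equation $T_1 = A.x_1 + B.y_1 + C$.

```python
def triangle_plane(p1, p2, p3, t1, t2, t3):
    """Return (A, B, C) with T(x, y) = A*x + B*y + C matching the nodal values.

    p1, p2, p3 are (x, y) vertex coordinates; t1, t2, t3 the function values there.
    """
    (x1, y1), (x2, y2), (x3, y3) = p1, p2, p3
    # Delta is twice the signed area of the triangle.
    delta = (x2 - x1) * (y3 - y1) - (x3 - x1) * (y2 - y1)
    if delta == 0:
        raise ValueError("degenerate triangle: the vertices are collinear")
    a = ((y3 - y1) * (t2 - t1) - (y2 - y1) * (t3 - t1)) / delta
    b = ((x2 - x1) * (t3 - t1) - (x3 - x1) * (t2 - t1)) / delta
    # Assumption: C follows from the first nodal equation T1 = A*x1 + B*y1 + C.
    c = t1 - a * x1 - b * y1
    return a, b, c
```

For instance, sampling $T = 2x + 3y + 1$ at the vertices $(0,0)$, $(1,0)$, $(0,1)$ gives the nodal values $1, 3, 4$, and `triangle_plane((0, 0), (1, 0), (0, 1), 1, 3, 4)` recovers the coefficients $2, 3, 1$. The only way this can fail is $\Delta = 0$, i.e. a triangle with zero area.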

Consider the simplest finite element shape in two dimensions except one:

the quadrilateral. Function behavior inside a quadrilateral is approximated

by a bilinear interpolation between the function values at the vertices

or nodal points (most of the time; Wikipedia is rather terse about it).

Let $T$ be such a function, and $x,y$ coordinates. Then try:

$$

T = A + B.x + C.y + D.x.y

$$

Giving:

$$

\begin{cases}

T_1 = A + B.x_1 + C.y_1 + D.x_1.y_1 \\

T_2 = A + B.x_2 + C.y_2 + D.x_2.y_2 \\

T_3 = A + B.x_3 + C.y_3 + D.x_3.y_3 \\

T_4 = A + B.x_4 + C.y_4 + D.x_4.y_4

\end{cases} \quad \Longleftrightarrow \quad

\begin{bmatrix} T_1 \\ T_2 \\ T_3 \\ T_4 \end{bmatrix} =

\begin{bmatrix} 1 & x_1 & y_1 & x_1 y_1 \\ 1 & x_2 & y_2 & x_2 y_2 \\

1 & x_3 & y_3 & x_3 y_3 \\ 1 & x_4 & y_4 & x_4 y_4 \end{bmatrix}

\begin{bmatrix} A \\ B \\ C \\ D \end{bmatrix} \\ \Longleftrightarrow \quad

\begin{bmatrix} A \\ B \\ C \\ D \end{bmatrix} =

\begin{bmatrix} 1 & x_1 & y_1 & x_1 y_1 \\ 1 & x_2 & y_2 & x_2 y_2 \\

1 & x_3 & y_3 & x_3 y_3 \\ 1 & x_4 & y_4 & x_4 y_4 \end{bmatrix}^{-1}

\begin{bmatrix} T_1 \\ T_2 \\ T_3 \\ T_4 \end{bmatrix}

$$

Provided that we have a non-singular matrix in the middle.
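
To make this "try" concrete, here is a minimal sketch that builds the $4 \times 4$ matrix with rows $(1, x_i, y_i, x_i y_i)$ and solves for $A,B,C,D$. The helper names `solve_linear` and `bilinear_coefficients` are hypothetical, and exact Gauss-Jordan elimination with Python's `fractions` module is just one convenient choice, assumed here so that a singular matrix is detected exactly rather than up to rounding.

```python
from fractions import Fraction


def solve_linear(matrix, rhs):
    """Solve matrix * x = rhs by Gauss-Jordan elimination in exact arithmetic."""
    n = len(rhs)
    # Augmented matrix [matrix | rhs], converted to exact fractions.
    aug = [[Fraction(v) for v in row] + [Fraction(r)]
           for row, r in zip(matrix, rhs)]
    for col in range(n):
        # Find a row at or below the diagonal with a non-zero entry in this column.
        pivot = next((r for r in range(col, n) if aug[r][col] != 0), None)
        if pivot is None:
            raise ValueError("singular matrix")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        pivot_value = aug[col][col]
        aug[col] = [v / pivot_value for v in aug[col]]
        # Eliminate this column from every other row.
        for r in range(n):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col]
                aug[r] = [vr - factor * vc for vr, vc in zip(aug[r], aug[col])]
    return [row[n] for row in aug]


def bilinear_coefficients(points, values):
    """Return [A, B, C, D] with T = A + B*x + C*y + D*x*y at the four points."""
    rows = [[1, x, y, x * y] for x, y in points]
    return solve_linear(rows, values)
```

On the unit square with vertices $(0,0),(1,0),(1,1),(0,1)$ and nodal values $1, 3, 10, 4$ this returns coefficients equal to $1, 2, 3, 4$, i.e. $T = 1 + 2x + 3y + 4xy$. A `ValueError` signals exactly the situation where the matrix in the middle has no inverse.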

But now we have a little problem.

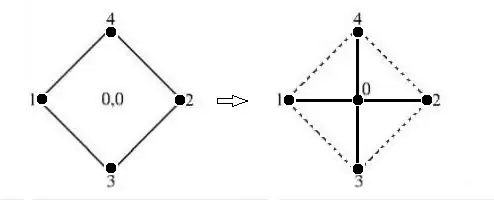

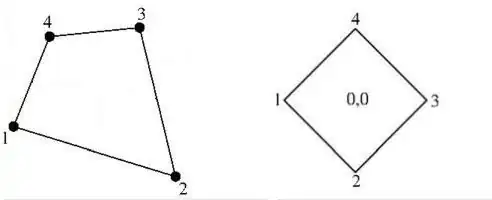

Consider the quadrilateral as depicted in the above picture on the right.

The vertex-coordinates of this quadrilateral are defined by the second and the

third column of the matrix below. This matrix is formed by specifying $T$

vertically for the nodal points and horizontally for the basis functions

$ 1,x,y,xy $ :

$$

\begin{bmatrix} T_1 \\ T_2 \\ T_3 \\ T_4 \end{bmatrix} =

\begin{bmatrix} 1 & -\frac{1}{2} & 0 & 0 \\

1 & 0 & -\frac{1}{2} & 0 \\

1 & +\frac{1}{2} & 0 & 0 \\

1 & 0 & +\frac{1}{2} & 0 \end{bmatrix}

\begin{bmatrix} A \\ B \\ C \\ D \end{bmatrix}

$$

The last column of the matrix is zero. Hence it is singular, meaning that

$A,B,C$ and $D$ cannot be found in this manner. Though with an unstructured grid

there may seem to be not a great chance that a quadrilateral is exactly positioned

like this, experience reveals that it cannot be excluded that Murphy comes by.

That alone is enough reason to declare the method used for triangles unsuitable for quadrilaterals.
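
The singularity is easy to confirm numerically. The sketch below is an illustration under assumptions: the names `determinant` and `bilinear_matrix` are hypothetical, and exact fractions are used so that "zero" really means zero. It compares the diamond-shaped quadrilateral with an axis-aligned unit square.

```python
from fractions import Fraction


def determinant(matrix):
    """Determinant by Gaussian elimination in exact rational arithmetic."""
    m = [[Fraction(v) for v in row] for row in matrix]
    n = len(m)
    det = Fraction(1)
    for col in range(n):
        # Find a usable pivot; if none exists the determinant is zero.
        pivot = next((r for r in range(col, n) if m[r][col] != 0), None)
        if pivot is None:
            return Fraction(0)
        if pivot != col:
            m[col], m[pivot] = m[pivot], m[col]
            det = -det  # a row swap flips the sign
        det *= m[col][col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            m[r] = [vr - factor * vc for vr, vc in zip(m[r], m[col])]
    return det


def bilinear_matrix(points):
    """Rows (1, x, y, x*y), one per nodal point."""
    return [[1, x, y, x * y] for x, y in points]


half = Fraction(1, 2)
diamond = [(-half, 0), (0, -half), (half, 0), (0, half)]  # vertices on the axes
square = [(0, 0), (1, 0), (1, 1), (0, 1)]                 # axis-aligned unit square

det_diamond = determinant(bilinear_matrix(diamond))
det_square = determinant(bilinear_matrix(square))
```

Here `det_diamond` is exactly zero because every vertex has $xy = 0$, while `det_square` is non-zero. In floating point, a quadrilateral that is only nearly in this position would give a tiny but non-zero determinant, which is arguably worse: the solve succeeds but the coefficients are badly conditioned.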

Two questions:

-

Why in the first place would a bilinear interpolation be associated

with a quadrilateral?

Why not some other finite element shape? And why not some other interpolation? -

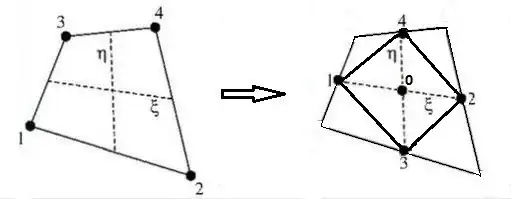

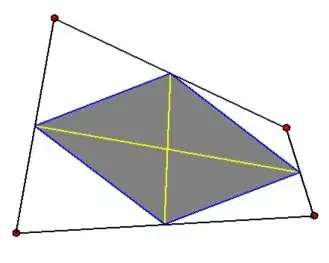

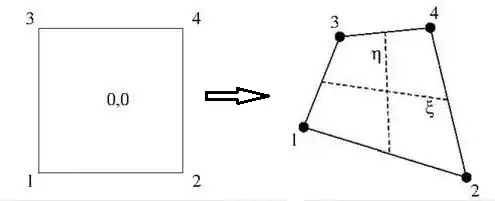

How can a bilinear interpolation be defined for an arbitrary quadrilateral (assumed convex),

i.e. without running into singularities?

EDIT. The comment by Rahul sheds some light. Let the finite element shape be "modified" by an affine transformation (with $a,b,c,d,p,q$ arbitrary real constants) and work out the term of interest, $x'y'$:

$$
\begin{cases} x' = ax+by+p \\ y' = cx+dy+q \end{cases} \quad \Longrightarrow \quad
x'y'=acx^2+bdy^2 + (ad+bc)xy+(cp+aq)x+(dp+bq)y+pq
$$

So the interpolation remains bilinear only when the following conditions are fulfilled:

$$
ac=0 \; \wedge \; bd=0 \; \wedge \; ad+bc\ne 0
$$

$$
\Longleftrightarrow \quad
(a\ne 0 \; \wedge \; d\ne 0 \; \wedge \; b=0 \; \wedge \; c=0) \quad \vee \quad
(a=0 \; \wedge \; d=0 \; \wedge \; b\ne 0 \; \wedge \; c\ne 0)
$$

$$
\Longleftrightarrow \quad
\begin{cases}x'=ax+p\\y'=dy+q\end{cases} \quad \vee \quad \begin{cases}x'=by+p\\y'=cx+q\end{cases}
$$

This means that a (parent) quadrilateral element, once it has been chosen, can only be translated, scaled (in $x$- and/or $y$-direction), mirrored in $y=\pm x$, or rotated over $90^\circ$. Did I forget something?
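
As a quick check of these conditions, assume a pure rotation over $45^\circ$, i.e. $a=d=c=1/\sqrt{2}$, $b=-1/\sqrt{2}$ and $p=q=0$ (values chosen here only for illustration):

$$
x' = \frac{x-y}{\sqrt{2}}, \quad y' = \frac{x+y}{\sqrt{2}} \quad \Longrightarrow \quad
x'y' = \frac{x^2-y^2}{2}
$$

Here $ac=\tfrac{1}{2}\ne 0$, $bd=-\tfrac{1}{2}\ne 0$ and $ad+bc=0$, so the $xy$ term disappears altogether and squares take its place. At the vertices $(\pm s,\pm s)$ of an axis-aligned square we have $x^2-y^2=0$, hence $x'y'$ vanishes at all four rotated vertices. That is consistent with the zero last column found for the diamond-shaped quadrilateral, which is such a square rotated over $45^\circ$.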

Update.

Why a quadrilateral with bilinear interpolation?

Little else is possible with polynomial terms like $\;1,\xi,\eta,\xi\eta\,$, if four nodal points are needed (one degree of freedom each) for obtaining four equations with four unknowns. Some issues still remain, such as the requirements that the quadrilateral be convex and not self-intersecting. The latter has been covered in the answer by Nominal Animal. Convexity may be stuff for a separate question.

Other issues covered in the answer by Nominal Animal are the following.

- Perhaps the simplest heuristic is to take the direct product of the one-dimensional case: the line segment as well as the linear interpolation. With the notations by Rahul and Nominal Animal that is: $[0,1]\times[0,1]$ and $\{1,u\}\times\{1,v\}$. In the end, we have a square as the standard parent bilinear quadrilateral.

- For a non-degenerate parallelogram the bilinear interpolation is reduced to a linear one, which makes it simple to express the local coordinates $(u,v)$ in terms of the global coordinates $(x,y)$.
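
Both points can be sketched in code. What follows is an illustration under assumptions, not a transcription of Nominal Animal's answer: the function names are hypothetical, and the vertices are assumed to be numbered counter-clockwise so that they correspond to the parent corners $(0,0),(1,0),(1,1),(0,1)$. The four products of $\{1-u,u\}$ and $\{1-v,v\}$ span the same space as $\{1,u\}\times\{1,v\}$.

```python
def shape_functions(u, v):
    """Bilinear weights on the parent square [0,1] x [0,1].

    Assumed vertex order: (0,0), (1,0), (1,1), (0,1).
    """
    return [(1 - u) * (1 - v), u * (1 - v), u * v, (1 - u) * v]


def interpolate(nodal, u, v):
    """Blend four nodal values using the bilinear weights at (u, v)."""
    return sum(w * q for w, q in zip(shape_functions(u, v), nodal))


def to_global(points, u, v):
    """Map local (u, v) to global (x, y) by interpolating the vertex coordinates."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return interpolate(xs, u, v), interpolate(ys, u, v)


def parallelogram_local(points, x, y):
    """Invert the map for a parallelogram, where it is affine.

    Uses only vertices 1, 2 and 4; vertex 3 is implied by p3 = p2 + p4 - p1.
    """
    (x1, y1), (x2, y2), _, (x4, y4) = points
    ex, ey = x2 - x1, y2 - y1  # edge in the u-direction
    fx, fy = x4 - x1, y4 - y1  # edge in the v-direction
    det = ex * fy - fx * ey
    if det == 0:
        raise ValueError("degenerate parallelogram")
    dx, dy = x - x1, y - y1
    # Cramer's rule for dx = u*ex + v*fx, dy = u*ey + v*fy.
    return (dx * fy - fx * dy) / det, (ex * dy - dx * ey) / det


diamond = [(-0.5, 0.0), (0.0, -0.5), (0.5, 0.0), (0.0, 0.5)]
center = to_global(diamond, 0.5, 0.5)
local = parallelogram_local(diamond, 0.0, 0.0)
```

Note that no $4\times 4$ matrix is inverted here: the interpolation is defined in $(u,v)$, so the diamond that was singular in global coordinates poses no problem. For a general (non-parallelogram) quadrilateral the inverse map from $(x,y)$ to $(u,v)$ is no longer affine and is not covered by this sketch.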

LATE EDIT. Continuing story at: