An easy way is proposed by Han de Bruijn, which basically tries to find the minimum location per dimension (correct me if I am wrong). However, in that case you are not using all information you have, so it is definitely not optimal in that sense. On the other hand, I don't think there is an easy way if you want to use all data points. Below, I present the easiest way I could come up with to compute the minimum (apologies, but I find it way easier to think of a minimum instead of a maximum).

Let $y \in \mathcal{R}$ be the output of the function for which you want to find a parabolic fit and let $\bar{x} \in \mathcal{R}^d$ be the location of the optimum, i.e. where the gradient is zero. The dimension is denoted by $d$. In that case, we can approximate $y$ using our parabolic function:

$$

y = (x - \bar{x})^T A (x - \bar{x}) + c

$$

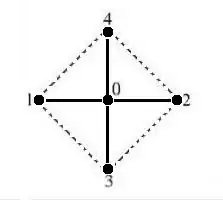

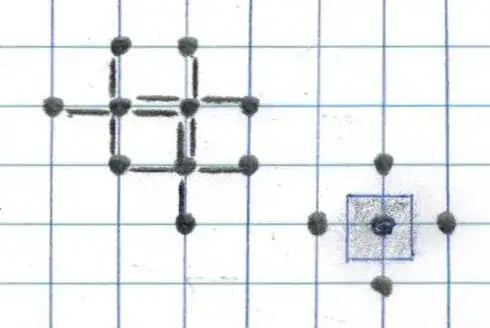

Here, $x\in\mathcal{R}^d$ denotes the input vector, $A\in\mathcal{R}^{d\times d}$ is a symmetric positive definite matrix (in case of maximization, A has to be negative definite) and $c$ is the value of $y$ at the optimum (i.e., if $x=\bar{x}$). We have $(d+1)(d+2)/2$ unknowns.

You can see that this is not straightforward to solve, as we end up with a cubic term $\bar{x}^TA\bar{x}$. For example, with least squares you can still solve this, given your datapoints $(x_1, y_1), ..., (x_N, y_N)$, with $N=3^d$. To this end, let this be the cost function you want to minimize:

$$

J(\bar{x}, A, c)=\sum_{i=1}^N \left( (x_i-\bar{x})^TA(x_i-\bar{x})+c-y_i\right)^2

$$

Through minimization of $J(\bar{x}, A, c)$, you can find the location of the optimum $\bar{x}$ and the minimum value of $y$, i.e. $c$.

I tried to write a simple function in python, which takes as input the data and the dimension $d$. I tried it for $d=4$ and it comes instantly with a solution, so I think it should also handle $d=6$ well enough. I hope it helps you.

import numpy as np

from scipy import optimize

class ParabolicInterpolation:

def __init__(self, ndim):

"""Initialize parabolic interpolation in n dimensions"""

self.n = ndim

self.datax = np.array([])

self.datay = np.array([])

self.r, self.c = np.triu_indices(self.n) # Will be used to construct the A-matrix

self.ahat = np.zeros((self.n, self.n))

def get_x_a_c(self, x):

"""Return the offset x, the A matrix and the offset c"""

xhat = x[:self.n]

self.ahat[self.r, self.c] = x[self.n:-1]

self.ahat[self.c, self.r] = x[self.n:-1]

chat = x[-1]

return xhat, self.ahat, chat

def f(self, x):

"""Return the error between model and data"""

xhat, ahat, chat = self.get_x_a_c(x)

xoff = self.datax - xhat

yhat = np.einsum('in,in->i', np.einsum('in,nj->ij', xoff, ahat), xoff) + chat # Estimated output

return yhat - self.datay

def fit(self, datax, datay):

"""Compute the unknowns using Least Squares"""

self.datax = datax

self.datay = datay

init = np.zeros(((self.n+1) * (self.n+2)) // 2)

i = -1 # Make sure we start with identity matrix

for j in range(self.n):

i += self.n + 1 - j

init[i] = 1

xopt = optimize.leastsq(self.f, init)[0]

xhat, ahat, chat = self.get_x_a_c(xopt)

print("Minimum at: [", end="")

for i in range(self.n):

print("{:.2e}{:s}".format(xhat[i], ', ' if i+1 < self.n else ''), end="")

print("]")

print("Minimum value: {:.3e}".format(chat))

return xopt

# Small demo

A = np.array([[1, 0.5, 0.25, 0.1], [0.5, 2, 0.25, -0.2], [0.25, 0.25, 1.5, -0.1], [0.1, -0.2, -0.1, 1]])

c = 0.5 # This is the minimum value we want to find

xstar = np.array([0.5, 0.25, -0.2, 0.6]) # This is the location of the minimum value

# Create some fake data

n = len(xstar)

X = np.zeros((3 ** n, n)) # Create the lattice square points

X[0, :] = -1

for ii in range(1, 3 ** n):

X[ii] = X[ii-1]

X[ii, 0] += 1

jj = 0

while X[ii, jj] == 2:

X[ii, jj] = -1

jj += 1

X[ii, jj] += 1

y = np.zeros(X.shape[0])

for ii in range(X.shape[0]):

y[ii] = np.dot(np.dot(X[ii]-xstar, A), X[ii]-xstar) + c

y += np.random.randn(X.shape[0])*0.05 # Add some noise, otherwise it is too simple ;)

# Perform the parabolic interpolation

pi = ParabolicInterpolation(n)

pi.fit(X, y)