I know that this question is very old but wanted to contribute nonetheless as I found it very hard to find a proof online that was satisfying enough, yet easy enough to follow. Proofs by induction are not constructive and do not tell you where the expression comes from in the first place.

While user940's answer is helpful, some parts are not very obvious, especially the first line and the later lines involving counting.

I have come up with a solution that is easier to follow and intuit, and I hope it helps others.

Let $\mu := \mathop{\mathbb{E}}\left(X_{i}\right)$ denote the population mean and $\mu_{k} := \mathop{\mathbb{E}}\left[(X_{i} - \mu)^{k}\right]$ denote the $k$th central population moment. With this notation, $\mu_{2}$ is the population variance (usually written $\sigma^{2}$), so $\mu_{2}^{2}$ is its square (that is, $\sigma^{4}$).

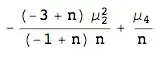

Then, if the $X_{i}$ are i.i.d. and the kurtosis exists (that is, $\mu_{4}$ is finite),

$$\begin{align}

\boxed{\mathop{\mathrm{Var}}\left(S^{2}\right) = \frac{1}{n}\left(\mu_{4} - \frac{n - 3}{n - 1}\mu_{2}^{2}\right).}

\end{align}$$
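As a quick sanity check of the boxed formula (assuming normally distributed data, which is not needed anywhere else in this answer): for $X_{i} \sim N(\mu, \sigma^{2})$ we have $\mu_{2} = \sigma^{2}$ and $\mu_{4} = 3\sigma^{4}$, so

$$\begin{align}
\mathop{\mathrm{Var}}\left(S^{2}\right) &= \frac{1}{n}\left(3\sigma^{4} - \frac{n - 3}{n - 1}\sigma^{4}\right) \\
&= \frac{\sigma^{4}}{n} \cdot \frac{3(n - 1) - (n - 3)}{n - 1} \\
&= \frac{\sigma^{4}}{n} \cdot \frac{2n}{n - 1} = \frac{2\sigma^{4}}{n - 1},
\end{align}$$

which agrees with the familiar value obtained from $(n - 1)S^{2}/\sigma^{2} \sim \chi^{2}_{n-1}$.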

Beginning from the definition of sample variance:

$$

S^{2} := \frac{1}{n - 1} \sum_{i = 1}^{n} (X_{i} - \bar{X})^{2},

$$

let us derive the following useful lemma:

Lemma (reformulation of $S^{2}$ as half the average squared difference between two distinct data points).

Let $\mathbf{X}$ be a sample of size $n$ and $S^{2}$ be the sample variance. Then

$$

S^{2} \equiv \frac{1}{2n (n - 1)} \sum_{i=1}^{n}\sum_{j=1}^{n} (X_{i} - X_{j})^{2}.

$$

Proof.

Pick some $X_{j}$ and note that:

$$\begin{align}

S^{2} &\equiv \frac{1}{n- 1} \sum_{i=1}^{n}(X_{i} - X_{j} + X_{j} - \bar{X})^{2} \\

\implies (n - 1)S^{2} &= \sum_{i=1}^{n}(X_{i} - X_{j})^{2} + 2 \sum_{i=1}^{n} (X_{i} - X_{j})(X_{j} - \bar{X}) + \sum_{i=1}^{n}(X_{j} - \bar{X})^{2}.

\end{align}$$

Now sum this over $j$:

$$\begin{align}

n(n - 1)S^{2} &= \sum_{j=1}^{n}\sum_{i=1}^{n}(X_{i} - X_{j})^{2} + 2 \sum_{j=1}^{n} \sum_{i=1}^{n} (X_{i} - X_{j})(X_{j} - \bar{X}) + \sum_{j=1}^{n} \sum_{i=1}^{n}(X_{j} - \bar{X})^{2}.

\end{align}$$

The final term is simply $n(n - 1)S^{2}$ again, so we have:

$$\begin{align}

\sum_{i=1}^{n}\sum_{j=1}^{n}(X_{i} - X_{j})^{2} &= - 2 \sum_{i=1}^{n} \sum_{j=1}^{n} (X_{i} - X_{j})(X_{j} - \bar{X}) \\

&= 2 \sum_{i=1}^{n} \sum_{j=1}^{n} (X_{j} - \bar{X} + \bar{X} - X_{i})(X_{j} - \bar{X}) \\

&= 2 \sum_{i=1}^{n} \sum_{j=1}^{n} (X_{j} - \bar{X})^{2} + 2 \sum_{i=1}^{n} (\bar{X} - X_{i}) \underbrace{\sum_{j=1}^{n} (X_{j} - \bar{X})}_{0} \\

&= 2n(n - 1)S^{2},

\end{align}$$

giving the result.
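If you want to convince yourself of the Lemma numerically, here is a minimal sketch using exact rational arithmetic. The function names and the sample `data` are my own illustrative choices, not part of the proof.

```python
from fractions import Fraction

def sample_variance(xs):
    """Usual sample variance with divisor n - 1."""
    n = len(xs)
    mean = Fraction(sum(xs), n)
    return sum((x - mean) ** 2 for x in xs) / (n - 1)

def pairwise_form(xs):
    """Right-hand side of the Lemma: sum over all ordered pairs (i, j)."""
    n = len(xs)
    total = sum((xi - xj) ** 2 for xi in xs for xj in xs)
    return Fraction(total, 2 * n * (n - 1))

data = [2, 3, 7, 8]  # arbitrary illustrative sample
print(sample_variance(data), pairwise_form(data))  # prints: 26/3 26/3
```

Working with `Fraction` rather than floats means the two sides can be compared with `==` without any rounding tolerance.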

Using this Lemma, we can now find the variance of the sample variance. Let's begin by using a small trick: let $Z_{i} := X_{i} - \mu$. Then $\mathop{\mathbb{E}}\left(Z_{i}\right) = 0$ and $\mathop{\mathbb{E}}\left(Z_{i}^{k}\right) = \mu_{k}$ is the $k$th central moment, which makes the algebra much easier.

Proceed as follows:

$$\begin{align}

S^{2} &\equiv \frac{1}{2n(n - 1)}\sum_{i=1}^{n}\sum_{j=1}^{n} \left( \overbrace{(X_{i} - \mu)}^{Z_{i}} - \overbrace{(X_{j} - \mu)}^{Z_{j}}\right)^{2} \\

\implies \mathop{\mathrm{Var}}\left(S^{2}\right) &\equiv \mathop{\mathrm{Var}}\left[\frac{1}{2n(n - 1)} \left(\sum_{i=1}^{n} \sum_{j=1}^{n} \left(Z_{i}^{2} + Z_{j}^{2} - 2 Z_{i} Z_{j}\right)\right) \right] \\

&= \mathop{\mathrm{Var}}\left[\frac{1}{2n(n - 1)} \left(2n \sum_{i=1}^{n} Z_{i}^{2} - 2 \sum_{i=1}^{n}\sum_{j=1}^{n}Z_{i}Z_{j}\right)\right] \\

&= \mathop{\mathrm{Var}}\left[\frac{1}{n(n - 1)}\left(n \sum_{i=1}^{n} Z_{i}^{2} - \sum_{i=1}^{n}\sum_{j=1}^{n}Z_{i}Z_{j}\right)\right] \\

&= \frac{1}{n^{2}(n - 1)^{2}} \Bigg[ n^{2} \mathop{\mathrm{Var}}\left(\sum_{i=1}^{n}Z_{i}^{2}\right) + \mathop{\mathrm{Var}}\left(\sum_{i=1}^{n}\sum_{j=1}^{n} Z_{i} Z_{j}\right)\\ &\phantom{=} - 2 n \mathop{\mathrm{Cov}}\left(\sum_{i=1}^{n}Z_{i}^{2}, \sum_{i=1}^{n}\sum_{j=1}^{n}Z_{i} Z_{j}\right)\Bigg]. \tag{\(\bigstar\)}

\end{align}$$

Now we must calculate each of these variances and covariances. For the first one,

$$\begin{align}

\mathop{\mathrm{Var}}\left(\sum_{i=1}^{n}Z_{i}^{2}\right) &= n \mathop{\mathrm{Var}}\left(Z_{1}^{2}\right) \\

&= n\left(\mathop{\mathbb{E}}\left(Z_{1}^{4}\right) - \mathop{\mathbb{E}}\left(Z_{1}^{2}\right)^{2}\right) \\

&= n(\mu_{4} - \mu_{2}^{2}). \tag{\(\blacktriangle\)}

\end{align}$$

(Note that $\mu_{2}^{2}$ is the variance squared.)

For the second variance,

$$\begin{align}

\mathop{\mathrm{Var}}\left(\sum_{i=1}^{n}\sum_{j=1}^{n}Z_{i} Z_{j}\right) &= \mathop{\mathbb{E}}\left(\left(\sum_{i=1}^{n}\sum_{j=1}^{n} Z_{i}Z_{j}\right)^{2}\right) - \mathop{\mathbb{E}}\left(\sum_{i=1}^{n}\sum_{j=1}^{n} Z_{i}Z_{j}\right)^{2}.

\end{align}$$

To find the first expectation, we write:

$$\begin{align}

\mathop{\mathbb{E}}\left(\left(\sum_{i=1}^{n}\sum_{j=1}^{n} Z_{i}Z_{j}\right)^{2}\right) &= \sum_{i=1}^{n}\sum_{j=1}^{n}\sum_{k=1}^{n}\sum_{l=1}^{n} \mathop{\mathbb{E}}\left(Z_{i}Z_{j}Z_{k}Z_{l}\right).

\end{align}$$

To compute this, we must consider all the possible ways that the indices $(i,j,k,l)$ could coincide or differ. If any index is different from all the other indices, then by independence the corresponding factor splits off as $\mathop{\mathbb{E}}\left(Z_{i}\right) = 0$, so the expectation is zero. Thus, the only way for $\mathop{\mathbb{E}}\left(Z_{i}Z_{j}Z_{k}Z_{l}\right)$ to be non-zero is if all indices are the same, or if there are two distinct pairs of equal indices (e.g. $i = j \neq k = l$).

In the first case, the expectation becomes $\mathop{\mathbb{E}}\left(Z_{i}^{4}\right) = \mu_{4}$, and there are $n$ ways that this happens.

In the second case, suppose $i = j$ and $k = l$ with $i > k$ (we take one to be strictly larger to avoid double counting in what follows). Then the expectation becomes $\mathop{\mathbb{E}}\left(Z_{i}^{2} Z_{k}^{2}\right) = \mathop{\mathbb{E}}\left(Z_{i}^{2}\right) \mathop{\mathbb{E}}\left(Z_{k}^{2}\right) = \mu_{2}^{2}$. The number of ways for $i = j > k = l$ is ${n \choose 2} = \frac{1}{2}n(n - 1)$. But there are other ways of choosing two pairs. In particular, there are ${4 \choose 2} = 6$ ways of choosing which two of the four indices take the larger value, giving a total of $6 \cdot \frac{1}{2}n(n - 1) = 3n(n - 1)$ ways that the expectation equals $\mu_{2}^{2}$. Thus, $\mathop{\mathbb{E}}\left(\left(\sum_{i=1}^{n}\sum_{j=1}^{n} Z_{i}Z_{j}\right)^{2}\right) = n \mu_{4} + 3n(n - 1)\mu_{2}^{2}$.
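The two counts used here ($n$ tuples with all four indices equal and $3n(n - 1)$ tuples forming two distinct pairs) can be checked by brute force. A minimal sketch, where `count_patterns` is a helper name of my own and $n = 5$ is an arbitrary choice:

```python
from collections import Counter
from itertools import product

def count_patterns(n):
    """Classify every index tuple (i, j, k, l) by how often each value repeats.

    The key (4,) means all four indices are equal; (2, 2) means two distinct
    pairs. Every other pattern contains an index that appears exactly once.
    """
    counts = Counter()
    for idx in product(range(n), repeat=4):
        pattern = tuple(sorted(Counter(idx).values(), reverse=True))
        counts[pattern] += 1
    return counts

n = 5
counts = count_patterns(n)
all_equal = counts[(4,)]
two_pairs = counts[(2, 2)]
print(all_equal, two_pairs)  # prints: 5 60
```

The remaining patterns, `(3, 1)`, `(2, 1, 1)` and `(1, 1, 1, 1)`, all contain a lone index, which is exactly why they contribute nothing to the expectation.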

Now we must calculate the expectation $\mathop{\mathbb{E}}\left(\sum_{i=1}^{n}\sum_{j=1}^{n}Z_{i}Z_{j}\right) = \sum_{i=1}^{n}\sum_{j=1}^{n}\mathop{\mathbb{E}}\left(Z_{i}Z_{j}\right)$. Since $Z_{i}$ and $Z_{j}$ are independent, $\mathop{\mathbb{E}}\left(Z_{i}Z_{j}\right) = 0$ whenever $i \neq j$. Thus, the expectation becomes $\mathop{\mathbb{E}}\left(\sum_{i=1}^{n}\sum_{j=1}^{n}Z_{i}Z_{j}\right) = \mathop{\mathbb{E}}\left(\sum_{i=1}^{n}Z_{i}^{2}\right) = n \mu_{2}$.

Subtracting $\mathop{\mathbb{E}}\left(\sum_{i=1}^{n}\sum_{j=1}^{n}Z_{i}Z_{j}\right)^{2} = n^{2}\mu_{2}^{2}$ from the previous expectation, we have:

$$\begin{align}

\mathop{\mathrm{Var}}\left(\sum_{i=1}^{n}\sum_{j=1}^{n}Z_{i}Z_{j}\right) &= n \mu_{4} + 2 n^{2} \mu_{2}^{2} - 3n \mu_{2}^{2}. \tag{\(\spadesuit\)}

\end{align}$$

Finally, we calculate the covariance:

$$\begin{align}

\mathop{\mathrm{Cov}}\left(\sum_{i=1}^{n}Z_{i}^{2}, \sum_{i=1}^{n}\sum_{j=1}^{n}Z_{i}Z_{j}\right) &= \mathop{\mathbb{E}}\left(\sum_{i=1}^{n}\sum_{j=1}^{n}\sum_{k=1}^{n}Z_{i}^{2}Z_{j}Z_{k}\right) - \mathop{\mathbb{E}}\left(\sum_{i=1}^{n}Z_{i}^{2}\right)\mathop{\mathbb{E}}\left(\sum_{i=1}^{n}\sum_{j=1}^{n}Z_{i}Z_{j}\right).

\end{align}$$

We have already found that the final two expectations are each equal to $n \mu_{2}$, so the second term becomes $n^{2} \mu_{2}^{2}$.

For $\sum_{i=1}^{n}\sum_{j=1}^{n}\sum_{k=1}^{n} \mathop{\mathbb{E}}\left(Z_{i}^{2}Z_{j}Z_{k}\right)$, notice that the expectation is $0$ whenever $j \neq k$. When $i = j = k$, the expectation is $\mathop{\mathbb{E}}\left(Z_{1}^{4}\right) = \mu_{4}$ and there are $n$ ways of achieving this. When $i \neq j = k$, the expectation is $\mu_{2}^{2}$ and there are $n(n - 1)$ ways of achieving this (now the order of $i, j$ matters, so we do double count).

We therefore get:

$$\begin{align}

\mathop{\mathrm{Cov}}\left(\sum_{i=1}^{n}Z_{i}^{2}, \sum_{i=1}^{n}\sum_{j=1}^{n}Z_{i}Z_{j}\right) &= n(\mu_{4} - \mu_{2}^{2}). \tag{\(\clubsuit\)}

\end{align}$$
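The three intermediate results $\blacktriangle$, $\spadesuit$ and $\clubsuit$ can be checked exactly on a small example. This is only a sketch: the three-point distribution, the sample size $n = 3$, and all function names are hypothetical choices of mine for illustration.

```python
from fractions import Fraction
from itertools import product

# Hypothetical three-point distribution, chosen only for illustration.
support = [0, 1, 3]
probs = [Fraction(1, 2), Fraction(1, 3), Fraction(1, 6)]
n = 3

mu = sum(p * x for x, p in zip(support, probs))

def central_moment(k):
    return sum(p * (x - mu) ** k for x, p in zip(support, probs))

mu2, mu4 = central_moment(2), central_moment(4)

def expect(f):
    """Exact expectation of f(Z_1, ..., Z_n) over all len(support)**n outcomes."""
    total = Fraction(0)
    for outcome in product(range(len(support)), repeat=n):
        weight = Fraction(1)
        for idx in outcome:
            weight *= probs[idx]  # independence: probabilities multiply
        zs = [support[idx] - mu for idx in outcome]
        total += weight * f(zs)
    return total

def sum_of_squares(zs):  # sum_i Z_i^2
    return sum(z * z for z in zs)

def double_sum(zs):  # sum_i sum_j Z_i Z_j, which equals (sum_i Z_i)^2
    return sum(zs) ** 2

e_a, e_b = expect(sum_of_squares), expect(double_sum)
var_a = expect(lambda zs: sum_of_squares(zs) ** 2) - e_a ** 2
var_b = expect(lambda zs: double_sum(zs) ** 2) - e_b ** 2
cov_ab = expect(lambda zs: sum_of_squares(zs) * double_sum(zs)) - e_a * e_b

print(var_a == n * (mu4 - mu2 ** 2))                               # triangle
print(var_b == n * mu4 + 2 * n ** 2 * mu2 ** 2 - 3 * n * mu2 ** 2)  # spade
print(cov_ab == n * (mu4 - mu2 ** 2))                              # club
```

All three comparisons are exact because everything is a `Fraction`, so each line should print `True`.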

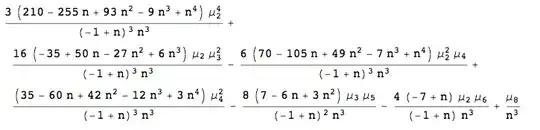

Substituting the values $\blacktriangle$, $\spadesuit$ and $\clubsuit$ that we just found into $\bigstar$, we get

$$\begin{align}

\mathop{\mathrm{Var}}\left(S^{2}\right) &= \frac{1}{n^{2}(n - 1)^{2}} \left[(n^{3} - 2n^{2} + n)\mu_{4} - (n^{3} - 4n^{2} + 3n) \mu_{2}^{2}\right] \\

&= \frac{1}{n^{2}(n - 1)^{2}} \left[n(n - 1)^{2} \mu_{4} - n(n - 1)(n - 3)\mu_{2}^{2}\right] \\

&= \frac{1}{n} \left(\mu_{4} - \frac{n - 3}{n - 1} \mu_{2}^{2}\right)

\end{align}$$
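For anyone who would like to see the final formula agree with a direct computation, here is a minimal sketch that enumerates every outcome of $n$ rolls of a fair die and compares the exact variance of $S^{2}$ with the closed form. The die, the choice $n = 4$, and the function names are my own assumptions for illustration.

```python
from fractions import Fraction
from itertools import product

def sample_variance(xs):
    """Usual sample variance with divisor n - 1."""
    n = len(xs)
    mean = Fraction(sum(xs), n)
    return sum((x - mean) ** 2 for x in xs) / (n - 1)

def exact_var_of_s2(faces, n):
    """Var(S^2) for n i.i.d. draws uniform on `faces`, by full enumeration."""
    values = [sample_variance(o) for o in product(faces, repeat=n)]
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values) / len(values)

def formula_var_of_s2(faces, n):
    """Closed form (mu_4 - (n - 3)/(n - 1) * mu_2^2) / n."""
    mu = Fraction(sum(faces), len(faces))
    mu2 = sum((x - mu) ** 2 for x in faces) / len(faces)
    mu4 = sum((x - mu) ** 4 for x in faces) / len(faces)
    return (mu4 - Fraction(n - 3, n - 1) * mu2 ** 2) / n

faces = [1, 2, 3, 4, 5, 6]  # fair six-sided die
n = 4                       # 6**4 = 1296 outcomes, small enough to enumerate
print(exact_var_of_s2(faces, n) == formula_var_of_s2(faces, n))
```

I deliberately avoided $n = 3$ here, since the $\mu_{2}^{2}$ term vanishes in that case and the check would be less informative.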