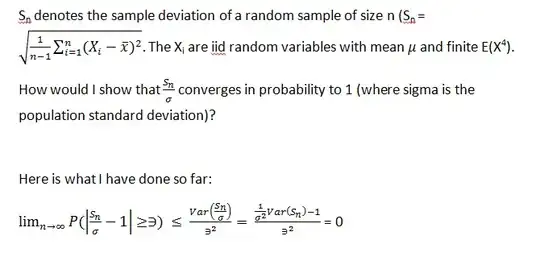

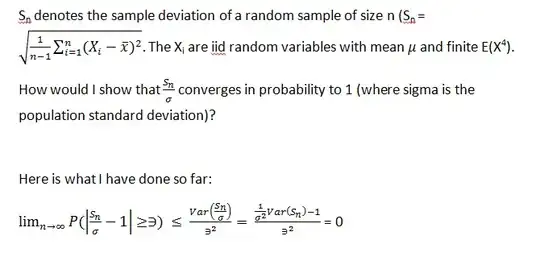

Can you please help verify whether what I have done is correct for the question below? I applied Chebyshev's theorem in the first step, but I am worried that there may be mathematical errors or misapplied theorems in my solution. Thanks for the help.

One way to do this is to show that $\frac{S_n^2}{\sigma^2}$ converges in probability to 1 using the same method you tried (invoking Chebyshev's inequality), and then to note (or prove, if you did not know it already) that if $X_1, X_2, \ldots$ converges in probability to $X$ and $g$ is a continuous function, then $g(X_{1}), g(X_{2}), \ldots$ converges in probability to $g(X)$. This last fact is the continuous mapping theorem.
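
Here is a rough sketch of how those two steps might look. The original question is only available as an image, so this rests on assumptions that may not match your setup: that $S_n^2$ is the unbiased sample variance, so $E[S_n^2] = \sigma^2$ with $\sigma > 0$; that $\operatorname{Var}(S_n^2) \to 0$ as $n \to \infty$; and that the goal is to show $S_n \to \sigma$ in probability. Under those assumptions, Chebyshev's inequality gives, for every $\varepsilon > 0$,

$$
P\left( \left| \frac{S_n^2}{\sigma^2} - 1 \right| \ge \varepsilon \right)
\le \frac{\operatorname{Var}\left( S_n^2 / \sigma^2 \right)}{\varepsilon^2}
= \frac{\operatorname{Var}(S_n^2)}{\sigma^4 \varepsilon^2}
\longrightarrow 0 \quad \text{as } n \to \infty,
$$

so $\frac{S_n^2}{\sigma^2}$ converges in probability to 1. Taking $g(x) = \sqrt{x}$, which is continuous on $[0, \infty)$, the continuous mapping theorem then gives

$$
\frac{S_n}{\sigma} = g\left( \frac{S_n^2}{\sigma^2} \right) \xrightarrow{\;P\;} g(1) = 1,
$$

and multiplying by the constant $\sigma$ yields $S_n \to \sigma$ in probability. If your sample is not one for which $\operatorname{Var}(S_n^2) \to 0$ is already known, that limit would need to be checked separately.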