I read this first:

Divergence (div) is “flux density”—the amount of flux entering or leaving a point. Think of it as the rate of flux expansion (positive divergence) or flux contraction (negative divergence). If you measure flux in bananas (and c’mon, who doesn’t?), a positive divergence means your location is a source of bananas. You’ve hit the Donkey Kong jackpot.

Wikipedia has:

In physical terms, the divergence of a three dimensional vector field is the extent to which the vector field flow behaves like a source or a sink at a given point. It is a local measure of its "outgoingness"—the extent to which there is more exiting an infinitesimal region of space than entering it.

What puzzles me is, what's the direction? Doesn't divergence need a direction too?

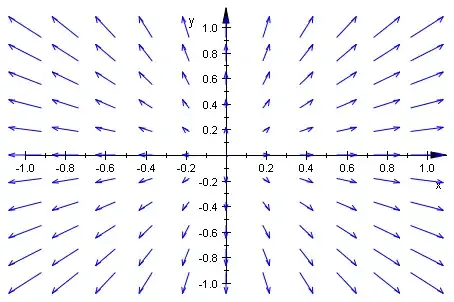

For example, say you have this 2D vector field:

$$ F(x,y) = (x,y) $$

This field is everywhere "spreading" from the origin.

The divergence is:

$$ \nabla \cdot (x,y) = 2 $$
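Spelling that computation out, using the standard Cartesian formula for divergence in 2D (the component names $F_x = x$ and $F_y = y$ are just my notation):

$$ \nabla \cdot F = \frac{\partial F_x}{\partial x} + \frac{\partial F_y}{\partial y} = \frac{\partial x}{\partial x} + \frac{\partial y}{\partial y} = 1 + 1 = 2 $$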

I.e. everywhere positive. Everywhere is a "source". At every point, if you draw an infinitesimally small circle, as in the Wikipedia definition, then no matter how small it is, that circle will see more "stuff" leaving it than entering it, i.e. everywhere is a source. But that only makes sense if you are talking **as measured using a vector from the origin**. Nowhere in the definitions of divergence do I see this last bolded comment mentioned, which makes me uneasy.
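To check the small-circle claim numerically, here is a rough sketch that approximates the net outward flux through a small circle and divides by the circle's area. The helper names (`net_outward_flux`, `flux_density`, `spreading`), the radius, and the sample centers are all my own choices for illustration, and the midpoint-rule sum is only an approximation of the flux integral.

```python
import math

def net_outward_flux(field, cx, cy, r, n=10_000):
    """Approximate the net outward flux of `field` through the circle
    of radius r centered at (cx, cy), using a midpoint-rule sum."""
    total = 0.0
    dtheta = 2 * math.pi / n
    for k in range(n):
        theta = (k + 0.5) * dtheta
        # Outward unit normal of the circle at angle theta.
        nx, ny = math.cos(theta), math.sin(theta)
        # Field value at the corresponding point on the circle.
        fx, fy = field(cx + r * nx, cy + r * ny)
        # (F . n) times the arc length element r * dtheta.
        total += (fx * nx + fy * ny) * r * dtheta
    return total

def flux_density(field, cx, cy, r=1e-3):
    """Net outward flux per unit area of a small circle at (cx, cy)."""
    return net_outward_flux(field, cx, cy, r) / (math.pi * r * r)

def spreading(x, y):
    """The field F(x, y) = (x, y) from the example."""
    return (x, y)

# Sample centers are arbitrary; only one of them is the origin.
for center in [(0.0, 0.0), (3.0, -1.0), (-5.0, 2.0)]:
    print(center, flux_density(spreading, *center))
```

Each printed value should come out close to 2, matching $\nabla \cdot (x,y) = 2$, whichever center is used.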

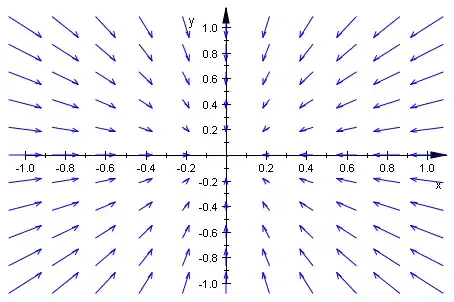

Take a vector field with values in the opposite direction:

$$ F(x,y) = (-x,-y) $$

The divergence everywhere is

$$ \nabla \cdot (-x,-y) = -2 $$

I.e. everywhere negative. Everywhere is a "sink", i.e. the field "slows down" at every point. If you are talking about every point, and the direction of flow is actually towards the origin, then and only then is the function everywhere "slowing down".

So like, what exactly is the divergence at a point? Does it really make sense? Am I right about having to consider the direction as towards the origin in order for the "sources/sinks" idea to make sense? Where is this stated?