An attempt at approximating the logarithm function $\ln(x)$: Could it be extended to big numbers?

PS: Thanks, everyone, for your comments and interesting answers showing how the logarithm function is currently calculated numerically. However, so far nobody has answered the question I am actually asking, which concerns formula \eqref{Eq. 1}: Is it correctly calculated? Could a formula for the logarithm of large numbers be found with it? By "big/large numbers" I mean the same sense in which Stirling's approximation formula approximates the factorial function at large values.

Intro__________

In a previous question I found that the following approximation could be used, where the second form follows from substituting $x \leftarrow \ln\left(x^{\ln(2)}\right)$ in the first: $$\ln\left(1+e^x\right)\approx \frac{x}{1-e^{-\frac{x}{\ln(2)}}},\ (x\neq 0) \quad \Rightarrow \quad \dfrac{\ln\left(1+x^{\ln(2)}\right)}{\ln\left(x^{\ln(2)}\right)} \approx \frac{x}{x-1}$$
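A quick numerical sanity check of the starting approximation and of the substitution can be sketched in Python. The helper names `lhs_exact` and `rhs_approx` are my own, hypothetical choices. The script evaluates both sides for a few values of $x$ and confirms that, with $t=\ln(2)\ln(x)$, the factor $\frac{1}{1-e^{-t/\ln(2)}}$ becomes $\frac{x}{x-1}$.

```python
import math

LN2 = math.log(2)

def lhs_exact(t: float) -> float:
    """ln(1 + e^t), the left-hand side."""
    return math.log1p(math.exp(t))

def rhs_approx(t: float) -> float:
    """t / (1 - e^{-t/ln 2}) for t != 0; expm1 keeps precision near t = 0."""
    return t / (-math.expm1(-t / LN2))

for t in (-3.0, -1.0, 1.0, 3.0):
    print(f"t = {t:+.1f}: exact = {lhs_exact(t):.6f}, approx = {rhs_approx(t):.6f}")

# With t = ln(x^{ln 2}) = ln(2) ln(x): e^t = x^{ln 2} and e^{-t/ln 2} = 1/x,
# so rhs_approx(t) / t = x / (x - 1), which gives the second form.
x = 5.0
t = LN2 * math.log(x)
print(lhs_exact(t) / t, x / (x - 1))
```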

Later I noticed that the second form can be rewritten as $$\dfrac{\ln\left(1+x^{\ln(2)}\right)}{\ln(2)} \approx \frac{x\ln\left(x\right)}{x-1}$$ and, differentiating both sides, $$\frac{x^{\ln(2)-1}}{1+x^{\ln(2)}} \approx \frac{1}{x-1}-\frac{\ln(x)}{(x-1)^2}$$
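The differentiation step can be checked numerically with a central finite difference. This is only a sketch: the helper `central_diff` and the step `h=1e-5` are arbitrary choices of mine, and the check simply compares the numerical derivative of each side with the closed forms written above.

```python
import math

LN2 = math.log(2)

def lhs(x: float) -> float:
    """ln(1 + x^ln2) / ln 2."""
    return math.log1p(x ** LN2) / LN2

def rhs(x: float) -> float:
    """x ln(x) / (x - 1)."""
    return x * math.log(x) / (x - 1)

def lhs_prime(x: float) -> float:
    """Claimed derivative of lhs: x^(ln2 - 1) / (1 + x^ln2)."""
    return x ** (LN2 - 1) / (1 + x ** LN2)

def rhs_prime(x: float) -> float:
    """Claimed derivative of rhs: 1/(x-1) - ln(x)/(x-1)^2."""
    return 1 / (x - 1) - math.log(x) / (x - 1) ** 2

def central_diff(f, x: float, h: float = 1e-5) -> float:
    """Symmetric finite-difference estimate of f'(x)."""
    return (f(x + h) - f(x - h)) / (2 * h)

for x in (2.0, 5.0, 20.0):
    print(f"x = {x}: lhs' mismatch = {central_diff(lhs, x) - lhs_prime(x):.1e}, "
          f"rhs' mismatch = {central_diff(rhs, x) - rhs_prime(x):.1e}")
```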

From the differentiated relation I could isolate the logarithm as an approximating function: $$\ln(x) \approx f_1(x) = \frac{\left(x-1\right)\left(x+x^{\ln(2)}\right)}{x\left(1+x^{\ln(2)}\right)}$$

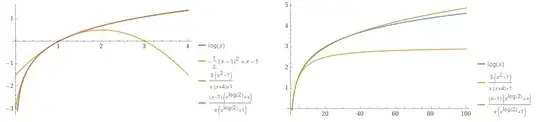

which turned out to be quite a good approximation: sacrificing a little accuracy, it follows the logarithmic curve for longer than Taylor expansions or Padé approximants of similar order. For example, the 2nd-order Taylor series centered at $x=1$ is $T(x)=(x-1)-\frac12 (x-1)^2$, and the Padé approximant of order 2 in both numerator and denominator at $x=1$ is $P(x)=\frac{3(x^2-1)}{x(x+4)+1}$; both are compared with $f_1(x)$ in figures 1 and 2:

It follows the graph quite well, and for much longer than the classic approximations, with only a few small crossovers; but, as can be seen at the end, it still departs from the logarithmic curve as $x$ increases.
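To reproduce the comparison without the figures, here is a small Python sketch. The function names are illustrative only. It evaluates $f_1$, the Taylor polynomial $T$ and the Padé approximant $P$ against `math.log`, and also confirms that isolating $\ln(x)$ from the differentiated relation, $(x-1)-(x-1)^2\frac{x^{\ln(2)-1}}{1+x^{\ln(2)}}$, simplifies to the closed form of $f_1$.

```python
import math

LN2 = math.log(2)

def f1(x: float) -> float:
    """Closed form (x-1)(x + x^ln2) / (x (1 + x^ln2))."""
    u = x ** LN2
    return (x - 1) * (x + u) / (x * (1 + u))

def f1_isolated(x: float) -> float:
    """Same approximation before simplification: (x-1) - (x-1)^2 x^(ln2-1) / (1 + x^ln2)."""
    return (x - 1) - (x - 1) ** 2 * x ** (LN2 - 1) / (1 + x ** LN2)

def taylor2(x: float) -> float:
    """2nd-order Taylor polynomial of ln(x) at x = 1."""
    return (x - 1) - 0.5 * (x - 1) ** 2

def pade22(x: float) -> float:
    """[2/2] Padé approximant of ln(x) at x = 1."""
    return 3 * (x * x - 1) / (x * (x + 4) + 1)

for x in (0.5, 2.0, 10.0, 100.0, 1000.0):
    exact = math.log(x)
    print(f"x = {x:>7}: f1 err = {f1(x) - exact:+.4f}, "
          f"T err = {taylor2(x) - exact:+.4f}, P err = {pade22(x) - exact:+.4f}")
```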

Looking at how the approximation was obtained, notice that further differentiation could be used to obtain other approximations of the logarithm function, since every differentiation yields the form: $$\mathbb{P}_1(x) \approx \mathbb{P}_2(x)+\ln(x)\cdot \mathbb{P}_3(x) \Rightarrow \ln(x) \approx \frac{\mathbb{P}_1(x)-\mathbb{P}_2(x)}{\mathbb{P}_3(x)}$$

with $\mathbb{P}_i(x)$ some polynomial-like functions (involving roots, and fractions combining roots and polynomials).

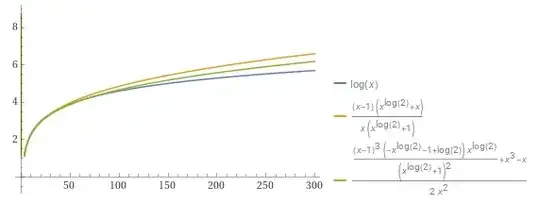

For example, the next differentiation step leads to the approximation: $$\ln(x)\approx f_2(x) = \frac12 (x-1)^3 \left( \frac{x}{(x-1)^3}-\frac{1}{x(x-1)^3}+\frac{x^{\ln(2)-2}\left(\ln(2)-1-x^{\ln(2)}\right)}{\left(1+x^{\ln(2)}\right)^2}\right)$$

which can be seen in figure 3: it sacrifices accuracy at the beginning but looks like it converges for larger values, so I wonder whether this process could indeed be producing approximations of the logarithmic function.
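A direct transcription of $f_2$ makes it easy to compare it with $f_1$ at a few points. This is a sketch with illustrative names. Because of the $(x-1)^3$ factors, $f_2$ as written is undefined exactly at $x=1$, so that point is skipped.

```python
import math

LN2 = math.log(2)

def f1(x: float) -> float:
    u = x ** LN2
    return (x - 1) * (x + u) / (x * (1 + u))

def f2(x: float) -> float:
    """Second-order approximation, transcribed term by term (undefined at x = 1)."""
    u = x ** LN2
    bracket = (x / (x - 1) ** 3
               - 1 / (x * (x - 1) ** 3)
               + x ** (LN2 - 2) * (LN2 - 1 - u) / (1 + u) ** 2)
    return 0.5 * (x - 1) ** 3 * bracket

for x in (0.5, 2.0, 10.0, 100.0, 1000.0):
    exact = math.log(x)
    print(f"x = {x:>7}: f1 err = {f1(x) - exact:+.4f}, f2 err = {f2(x) - exact:+.4f}")
```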

Main question_______________

Considering that the derivative of the logarithm is $\ln'(x) = \frac1x$, that $\frac{d^n}{dx^n}\left(\frac{x}{x-1}\right) = \frac{n!}{(x-1)(1-x)^n}$ (for $n\geq 1$) and $\frac{d^n}{dx^n}\left(\frac{1}{x}\right) = \frac{(-1)^{n}\ n!}{x^{n+1}}$, and the product rule for higher derivatives $d^{(n)}(uv) =\sum\limits_{k=0}^n {n \choose k}d^{(n-k)}(u)\ d^{(k)}(v)$, then, if I haven't messed up, the $n$th-order approximation is given by:

$$\ln(x) \approx f_n(x) = \dfrac{ \sum\limits_{k=0}^{n-1}{{n-1}\choose k} \dfrac{d^{k}}{dx^{k}}\left(\dfrac{1}{x}\right)\dfrac{d^{n-1-k}}{dx^{n-1-k}}\left(\dfrac{1}{1+x^{-\ln(2)}}\right) - \sum\limits_{k=1}^{n-1}{{n-1}\choose k} \dfrac{d^k}{dx^k}\left(\dfrac{1}{x}\right)\dfrac{d^{n-1-k}}{dx^{n-1-k}}\left(\dfrac{x}{x-1}\right)}{\dfrac{n!}{(x-1)(1-x)^n}} \label{Eq. 1} \tag{Eq. 1}$$
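Since a formula like \eqref{Eq. 1} is easy to get wrong by hand, one way to check it is to carry out the same repeated differentiation numerically. The sketch below uses hypothetical helper names (`f_n`, `series_mul`, `series_inv`, `gen_binom`) and works with Taylor coefficients at a point $x_0$ instead of derivatives. Dividing the Leibniz expansion by $n!$ turns it into the Cauchy product $[h^n]\,G = \sum_{k=0}^{n}[h^k]\ln\cdot[h^{n-k}]R$, where $G(x)=\frac{\ln(1+x^{\ln(2)})}{\ln(2)}$, $R(x)=\frac{x}{x-1}$ and $[h^k]$ denotes the $k$-th Taylor coefficient in $h=x-x_0$. The $k=0$ term contains the unknown $\ln(x_0)$. For $n=1,2$ this should reproduce $f_1$ and $f_2$, so it can serve as a reference when checking \eqref{Eq. 1} for small $n$.

```python
import math

LN2 = math.log(2)

def gen_binom(a: float, k: int) -> float:
    """Generalized binomial coefficient C(a, k) for real a and integer k >= 0."""
    out = 1.0
    for i in range(k):
        out *= (a - i) / (i + 1)
    return out

def series_mul(a, b, n):
    """First n coefficients of the product of two power series."""
    return [sum(a[i] * b[m - i] for i in range(m + 1)) for m in range(n)]

def series_inv(a, n):
    """First n coefficients of 1/a(h), assuming a[0] != 0."""
    inv = [1.0 / a[0]] + [0.0] * (n - 1)
    for m in range(1, n):
        inv[m] = -sum(a[i] * inv[m - i] for i in range(1, m + 1)) / a[0]
    return inv

def f_n(x0: float, n: int) -> float:
    """Differentiate G(x) ~ ln(x) * x/(x-1) n times at x0 (n >= 1) and solve for ln(x0)."""
    # Taylor coefficients in h = x - x0 of 1/x and of 1 + x^(-ln 2).
    inv_x = [(-1) ** k / x0 ** (k + 1) for k in range(n)]
    p = [x0 ** (-LN2) * gen_binom(-LN2, k) / x0 ** k for k in range(n)]
    p[0] += 1.0
    # G'(x) = (1/x) / (1 + x^(-ln 2)); the h^n coefficient of G is G'_(n-1) / n.
    g_prime = series_mul(inv_x, series_inv(p, n), n)
    G_n = g_prime[n - 1] / n
    # Coefficients of R(x) = x/(x-1) = 1 + 1/(x-1), and of ln(x) for k >= 1.
    R = [x0 / (x0 - 1)] + [(-1) ** j / (x0 - 1) ** (j + 1) for j in range(1, n + 1)]
    L = [0.0] + [(-1) ** (k + 1) / (k * x0 ** k) for k in range(1, n + 1)]  # L[0] = ln(x0), unknown
    known = sum(L[k] * R[n - k] for k in range(1, n + 1))
    return (G_n - known) / R[n]

for x0 in (2.0, 10.0, 100.0):
    print(x0, [round(f_n(x0, n) - math.log(x0), 6) for n in range(1, 7)])
```

This uses plain floating point, so very large $n$ may call for exact or multiprecision arithmetic.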

But here I got stuck:

- Is it possible to use this to find a formula for large $x$ values? I was aiming to form a fraction keeping only the highest-order polynomials in $n$ and later replace $n$ with $x$, but I couldn't find a formula clearer than this one. This assumes the formula is right; I hope you can review it. For example, I don't know whether it is possible to factor the $\{n-1-k\}$ terms.

- Does it converge to the logarithm for large $x$ values as $n$ increases? I really don't know whether this is true.

Motivation____________

After receiving a comment criticizing this answer, I realized that approximating $\ln(x) \approx x(x^{\frac1x}-1)$ for large numbers isn't going to be useful, since $x^{\frac1x}$ seems even harder to calculate (even though it looks accurate at large values). So, assuming that $x^b$ with $b>0$ is not difficult to compute in this sense (I hope it is not; I am not really sure whether using $x^{\ln(2)}$ makes sense), I was aiming to find some ratio of polynomials $\ln(x) \approx f_n(x) = \dfrac{\mathbb{P}_1(x^n)}{\mathbb{P}_2(x^n)}$ and later see how it behaves when replacing $n \leftarrow x$, so that $\ln(x) \approx f(x) = \dfrac{\mathbb{P}_1(x^x)}{\mathbb{P}_2(x^x)}$.

Added later

Using Stirling's approximation, I obtained the following: $$\begin{array}{r c l} \ln(x+1) & = &\ln\left((x+1)!\right)- \ln(x!) \\ \overset{\text{Stirling's}}{\Rightarrow} \ln(x+1)\left(x+\frac12\right) & \approx & 1 + \ln(x)\left(x+\frac12\right) \\ \Rightarrow \ln(x+1)-\ln(x) = \ln\left(1+\frac{1}{x}\right) &\approx & \frac{2}{2x+1} \end{array}$$
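The last line can be checked against the exact identity $\ln\left(1+\frac1x\right) = 2\operatorname{artanh}\left(\frac{1}{2x+1}\right) = 2\sum_{m\ge 0}\frac{1}{(2m+1)(2x+1)^{2m+1}}$, of which $\frac{2}{2x+1}$ is the first term. The error should therefore shrink roughly like $\frac{2}{3(2x+1)^3}$. Here is a small sketch; the name `stirling_step` is only illustrative.

```python
import math

def stirling_step(x: float) -> float:
    """Approximation ln(1 + 1/x) ~ 2/(2x + 1) derived from Stirling's formula."""
    return 2.0 / (2.0 * x + 1.0)

for x in (1.0, 10.0, 100.0, 1000.0):
    exact = math.log1p(1.0 / x)
    via_atanh = 2.0 * math.atanh(1.0 / (2.0 * x + 1.0))  # exact identity, for comparison
    err = exact - stirling_step(x)
    predicted = 2.0 / (3.0 * (2.0 * x + 1.0) ** 3)       # leading error term
    print(f"x = {x:>6}: exact = {exact:.10f}, atanh form = {via_atanh:.10f}, "
          f"error = {err:.3e}, leading error term = {predicted:.3e}")
```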

Unfortunately, I haven't yet found a simple approximation better than $f_1(x)$, nor anything useful for large $x$ values.

2nd later comments

The formula \eqref{Eq. 1} was corrected, since it was originally wrong. I still haven't found the formula for general $n$, but by accident I recovered in Wolfram Alpha another known expansion that works better than Taylor's: $$\ln(x) = \sum\limits_{k=1}^\infty \frac{1}{k}\left(\dfrac{x-1}{x}\right)^k$$ and I suspect that the second sum of \eqref{Eq. 1} leads to it, cancelling the logarithm in the approximate "equality", but I still can't get the constants to match.
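For comparison, here is a sketch of the partial sums of this expansion next to the Taylor series at $x=1$; the function names are illustrative. The expansion is the Mercator series $-\ln(1-y)=\sum_{k\ge1}\frac{y^k}{k}$ evaluated at $y=\frac{x-1}{x}$. Since $\frac{x-1}{x}\in(-1,1)$ for every $x>\frac12$, it converges for all $x>\frac12$, while the Taylor series $\sum_{k\ge1}\frac{(-1)^{k+1}}{k}(x-1)^k$ only converges for $0<x\le 2$.

```python
import math

def classic_series(x: float, n: int) -> float:
    """Partial sum of sum_{k=1}^n (1/k) ((x-1)/x)^k, convergent for x > 1/2."""
    y = (x - 1.0) / x
    return sum(y ** k / k for k in range(1, n + 1))

def taylor_at_1(x: float, n: int) -> float:
    """Partial sum of the Taylor series of ln(x) at x = 1, convergent for 0 < x <= 2."""
    t = x - 1.0
    return sum((-1) ** (k + 1) * t ** k / k for k in range(1, n + 1))

for x in (1.5, 2.0, 10.0):
    for n in (5, 20, 100):
        print(f"x = {x}, n = {n}: classic err = {classic_series(x, n) - math.log(x):+.2e}, "
              f"Taylor err = {taylor_at_1(x, n) - math.log(x):+.2e}")
```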

Last update

I give up. After trying much harder, I think I found (not without complications, because $\dfrac{d^n}{dx^n} \ln(x)$ changes its form between $n=0$ and $n>0$) that the approximation takes the form: $$n=0:\qquad \dfrac{\ln(1+x^{\ln(2)})}{\ln(2)}\approx \ln(x)\dfrac{x}{x-1}$$ $$n=1:\qquad \dfrac{1}{x+x^{1-\ln(2)}}\approx \dfrac{1}{x-1}-\dfrac{\ln(x)}{(x-1)^2}\quad \Rightarrow f_1(x)$$

$$\begin{array}{r c l} n>1: \ln(x) & \approx & \sum\limits_{k=1}^n \frac{1}{k}\left(\frac{x-1}{x}\right)^k +\frac{1}{n}\left(\frac{x-1}{x}\right)^n \frac{(x-1)}{(1+x^{\ln(2)})}\sum\limits_{q=0}^{n-1} \sum\limits_{k=0}^q \sum\limits_{j=0}^k \dfrac{(-1)^{j+k-q}{k \choose j}}{(1+x^{-\ln(2)})^k}{(k-j)\ln(2) \choose q} \\ \Rightarrow n\geq 1: \ln(x) & \approx & \sum\limits_{k=1}^{n-1} \frac{1}{k}\left(\frac{x-1}{x}\right)^k + \frac{1}{n}\left(\frac{x-1}{x}\right)^n \left(\dfrac{x+x^{\ln(2)}}{1+x^{\ln(2)}}\right) + \frac{1}{n}\left(\frac{x-1}{x}\right)^n \frac{(x-1)}{(1+x^{\ln(2)})}\sum\limits_{q=1}^{n-1} \sum\limits_{k=0}^q \sum\limits_{j=0}^k \dfrac{(-1)^{j+k-q}{k \choose j}}{(1+x^{-\ln(2)})^k}{(k-j)\ln(2) \choose q} \end{array}$$

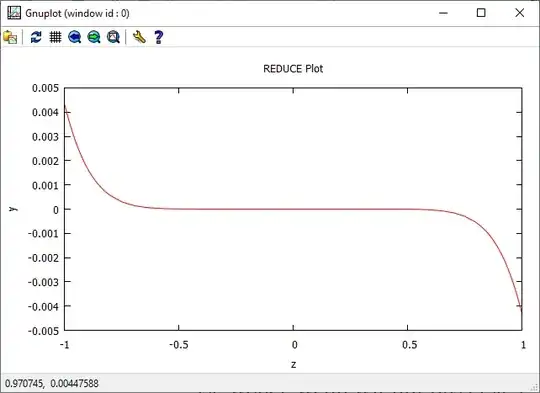

Unfortunately, I wasn't able to simplify it further or to relate it to $f_1(x)$ (which works amazingly well for small $n$). Also, from the graph in Desmos, the approximation seems to work well at low $n$ values for $x<1000$, but after that it doesn't gain much more accuracy. Still, I believe it converges to the logarithm: the first part does approach the logarithm, and the awful second part, if I am not mistaken, should go to zero for large $n$ and $x$, since it is proportional to $\frac{1}{n}$ and the degrees of the polynomials in each term's numerator and denominator match (I hope someone can prove it; I wasn't able to).
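To explore the convergence question outside Desmos, the $n\geq 1$ formula above can be transcribed directly. This is a sketch with hypothetical helper names (`inner_sum`, `last_update_approx`). The generalized binomial coefficient ${a \choose q} = \frac{a(a-1)\cdots(a-q+1)}{q!}$ is assumed to be the intended meaning of ${(k-j)\ln(2) \choose q}$, since its upper entry is not an integer. For $n=1$ and $n=2$ the output coincides with $f_1$ and $f_2$, which is a useful consistency check. For larger $n$ the script only prints the error, so it does not settle the convergence question.

```python
import math

LN2 = math.log(2)

def gen_binom(a: float, q: int) -> float:
    """Generalized binomial coefficient C(a, q) = a(a-1)...(a-q+1)/q! for real a."""
    out = 1.0
    for i in range(q):
        out *= (a - i) / (i + 1)
    return out

def inner_sum(x: float, q: int) -> float:
    """Double sum over k and j for a fixed q."""
    w = 1.0 / (1.0 + x ** (-LN2))  # 1/(1 + x^(-ln 2))
    total = 0.0
    for k in range(q + 1):
        for j in range(k + 1):
            sign = 1.0 if (j + k - q) % 2 == 0 else -1.0
            total += sign * math.comb(k, j) * w ** k * gen_binom((k - j) * LN2, q)
    return total

def last_update_approx(x: float, n: int) -> float:
    """The n >= 1 form of the approximation above."""
    y = (x - 1.0) / x
    u = x ** LN2
    head = sum(y ** k / k for k in range(1, n))
    middle = y ** n / n * (x + u) / (1 + u)
    tail = y ** n / n * (x - 1) / (1 + u) * sum(inner_sum(x, q) for q in range(1, n))
    return head + middle + tail

for x in (10.0, 100.0, 1000.0):
    print(x, [round(last_update_approx(x, n) - math.log(x), 4) for n in range(1, 9)])
```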

Another interesting thing: using the approximation $$\ln(x) \approx \frac{(x-1)}{x\ln(2)}\ln(1+x^{\ln(2)})$$

and substituting the classic expansion $\sum_{k=1}^n \frac{1}{k}\left(\frac{x-1}{x}\right)^k$ for $\ln(1+x^{\ln(2)})$ (that is, evaluating it at $1+x^{\ln(2)}$ instead of $x$), you get something that converges a bit faster than the original one: $$\ln(x) \approx \frac{(x-1)}{x\ln(2)}\sum\limits_{k=1}^n \frac{1}{k}\left(\dfrac{x^{\ln(2)}}{1+x^{\ln(2)}}\right)^k$$
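Here is a sketch comparing the two partial sums; the function names are illustrative. Since $\sum_{k\ge1}\frac1k\left(\frac{x^{\ln(2)}}{1+x^{\ln(2)}}\right)^k=\ln\left(1+x^{\ln(2)}\right)$, the modified sum converges to $\frac{(x-1)}{x\ln(2)}\ln(1+x^{\ln(2)})$ rather than to $\ln(x)$ itself. Its ratio $\frac{x^{\ln(2)}}{1+x^{\ln(2)}}$ is smaller than $\frac{x-1}{x}$ whenever $x>1+x^{\ln(2)}$, which holds for large enough $x$, and this is where the faster convergence comes from.

```python
import math

LN2 = math.log(2)

def classic_series(x: float, n: int) -> float:
    """sum_{k=1}^n (1/k) ((x-1)/x)^k."""
    y = (x - 1.0) / x
    return sum(y ** k / k for k in range(1, n + 1))

def modified_series(x: float, n: int) -> float:
    """(x-1)/(x ln 2) * sum_{k=1}^n (1/k) (x^ln2 / (1 + x^ln2))^k."""
    w = x ** LN2 / (1.0 + x ** LN2)
    return (x - 1.0) / (x * LN2) * sum(w ** k / k for k in range(1, n + 1))

def modified_limit(x: float) -> float:
    """n -> infinity limit of modified_series: (x-1) ln(1 + x^ln2) / (x ln 2)."""
    return (x - 1.0) / (x * LN2) * math.log1p(x ** LN2)

for x in (10.0, 100.0, 1000.0):
    exact = math.log(x)
    for n in (5, 20, 100):
        print(f"x = {x}, n = {n}: classic err = {classic_series(x, n) - exact:+.4f}, "
              f"modified err = {modified_series(x, n) - exact:+.4f}")
    print(f"x = {x}: limit of modified sum minus ln(x) = {modified_limit(x) - exact:+.4f}")
```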