I was learning confidence intervals progressing slowly with few hiccups 1, 2, and wrapping up while found few more issues, one of which I have detailed here. Requesting your kind help.

I created a list containing 1s and 0s, 1 representing yellow ball and 0 otherwise, with yellow ball proportion being 60%. Then I randomly sample from this population (sample size n), take mean value and plot. I do this N no of times, so eventually I get a sampling distribution which is normal.Then I proceed to calculate Confidence Interval (CI) and see how it fares (CI calculated for 95%, so checking if CI has pop.mean 95% of the time)

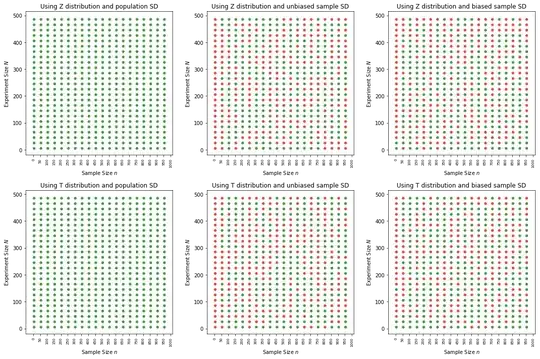

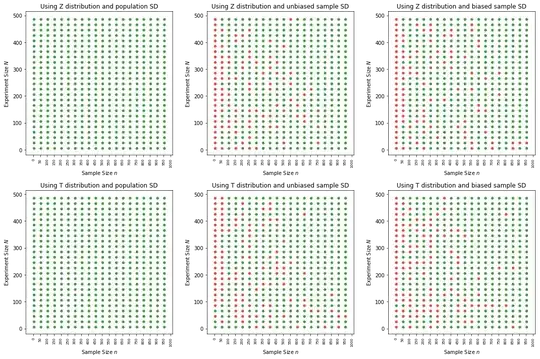

Now I have multiple variables to play with. Population size T, No of experiments N, sample size n, and every time I sample, should I replace the sample back in to population or not. And then while calculating CI, to try Z or t, population or biased or unbiased SD etc. This is my premise for my first test.

Environment:

1. Population size T, fixed

2. Sample size n, varied

3. Experiment size N, varied

Applied methods:

1. Z distribution and population SD

2. Z distribution and unbiased sample SD

3. Z distribution and biased sample SD

4. T distribution and population SD

5. T distribution and unbiased sample SD

6. T distribution and biased sample SD

With above setup, I conducted test in 2 phases.

Green dots indicate, 95% or more of the set of CIs for the respective particular N, n combination has population mean, red otherwise.

Phase 1:Sampling with replacement

Every time I sample I replace.

Phase 2:Sampling without replacement

Every time I sample, I do not replace. I got below result.

As can be seen above, strangely, sampling with replacement, does not give good CI performance. We get mixed results. But sampling without replacement performs much better as sample size increases. Why is this?

Intuitively, I thought replacement would always give better results in any case (samples become independent irrespective of sample size). Is there any underlying theory am missing that explains the weird behaviour I got or the output I got is wrong?

Please find the MWE here

Dependent file: ci_helpers.py

Update: 22nd Sept 2018 We were looking at the problem with a wrong perspective. We were wondering why Sampling with replacement was doing poorly compared to Sampling without replacement. Instead if we look at why Sampling without replacement does a far better job, we get a key (thanks to siong-thye-goh) that, in our code, for Sampling without replacement, we did not use FPC (Finite Population Correction) which was thus resulting in bigger variance, so wider CIs. Once FPC introduced, both Sampling with replacement and without are behaving poorly(?!)

I am closing this and creating another question as the narrative is now changed: Why we get such a poor performance when we do not know population mean, or is that the poor performance the result of using sample SDs in each CIs?

Special thanks to Quinto whose answers were giving deeper insights in to the problem, and is still with me investigating the new issue at hand.