BUGGY proof of $n=1$ special case

There is a bug in the proof, which I will try to fix when I have more time...

Disclaimer: I am not an expert (by a long shot), so you're most welcome to point out errors, loopholes, clarifications, etc. Thanks!

First, some simple "pre-processing" of the antecedents:

For clarity I will write $B(l) = B(\bar{x}, l) = [\bar{x} - l, \bar{x} + l]$, i.e. the center of the neighborhood will always (implicitly) be $\bar{x}$.

Assume $f$ is differentiable. Since $\bar{x}$ is a local minimum, $f'(\bar{x}) = 0$.

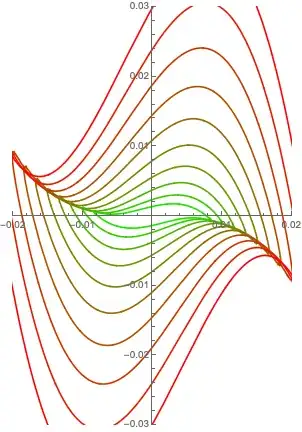

Let $\epsilon > 0$ denote the upper bound for a "sufficiently small norm", i.e. $\forall v \in (-\epsilon, \epsilon)$ (equivalently, $|v| < \epsilon$), $f_v(x) = f(x) + vx$ has a unique local minimum in $B(r)$.

Lemma 1: there exists a neighborhood $B(a) = [\bar{x} - a, \bar{x} + a]$ for some $a > 0$ s.t. $\forall x \in B(a)$ with $x \ne \bar{x}$, $| { f(x) - f(\bar{x}) \over x - \bar{x}} | < \epsilon$. Note that ${ f(x) - f(\bar{x}) \over x - \bar{x}}$ is the slope of the line from $(x, f(x))$ to $(\bar{x}, f(\bar{x}))$. So this claim says there is a neighborhood where the absolute slope (from $\bar{x}$ to any other point) is strictly less than $\epsilon$.

Proof of Lemma 1: (I think) this follows directly from the definition of the derivative $f'(\bar{x}) = \lim_{x \rightarrow \bar{x}} { f(x) - f(\bar{x}) \over x - \bar{x}}$. Specifically, for any positive constant (here we choose $\epsilon$) there must be a neighborhood $B(a)$ s.t. for every $x \ne \bar{x}$ in $B(a)$ the fraction ${ f(x) - f(\bar{x}) \over x - \bar{x}}$ stays within $(f'(\bar{x}) -\epsilon, f'(\bar{x}) + \epsilon)$, which equals $(-\epsilon, \epsilon)$ because $f'(\bar{x}) = 0$. $\square$
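As a sanity check on Lemma 1, here is a minimal numerical sketch (not part of the proof). It assumes a sample function $f(x) = x^2$ with $\bar{x} = 0$ and $\epsilon = 0.1$. The helper names `max_abs_slope` and `find_radius` are made up for this illustration, and a grid check can only suggest, not prove, that the bound holds.

```python
def max_abs_slope(f, xbar, a, n=1000):
    """Largest |(f(x) - f(xbar)) / (x - xbar)| over a grid on B(a) minus {xbar}."""
    worst = 0.0
    for k in range(-n, n + 1):
        if k == 0:
            continue  # the difference quotient is undefined at x = xbar
        x = xbar + a * k / n
        worst = max(worst, abs((f(x) - f(xbar)) / (x - xbar)))
    return worst


def find_radius(f, xbar, eps, a=1.0, max_halvings=60):
    """Halve a until all sampled slopes are below eps (grid check only)."""
    for _ in range(max_halvings):
        if max_abs_slope(f, xbar, a) < eps:
            return a
        a /= 2.0
    raise ValueError("no radius found; is f'(xbar) really 0?")


# Assumed example: f(x) = x^2, xbar = 0, eps = 0.1.
a = find_radius(lambda x: x * x, 0.0, 0.1)
```

For $f(x) = x^2$ the slope from $\bar{x} = 0$ to $x$ is just $x$, so any $a < \epsilon$ works; the halving search simply stops at the first power of $1/2$ below $\epsilon$.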

At this point, we are dealing with two neighborhoods: the given $B(r)$, where the "unique local minimum" conditions apply, and the new $B(a)$, where the absolute slopes are $< \epsilon$. Let $b = \min(r, a)$, so that $B(b)$ is the smaller of the two neighborhoods $B(r)$ and $B(a)$.

Main Result: $f$ is locally convex in $B(b)$.

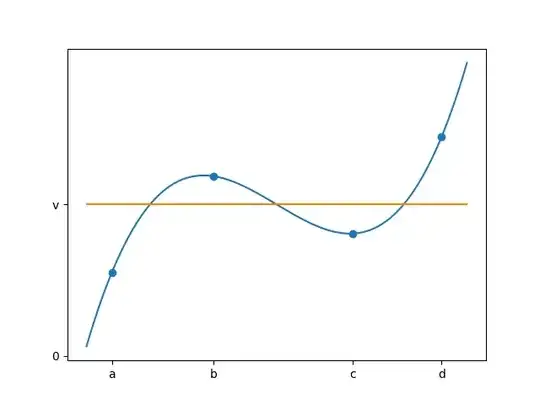

Main Proof: Assume, for contradiction, that $f$ is not locally convex in $B(b)$. This means $\exists c, d$ s.t. $\bar{x} - b \le c < \bar{x} < d \le \bar{x} + b$ and the line segment $L$ connecting $(c, f(c))$ and $(d, f(d))$ does not lie entirely on or above the graph of $f$. [Bug alert: it is not OK to assume $c,d$ lie on different sides of $\bar{x}$.] Let the equation of the line segment $L$ be $L(x) = mx + q$ where $m$ is the slope and $q$ the intercept.

Lemma 2: $|m| = | {f(d) - f(c) \over d - c} | < \epsilon$.

Proof of Lemma 2: Since $\bar{x}$ is a unique local minimum in $B(r)$, it is also a unique local minimum in $B(b)$. If $f(d) = f(c)$ then $m = 0$ and there is nothing to prove. Otherwise, without loss of generality, assume $f(d) > f(c)$. Then:

- $|f(d) - f(c)| < |f(d) - f(\bar{x})|$ since $f(d) > f(c) > f(\bar{x})$, and,
- $|d - c| > |d - \bar{x}|$ since $d > \bar{x} > c$,
- therefore: ${ |f(d) - f(c)| \over |d - c| } < { |f(d) - f(\bar{x})| \over |d - \bar{x}| } < \epsilon$ since $d \in B(b) \subset B(a)$.

For the case of $f(c) > f(d)$, simply swap $c$ and $d$ in all 3 bullets above. $\square$
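A quick numerical sanity check of Lemma 2 (again only a sketch, under the same assumption $c < \bar{x} < d$ that the proof makes). The sample function $f(x) = x^2$, the values $\epsilon = 0.1$ and $b = 0.0625$, and the helper name `max_chord_slope` are my own choices for illustration.

```python
def max_chord_slope(f, xbar, b, n=200):
    """Largest |f(d) - f(c)| / (d - c) over grid points c < xbar < d in B(b)."""
    worst = 0.0
    for i in range(1, n + 1):
        c = xbar - b * i / n          # c strictly to the left of xbar
        for j in range(1, n + 1):
            d = xbar + b * j / n      # d strictly to the right of xbar
            worst = max(worst, abs(f(d) - f(c)) / (d - c))
    return worst


# Assumed example: f(x) = x^2, xbar = 0, eps = 0.1, b = 0.0625.
eps = 0.1
b = 0.0625
worst = max_chord_slope(lambda x: x * x, 0.0, b)
```

For this $f$ the chord slope is $d + c$, so with $c < 0 < d$ its absolute value is always smaller than $\max(|c|, |d|) \le b$, which matches what the lemma claims.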

Continuing the main proof, we apply the perturbation antecedent condition with $v = -m$. Note that Lemma 2 proves that $|v| = |m| < \epsilon$, i.e. this chosen $v$ is of sufficiently small norm. Therefore, $f_v(x) = f(x) - mx$ has a unique local minimum in $B(r)$, which means it has at most one local minimum in $B(b) \subset B(r)$.

Recall that $L(x)$ does not lie entirely above $f(x)$ in the interval $[c,d]$, i.e. $\exists e \in (c,d)$ s.t. $f(e) > L(e)$. Consider $g(x) = f(x) - L(x)$. We have $g(c) = g(d) = 0$ and $g(e) > 0$. Since $f, L$ are continuous, so is $g$. Now, by the extreme value theorem:

$g$ has a minimum in $[c,e]$, and since $g(e) > g(c)$, the minimum is actually in $[c,e)$.

Similarly, $g$ has a minimum in $[e,d]$, and since $g(e) > g(d)$, the minimum is actually in $(e,d]$.

Therefore, $g$ has two minima in $[c,d]$. Since $f_v$ and $g$ only differ by a constant $q$, this means $f_v$ also has two minima in $[c,d] \subset B(b) \subset B(r)$. This is the desired contradiction.
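To make the last step concrete, here is a small numerical sketch of the construction $g = f - L$. The double-well function, the points $c, d, e$ and the helper names `chord` and `argmin_on_grid` are my own choices for illustration, not from the question, and the grid search only approximates the minimizers.

```python
def chord(f, c, d):
    """Slope m and intercept q of the line through (c, f(c)) and (d, f(d))."""
    m = (f(d) - f(c)) / (d - c)
    q = f(c) - m * c
    return m, q


def argmin_on_grid(h, lo, hi, n=2000):
    """Grid point in [lo, hi] where h is smallest (endpoints included)."""
    xs = [lo + (hi - lo) * k / n for k in range(n + 1)]
    return min(xs, key=h)


# Hypothetical non-convex example with a local minimum at xbar = -1.
f = lambda x: (x * x - 1.0) ** 2
c, d, e = -1.2, 1.3, 0.0              # c < xbar < d, and e lies in (c, d)

m, q = chord(f, c, d)
g = lambda x: f(x) - (m * x + q)      # g = f - L, so g(c) = g(d) = 0
f_v = lambda x: f(x) - m * x          # the perturbation with v = -m

left = argmin_on_grid(g, c, e)        # approximate minimizer of g on [c, e]
right = argmin_on_grid(g, e, d)       # approximate minimizer of g on [e, d]
```

In this example $g(e) > 0$ and both grid minimizers are strictly inside $(c,e)$ and $(e,d)$. Since $g = f_v - q$ with $v = -m$, they are also minimizers of $f_v$ on those subintervals.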

Author's note: Again, I am no expert, so suggestions, comments, corrections most welcome!