I'm just learning about separable differential equations. I understand what a derivative is. One notation for the derivative is $\frac{dy}{dx}$, which, misleadingly, is not a fraction. Since it's not a fraction, why are we "separating" differential equations by treating it as if it were one? For example:

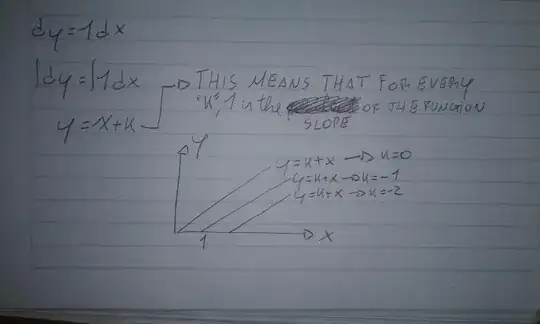

We have the following differential equation: $$\frac{dy}{dx} = xy.$$

Then we separate the... whatever they are: $$\frac{dy}{y} = x\cdot dx.$$

What do $dy$ and $dx$ even represent when they are detached from each other? How is this valid math?

Then we integrate both sides of the equation. Even though we are integrating one side with $dy$ and the other side with $dx$, the equality is somehow magically not broken.
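For reference, this is what the integration step produces when carried through for the separated equation $\frac{dy}{y} = x\,dx$. It is a sketch assuming $y \neq 0$ (so that dividing by $y$ is allowed), with $C$ an arbitrary constant:

$$
\begin{aligned}
\int \frac{1}{y}\,dy &= \int x\,dx \\
\ln|y| &= \frac{x^2}{2} + C \\
y &= A\,e^{x^2/2}, \qquad A = \pm e^{C}.
\end{aligned}
$$

The constants of integration from the two sides are merged into the single $C$. The constant solution $y = 0$, which was excluded by dividing by $y$, corresponds to $A = 0$.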

Also, somehow integrating $dy$ yields $y$, but integrating $dx$ doesn't yield $x$.
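Whatever the justification turns out to be, the answer the procedure gives for $\frac{dy}{dx} = xy$, namely $y = A\,e^{x^2/2}$, can at least be checked numerically without ever detaching $dy$ from $dx$. The sketch below assumes an arbitrary constant `A = 2.0` and a handful of arbitrary sample points (both hypothetical choices). It approximates the derivative with a central-difference quotient and compares it with the right-hand side $xy$:

```python
import math

# Hypothetical constant of integration; any real value should work.
A = 2.0


def y(x: float) -> float:
    """Candidate solution y = A * exp(x**2 / 2) produced by separating variables."""
    return A * math.exp(x ** 2 / 2)


def dydx(x: float, h: float = 1e-5) -> float:
    """Central-difference quotient: a genuine fraction of small finite changes,
    used here as a numerical approximation of dy/dx."""
    return (y(x + h) - y(x - h)) / (2 * h)


# Arbitrary sample points at which to compare dy/dx with the right-hand side x*y.
points = [-1.5, -0.5, 0.0, 0.7, 1.2]
residuals = [abs(dydx(x) - x * y(x)) for x in points]

for x, r in zip(points, residuals):
    print(f"x = {x:5.2f}   |dy/dx - x*y| = {r:.2e}")

print("largest residual:", max(residuals))
```

The residuals come out tiny (limited only by the finite-difference approximation and rounding), which is consistent with $y = A\,e^{x^2/2}$ satisfying the original equation.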

So my question is: how do you make sense of $dy$ and $dx$ once they are separated from each other?