I hope this will clarify it.

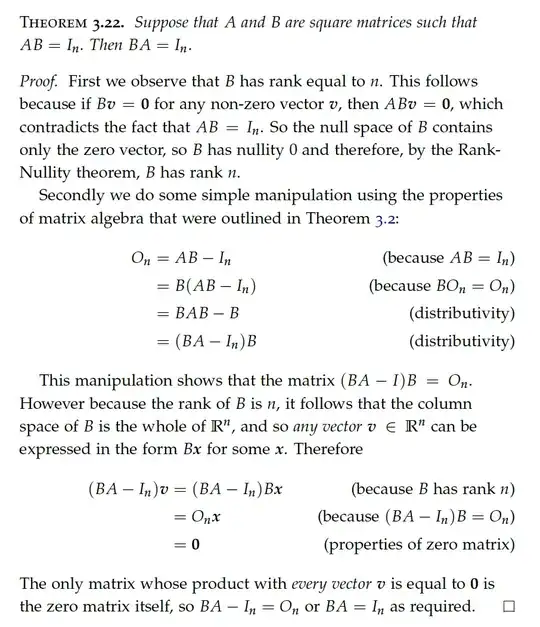

Let $A$ and $B$ be $n\times n$ matrices with $AB=I.$

(1). $Bv=Bw\implies v=w.$ This is because $Bv=Bw\implies B(v-w)=0\implies 0=A(B(v-w))=(AB)(v-w)=I(v-w)=v-w.$

(2). Let $\{v_1,...,v_n\}$ be a linearly independent set of vectors. Then $S=\{Bv_1,...,Bv_n\}$ is a linearly independent set of vectors. Indeed, if $a_1,...,a_n$ are scalars, not all $0,$ then $\sum_{j=1}^na_jv_j\ne 0,$ which implies (by (1)) that $B(\sum_{j=1}^na_jv_j)\ne B(0).$ That is, $\sum_{j=1}^n a_jBv_j\ne B(0)=0.$

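(3). Take $\{v_1,...,v_n\}$ in (2) to be a basis, where $n$ is the dimension of the space. Then $S$ consists of $n$ linearly independent vectors, so $S$ is also a basis, and every vector $v$ is of the form $v=\sum_{j=1}^na_jBv_j=B(\sum_{j=1}^na_jv_j)=B(x_v),$ where $x_v=\sum_{j=1}^na_jv_j.$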

(4). Finally, since for any vector $v$ there exists $x_v$ such that $v=Bx_v,$ we have, for every $v$, $$(BA-I)v=(BA-I)(Bx_v)=(BAB-B)x_v=(B(AB-I))x_v=(B\cdot O)x_v=O\cdot x_v=0.$$ So $(BA-I)v=0$ for all $v,$ so $BA-I=O.$

(5). We can also do step (4) as follows: Suppose, for contradiction, that $v\ne BAv$ for some $v.$ Since $v=Bx_v,$ this says $Bx_v\ne BA(Bx_v)=B(ABx_v)=B(Ix_v)=Bx_v,$ which is absurd.
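
To see steps (3) and (4) in a concrete case, here is a small worked example. The matrices below are only a convenient choice for illustration and are not taken from the question. Take $n=2$ and
$$A=\begin{pmatrix}1&-1\\0&1\end{pmatrix},\qquad B=\begin{pmatrix}1&1\\0&1\end{pmatrix},\qquad AB=\begin{pmatrix}1&0\\0&1\end{pmatrix}=I.$$
With the standard basis $v_1=(1,0)^T,$ $v_2=(0,1)^T$ we get $Bv_1=(1,0)^T$ and $Bv_2=(1,1)^T,$ which again form a basis. For an arbitrary $v=(a,b)^T$ we have $v=(a-b)Bv_1+bBv_2,$ so $x_v=(a-b,b)^T,$ and
$$BAv=B\begin{pmatrix}a-b\\b\end{pmatrix}=\begin{pmatrix}(a-b)+b\\b\end{pmatrix}=\begin{pmatrix}a\\b\end{pmatrix}=v.$$
Note that $Av=x_v$ here, which is what $v=Bx_v$ and $AB=I$ predict in general: $Av=ABx_v=x_v.$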

Remark. It is necessary to use the fact that we have a finite-dimensional vector space. In an infinite-dimensional vector space $V$ there are linear functions $A:V\to V$ and $B:V\to V$ with $ABv=v$ for all $v\in V$ but $BAv\ne v$ for some $v\in V.$
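
A standard example of this, sketched here for illustration: let $V$ be the space of all sequences $(x_1,x_2,x_3,...)$ of scalars, and define the left and right shifts
$$A(x_1,x_2,x_3,...)=(x_2,x_3,x_4,...),\qquad B(x_1,x_2,x_3,...)=(0,x_1,x_2,...).$$
Then $AB(x_1,x_2,...)=A(0,x_1,x_2,...)=(x_1,x_2,...),$ so $ABv=v$ for all $v\in V,$ but
$$BA(x_1,x_2,x_3,...)=B(x_2,x_3,...)=(0,x_2,x_3,...),$$
which differs from $(x_1,x_2,x_3,...)$ whenever $x_1\ne 0.$ Here $B$ is still one-to-one, as in (1), but not every vector is of the form $Bx,$ so step (3) is where the finite-dimensional argument breaks down.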