Here's a useful generalization of the limit definition of $e$ from the OP:

Given

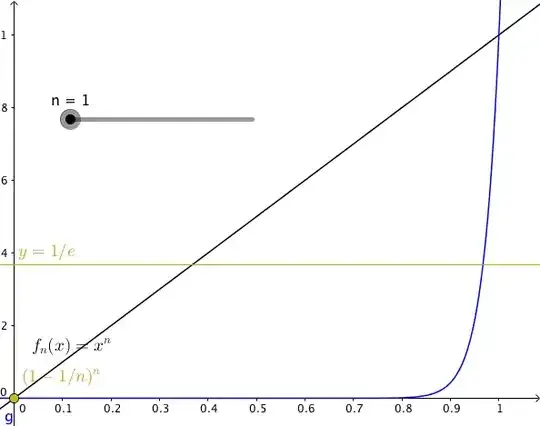

$$e = \lim_{n\to\infty}\left(1+\frac{1}{n}\right)^n$$
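A quick floating-point check of this definition (a Python sketch; `e_approx` is just an illustrative name). It's a sanity check, not a proof. For very large $n$, rounding in `1 + 1/n` eventually dominates: from about $n = 10^{16}$ on, `1 + 1/n` is exactly `1.0` in double precision.

```python
import math

def e_approx(n: int) -> float:
    """Return (1 + 1/n)**n, the n-th term of the sequence converging to e."""
    return (1 + 1 / n) ** n

for n in (10, 1_000, 100_000):
    # The error e - (1 + 1/n)**n behaves roughly like e / (2n) for large n.
    print(n, e_approx(n), math.e - e_approx(n))
```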

Raise both sides to the power of $x$ (this is allowed because $t \mapsto t^x$ is continuous for $t > 0$):

$$e^x = \lim_{n\to\infty}\left(1+\frac{1}{n}\right)^{nx}$$

This is trivially true when $x = 0$, as both sides evaluate to $1$.
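A numerical sketch of the raised form for a few arbitrary sample exponents, including $x = 0$, where `(1 + 1/n)**0.0` is exactly `1.0` for every $n$. The helper name `raised` and the choice $n = 10^6$ are illustrative assumptions.

```python
import math

def raised(n: int, x: float) -> float:
    """Return (1 + 1/n)**(n*x), the sequence for e raised to the power x."""
    return (1 + 1 / n) ** (n * x)

n = 10**6
for x in (0.0, 0.5, 2.0, -1.0):
    # For x = 0 the exponent is 0.0, so the value is exactly 1.0 for every n.
    print(x, raised(n, x), math.exp(x))
```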

Assume $x \ne 0$ and let $m = nx$, i.e., $n = \frac{m}{x}$, so that $\frac{1}{n} = \frac{x}{m}$.

As $n\to\infty$, $m\to\infty$, so

$$e^x = \lim_{m\to\infty}\left(1+\frac{x}{m}\right)^{m}$$
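The substitution doesn't change the quantity, only how it's written. The sketch below evaluates both forms side by side for a positive $x$ (an arbitrary $x = 3$), where $m = nx$ really does grow with $n$. The helper names `before_sub` and `after_sub` are illustrative.

```python
import math

def before_sub(n: float, x: float) -> float:
    """(1 + 1/n)**(n*x): the form before the substitution."""
    return (1 + 1 / n) ** (n * x)

def after_sub(m: float, x: float) -> float:
    """(1 + x/m)**m: the form after substituting m = n*x."""
    return (1 + x / m) ** m

x = 3.0  # arbitrary positive value; for x > 0, m = n*x grows with n
for n in (10.0, 1e3, 1e5):
    m = n * x
    # Both columns should agree up to rounding; both approach math.exp(x).
    print(n, before_sub(n, x), after_sub(m, x), math.exp(x))
```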

[Note the similarity between this and the first limit in Marc van Leeuwen's answer].

In particular, for $x = -1$,

$$e^{-1} = \lim_{m\to\infty}\left(1+\frac{-1}{m}\right)^{m}$$

or

$$e^{-1} = \lim_{m\to\infty}\left(1-\frac{1}{m}\right)^{m}$$
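A floating-point sketch of the $x = -1$ case (`inv_e_approx` is an illustrative name). The terms approach $1/e$ from below, with the error shrinking roughly like $\frac{1}{2em}$.

```python
import math

def inv_e_approx(m: int) -> float:
    """Return (1 - 1/m)**m, which should approach 1/e as m grows."""
    return (1 - 1 / m) ** m

for m in (10, 1_000, 100_000):
    print(m, inv_e_approx(m), 1 / math.e)
```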

As mathmandan notes in the comments, my derivation is flawed when $x < 0$: in that case $n\to\infty$ implies $m\to -\infty$, not $m\to\infty$. Oops!
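A small numerical illustration of the problem, taking $x = -1$ (`substituted` is an illustrative helper). Here $m = nx = -n$ is negative, so $m\to-\infty$. The values $(1 + x/m)^m$ do still approach $e^{-1}$, but what they illustrate is a limit as $m\to-\infty$, not the $m\to\infty$ limit written in the derivation, so the substitution alone doesn't establish the result.

```python
import math

def substituted(n: float, x: float) -> tuple:
    """Return (m, (1 + x/m)**m) for the substitution m = n*x used in the derivation."""
    m = n * x
    return m, (1 + x / m) ** m

x = -1.0
for n in (10.0, 1e3, 1e5):
    m, value = substituted(n, x)
    # m is negative here, so n -> +inf drives m -> -inf rather than +inf.
    print(n, m, value, math.exp(x))
```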

I'll try to justify my result for negative $x$ without relying on the fact that $e^x$ is an entire function and that there is only a single infinity in the (extended) complex plane.

For any finite $u, v \ge 0$, we have

$$e^u = \lim_{n\to\infty}\left(1+\frac{u}{n}\right)^{n}$$

and

$$e^v = \lim_{n\to\infty}\left(1+\frac{v}{n}\right)^{n}$$

Therefore, since $e^v \ne 0$ (so the limit of the quotient is the quotient of the limits),

$$e^{u-v} = \lim_{n\to\infty}\left(\frac{1+\frac{u}{n}}{1+\frac{v}{n}}\right)^{n}$$
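A quick floating-point sanity check of the quotient limit, with arbitrary sample values $u = 0.5$ and $v = 2$ (so $u - v = -1.5$, the negative case of interest). `quotient_term` is an illustrative name, and this is numerical evidence rather than a proof.

```python
import math

def quotient_term(n: int, u: float, v: float) -> float:
    """Return ((1 + u/n) / (1 + v/n))**n, whose limit should be e**(u - v)."""
    return ((1 + u / n) / (1 + v / n)) ** n

u, v = 0.5, 2.0  # arbitrary non-negative sample values
for n in (10, 1_000, 100_000):
    print(n, quotient_term(n, u, v), math.exp(u - v))
```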

Let $m = n + v$, so that $n = m - v$. For any finite $v$, $m\to\infty$ as $n\to\infty$.

$$\begin{align}
\frac{1+\frac{u}{n}}{1+\frac{v}{n}}
& = \frac{n + u}{n + v}\\
& = \frac{m + u - v}{m}\\
& = 1 + \frac{u - v}{m}
\end{align}$$
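Since this algebra is an exact identity (for $n > 0$, $v \ge 0$), it can be checked in exact rational arithmetic rather than floating point. A small sketch using Python's standard `fractions` module; the sample points are arbitrary and `before`/`after` are illustrative names.

```python
from fractions import Fraction

def before(n, u, v):
    """(1 + u/n) / (1 + v/n), the base of the power before substitution."""
    return (1 + u / n) / (1 + v / n)

def after(n, u, v):
    """1 + (u - v)/m with m = n + v, the rewritten base."""
    m = n + v
    return 1 + (u - v) / m

# A few arbitrary sample points (n > 0, u, v >= 0), in exact rational arithmetic.
samples = [
    (Fraction(7), Fraction(1, 2), Fraction(3)),
    (Fraction(100), Fraction(5), Fraction(0)),
    (Fraction(13, 3), Fraction(2), Fraction(9, 4)),
]
for n, u, v in samples:
    assert before(n, u, v) == after(n, u, v)
print("identity holds at all sample points")
```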

Thus

$$\begin{align}
e^{u-v} & = \lim_{n\to\infty}\left(1+\frac{u - v}{m}\right)^{n}\\
& = \lim_{m\to\infty}\left(1+\frac{u - v}{m}\right)^{m-v}\\
& = \lim_{m\to\infty}\left(1+\frac{u - v}{m}\right)^m \lim_{m\to\infty}\left(1+\frac{u - v}{m}\right)^{-v}\\
& = \lim_{m\to\infty}\left(1+\frac{u - v}{m}\right)^m
\end{align}$$

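since the second factor is a fixed power $-v$ of a base that tends to $1$:

$$\lim_{m\to\infty}\left(1+\frac{u - v}{m}\right)^{-v} = 1$$

Because this limit exists and is nonzero, and the limit on the second line exists (it equals $e^{u-v}$), the first factor converges as well, which justifies splitting the limit in the third line.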
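A numerical sketch of the exponent shift and the split, with arbitrary sample values $u = 0.5$, $v = 2$ (`pieces` is an illustrative name). For each $m$ it computes $\left(1+\frac{u-v}{m}\right)^{m-v}$, $\left(1+\frac{u-v}{m}\right)^{m}$ and $\left(1+\frac{u-v}{m}\right)^{-v}$, so you can see that the first equals the product of the other two and that the last tends to $1$.

```python
import math

def pieces(m: float, u: float, v: float) -> tuple:
    """Split (1 + (u-v)/m)**(m - v) into (1 + (u-v)/m)**m times (1 + (u-v)/m)**(-v)."""
    base = 1 + (u - v) / m
    shifted = base ** (m - v)   # the exponent after writing n = m - v
    plain = base ** m           # the form in the final result
    correction = base ** (-v)   # the factor whose limit is 1
    return shifted, plain, correction

u, v = 0.5, 2.0  # arbitrary non-negative sample values
for m in (10.0, 1e3, 1e5):
    shifted, plain, correction = pieces(m, u, v)
    # shifted == plain * correction up to rounding; correction -> 1 as m grows.
    print(m, shifted, plain, correction, math.exp(u - v))
```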

In other words,

$$e^{u-v} = \lim_{m\to\infty}\left(1+\frac{u - v}{m}\right)^m$$

is valid for any finite $u, v \ge 0$. Since any finite $x$ can be written as $u - v$ with $u, v \ge 0$ (for example, $u = \max(x, 0)$ and $v = \max(-x, 0)$), we have shown that

$$e^x = \lim_{n\to\infty}\left(1+\frac{x}{n}\right)^{n}$$

is valid for any finite $x$, so

$$e^{-x} = \lim_{n\to\infty}\left(1+\frac{-x}{n}\right)^{n}$$

And hence

$$e^{-x} = \lim_{n\to\infty}\left(1-\frac{x}{n}\right)^{n}$$
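A closing numerical sketch (`split` and `neg_exp_approx` are illustrative names). It writes each sample $x$ as $u - v$ using the positive and negative parts $u = \max(x, 0)$, $v = \max(-x, 0)$, which is one convenient choice among many, and then compares $(1 - x/n)^n$ at $n = 10^6$ against $e^{-x}$ for values of both signs. It's evidence, not proof.

```python
import math

def split(x: float) -> tuple:
    """Write x = u - v with u, v >= 0 (the positive and negative parts of x)."""
    return max(0.0, x), max(0.0, -x)

def neg_exp_approx(n: int, x: float) -> float:
    """Return (1 - x/n)**n, which should approach e**(-x) as n grows."""
    return (1 - x / n) ** n

n = 10**6
for x in (1.0, -2.0, 3.5, 0.0):  # arbitrary sample values of both signs
    u, v = split(x)
    assert u >= 0 and v >= 0 and u - v == x
    print(x, (u, v), neg_exp_approx(n, x), math.exp(-x))
```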