@Claude Leibovici yet again makes a sound argument with solid exposition by pointing out that linear regression on the logarithmically transformed data does not provide the true least squares solution. He then suggests a Newton-Raphson approach to find the least squares parameters. Another perspective follows, which uses least squares to reduce the dimension of the problem, along with a worked example as demonstration. The difference between the solution of the original problem and the solution after the logarithmic transform is highlighted.

Original problem

A logarithmic transformation creates a problem with a different solution, and should be avoided. The original trial function is

$$

y(x) = a b^{x}

$$

The input data are the set of points $(x_{k}, y_{k})_{k=1}^{m}$. By definition, the method of least squares minimizes the sum of the squares of the residual errors:

$$

\left[ \begin{array}{c} a \\ b \end{array} \right]_{LS} = \left\{ \left[ \begin{array}{c} a \\ b\end{array} \right] \in \mathbb{R}^{2} \bigg | \sum_{k=1}^{m} \left(y_{k} - a b^{x_{k}}\right)^2 \text{ is minimized } \right\}

$$
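To make the criterion concrete, here is a minimal Python sketch of the merit function $r^{2}(a, b)$, the quantity being minimized. The function name `merit` and the noise-free test points (generated from the hypothetical values $a = 2$, $b = 0.5$) are illustrative assumptions, not part of the problem.

```python
def merit(a, b, xs, ys):
    """Merit function r^2(a, b): sum of squared residuals for y = a * b**x."""
    return sum((y - a * b ** x) ** 2 for x, y in zip(xs, ys))


# Hypothetical noise-free data generated from a = 2, b = 0.5.
xs = [0, 1, 2, 3, 4]
ys = [2 * 0.5 ** x for x in xs]

print(merit(2.0, 0.5, xs, ys))  # exact parameters: 0.0
print(merit(2.0, 0.6, xs, ys))  # any other (a, b) gives a positive value
```

With noise-free data the minimum is zero at the generating parameters; with noisy data the minimum is positive, and its location is what must be found.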

As long as the data contain noise, the transformed problem will in general have a different solution.

Logarithmic transform

A logarithmic transformation yields the trial function $\ln y = \ln a + x \ln b.$ The least squares solution to this problem is

$$

\left[ \begin{array}{c} a \\ b \end{array} \right]_{T} = \left\{ \left[ \begin{array}{c} a \\ b\end{array} \right] \in \mathbb{R}^{2} \bigg | \sum_{k=1}^{m} \left(\ln y_{k} - \ln a -x_{k} \ln b \right)^2 \text{ is minimized } \right\}

$$

The new problem has a distinct solution.
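Because the transformed trial function is linear in $\ln a$ and $\ln b$, its least squares solution has a closed form: ordinary linear regression of $\ln y$ on $x$. The following is a minimal Python sketch, assuming every $y_{k} > 0$ (required by the logarithm); `fit_log_linear` is a hypothetical name. Applied to the Bevington decay data from the example, it should reproduce $[a, b]_{T}$.

```python
import math


def fit_log_linear(xs, ys):
    """Least squares fit of ln y = ln a + x ln b; returns (a, b).

    Ordinary linear regression of ln y on x. Requires every y > 0.
    """
    m = len(xs)
    ls = [math.log(y) for y in ys]
    x_mean = sum(xs) / m
    l_mean = sum(ls) / m
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxl = sum((x - x_mean) * (l - l_mean) for x, l in zip(xs, ls))
    ln_b = sxl / sxx               # slope estimates ln b
    ln_a = l_mean - ln_b * x_mean  # intercept estimates ln a
    return math.exp(ln_a), math.exp(ln_b)


# Radioactive-decay data (Bevington, table 6-2) from the example.
times = [0, 15, 30, 45, 60, 75, 90, 105, 120, 135]
counts = [106, 80, 98, 75, 74, 73, 49, 38, 37, 22]

a_t, b_t = fit_log_linear(times, counts)
print(a_t, b_t)  # approximately 118.502 and 0.98972
```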

The logarithm doesn't weight residual errors uniformly, so the least squares fit is defeated in its attempt to balance errors. The preferred property is that of a linear (strictly, affine) transform, such as converting centigrade to Fahrenheit: a difference of 3 degrees transforms to the same value whether the thermometer reads 1 or 100 degrees. Certainly $\ln 4 - \ln 1 \ne \ln 103 - \ln 100$.
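The arithmetic behind this comparison can be checked directly. In this small Python sketch, `c_to_f` is a hypothetical helper for the conversion $F = \tfrac{9}{5}C + 32$.

```python
import math


def c_to_f(c):
    """Convert centigrade to Fahrenheit: F = 9/5 * C + 32."""
    return 9.0 / 5.0 * c + 32.0


# A 3-degree difference maps to the same Fahrenheit difference (about 5.4)
# whether the reading is near 1 or near 100 degrees.
low_f = c_to_f(4) - c_to_f(1)
high_f = c_to_f(103) - c_to_f(100)

# Under the logarithm the same 3-unit difference shrinks as the values grow.
low_ln = math.log(4) - math.log(1)       # about 1.386
high_ln = math.log(103) - math.log(100)  # about 0.0296

print(low_f, high_f)
print(low_ln, high_ln)
```

The Fahrenheit differences agree, while the logarithmic differences differ by a factor of about 47, so after the transform a residual of fixed size counts far less at large $y$ than at small $y$.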

Example

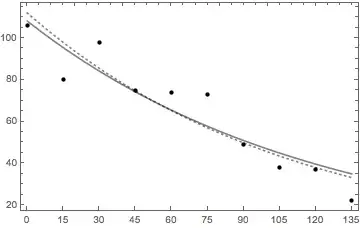

To provide an example and a sense of scale, consider a problem from *Data Reduction and Error Analysis for the Physical Sciences*, 1e, by Philip Bevington, table 6-2, representing measurements of radioactive decay:

$$

\begin{array}{rr}

\text{time} & \text{count}\\\hline

0 & 106 \\

15 & 80 \\

30 & 98 \\

45 & 75 \\

60 & 74 \\

75 & 73 \\

90 & 49 \\

105 & 38 \\

120 & 37 \\

135 & 22 \\

\end{array}

$$

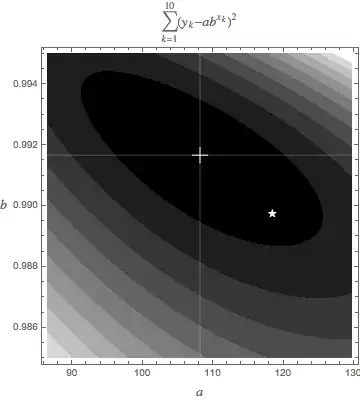

The least squares solution is $\left[ \begin{array}{c} a \\ b \end{array} \right]_{LS}=\left[ \begin{array}{c} 108.132 \\ 0.99167 \end{array} \right]$, with $r^{2}\left( \left[ \begin{array}{c} a \\ b \end{array} \right]_{LS} \right)=966$, the minimum value.

If the data are transformed logarithmically, the solution is $\left[ \begin{array}{c} a \\ b \end{array} \right]_{T} = \left[ \begin{array}{c} 118.502 \\ 0.9897197 \end{array} \right]$. The value of $r^{2}$ at this point is 1256.
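Both values of $r^{2}$ can be checked by evaluating the original merit function at the two reported parameter pairs. A minimal Python sketch using the table above; the helper name `merit` is hypothetical.

```python
def merit(a, b, xs, ys):
    """r^2(a, b) for the original trial function y = a * b**x."""
    return sum((y - a * b ** x) ** 2 for x, y in zip(xs, ys))


# Bevington, table 6-2: time and count.
times = [0, 15, 30, 45, 60, 75, 90, 105, 120, 135]
counts = [106, 80, 98, 75, 74, 73, 49, 38, 37, 22]

r2_ls = merit(108.132, 0.99167, times, counts)   # least squares solution
r2_t = merit(118.502, 0.9897197, times, counts)  # log-transformed solution
print(r2_ls, r2_t)  # about 966 and 1256
```

The transformed solution leaves a noticeably larger sum of squared residuals when judged by the original criterion.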

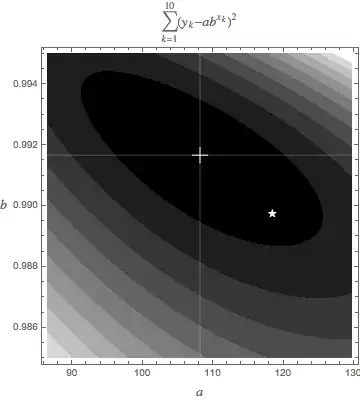

The plot below shows the merit function $r^{2}\left( \left[ \begin{array}{c} a \\ b \end{array} \right] \right)$ for the original trial function, with both solutions marked. The least squares solution is the central cross at the minimum; the solution of the logarithmically transformed problem is marked by a star.

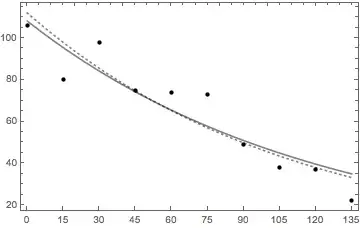

The following figure plots the two solutions against the data points (solid: original problem; dashed: transformed problem). The previous plot is much more useful for distinguishing between the solutions.

Back to least squares

There are many ways to find the minimum of this two-dimensional surface. Even better, least squares offers a path to reduce the two-parameter minimization to a one-parameter problem, which is easier to solve. Start with the minimization criterion for the linear parameter $a$:

$$

\frac{\partial}{\partial a} r^{2} = -2 \sum_{k=1}^{m} b^{x_{k}} \left( y_{k} - a b^{x_{k}}\right) = 0.

$$

Solving this relationship for $a$ expresses $a$ as a function of $b$, denoted $\hat{a}(b)$. This gives the best value of $a$ at each value of $b$:

$$

\hat{a}(b) = \frac{\sum_{k=1}^{m} y_{k}b^{x_{k}}} {\sum_{k=1}^{m} b^{2x_{k}}}

$$
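The closed form for $\hat{a}(b)$ is a one-line computation. Here is a minimal Python sketch (the name `a_hat` is hypothetical). Evaluated at the least squares value $b = 0.99167$ on the decay data, it should return approximately the reported $a = 108.132$, since the optimal $a$ satisfies the same normal equation.

```python
def a_hat(b, xs, ys):
    """Best linear parameter a for a fixed b: sum(y b^x) / sum(b^(2x))."""
    num = sum(y * b ** x for x, y in zip(xs, ys))
    den = sum(b ** (2 * x) for x in xs)
    return num / den


# Bevington, table 6-2: time and count.
times = [0, 15, 30, 45, 60, 75, 90, 105, 120, 135]
counts = [106, 80, 98, 75, 74, 73, 49, 38, 37, 22]

print(a_hat(0.99167, times, counts))  # close to 108.13
```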

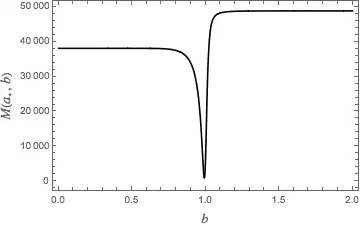

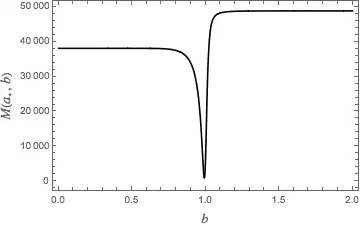

The merit function is reduced to that of a single variable

$$

r^{2}\left( \left[ \begin{array}{c} \hat{a}(b) \\ b \end{array} \right] \right) = r^{2}\left( b \right) = \sum_{k=1}^{m} \left( y_{k} - \frac{\sum_{j=1}^{m} y_{j}b^{x_{j}}} {\sum_{j=1}^{m} b^{2x_{j}}} b^{x_{k}}\right)^2,

$$

a function with a distinct minimum, as seen in the following plot.
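Because $r^{2}(b)$ depends on a single variable, a bracketing search is enough to locate its minimum. The sketch below uses golden-section search. This is a swapped-in choice, not necessarily the method behind the plots here, and it assumes $r^{2}(b)$ is unimodal on the hypothetical bracket $[0.95, 1.0]$. The helper names are likewise illustrative. It should recover the least squares solution reported in the example.

```python
import math


def a_hat(b, xs, ys):
    """Best a for fixed b: sum(y b^x) / sum(b^(2x))."""
    return (sum(y * b ** x for x, y in zip(xs, ys))
            / sum(b ** (2 * x) for x in xs))


def r2_reduced(b, xs, ys):
    """Reduced merit function r^2(b) = r^2(a_hat(b), b)."""
    a = a_hat(b, xs, ys)
    return sum((y - a * b ** x) ** 2 for x, y in zip(xs, ys))


def golden_section_min(f, lo, hi, tol=1e-10):
    """Minimize a unimodal function f on [lo, hi] by golden-section search."""
    inv_phi = (math.sqrt(5.0) - 1.0) / 2.0  # 1/phi, about 0.618
    c = hi - inv_phi * (hi - lo)
    d = lo + inv_phi * (hi - lo)
    fc, fd = f(c), f(d)
    while hi - lo > tol:
        if fc < fd:  # minimum lies in [lo, d]; old c becomes new d
            hi, d, fd = d, c, fc
            c = hi - inv_phi * (hi - lo)
            fc = f(c)
        else:        # minimum lies in [c, hi]; old d becomes new c
            lo, c, fc = c, d, fd
            d = lo + inv_phi * (hi - lo)
            fd = f(d)
    return (lo + hi) / 2.0


# Bevington, table 6-2: time and count.
times = [0, 15, 30, 45, 60, 75, 90, 105, 120, 135]
counts = [106, 80, 98, 75, 74, 73, 49, 38, 37, 22]

b_ls = golden_section_min(lambda b: r2_reduced(b, times, counts), 0.95, 1.0)
a_ls = a_hat(b_ls, times, counts)
print(a_ls, b_ls, r2_reduced(b_ls, times, counts))
```

The printed values should agree with the least squares solution quoted in the example. Any one-dimensional minimizer (Brent's method, or Newton-Raphson applied to $dr^{2}/db$) would serve equally well once the problem is reduced to one parameter.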