I have two uniform random variables: $X$ is uniform over $[\frac{1}{2},1]$ and $Y$ is uniform over $[0,1]$. I want to find the density function of $Z=X+Y$. There are many solutions on this site for the case where the two variables have the same range (density of sum of two uniform random variables $[0,1]$), but I don't understand how to translate them to the case where one variable has a different range. Here's what I tried:

$$f_Z(z)=\int_{-\infty}^\infty f_X(x)f_Y(z-x)\,dx.$$

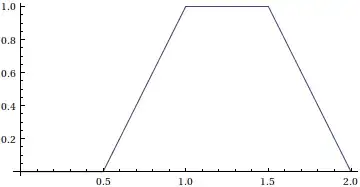

We will have $f_Z(z)=0$ for $z\lt \frac{1}{2}$, and also for $z\ge 2$. I considered two cases: (i) $\frac{1}{2}\lt z\le 1$ and (ii) $1\lt z\lt 2$.

(i) In order to have $f_Y(z-x)=1$, we need $z-x\ge 0$, that is, $x\le z$. So for (i), we will be integrating from $x=\frac{1}{2}$ to $x=z$: $$f_Z(z)=\int_{\frac{1}{2}}^z 2\cdot 1\,dx=2z-1$$ for $\frac{1}{2}\lt z\le 1$.

(ii) Suppose that $1\lt z\lt 2$. In order to have $f_Y(z-x)=1$, we need $z-x\le 1$, that is, $x\ge z-1$. So for (ii), we will be integrating from $x=z-1$ to $x=1$: $$f_Z(z)=\int_{z-1}^1 2\cdot 1\,dx=4-2z$$ for $1\lt z\lt 2$.

At $z=1$ these two cases give different results: (i) gives $2z-1=1$, while (ii) gives $4-2z\to 2$ as $z\to 1^+$. This doesn't make sense to me. Is there something wrong? How do I know which integration limits to use?
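
In case it helps anyone check an answer, here is a rough numerical sketch of the convolution integral above. It assumes NumPy is available and that $X$ and $Y$ are independent (which the convolution formula needs). The names `f_X`, `f_Y` and `f_Z_numeric` are my own, and the integral is approximated by a plain Riemann sum, so the values are only accurate to a few decimal places.

```python
import numpy as np

def f_X(x):
    # Density of X ~ Uniform[1/2, 1]: height 2 on the interval, 0 elsewhere.
    x = np.asarray(x, dtype=float)
    return np.where((x >= 0.5) & (x <= 1.0), 2.0, 0.0)

def f_Y(y):
    # Density of Y ~ Uniform[0, 1]: height 1 on the interval, 0 elsewhere.
    y = np.asarray(y, dtype=float)
    return np.where((y >= 0.0) & (y <= 1.0), 1.0, 0.0)

def f_Z_numeric(z, n=200_001):
    # Riemann-sum approximation of the convolution integral
    #   f_Z(z) = integral of f_X(x) * f_Y(z - x) dx.
    # The grid [0, 2] covers every x where f_X can be nonzero.
    x = np.linspace(0.0, 2.0, n)
    dx = x[1] - x[0]
    integrand = f_X(x) * f_Y(z - x)
    return float(np.sum(integrand) * dx)

# Print the numerical value next to my two candidate formulas.
for z in (0.75, 1.0, 1.25, 1.75):
    print(f"z={z}: numeric={f_Z_numeric(z):.4f}, "
          f"2z-1={2 * z - 1:.4f}, 4-2z={4 - 2 * z:.4f}")
```

Comparing the numeric column with $2z-1$ and $4-2z$ at each $z$ should show on which part of $[\frac{1}{2},2]$ each of my formulas actually matches the integral.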