I'm new to matrix calculus, and I'm confused about how to differentiate the transpose of a vector $x$ with respect to $x$ itself, i.e. $\dfrac{\partial (x^T)}{\partial x}$.

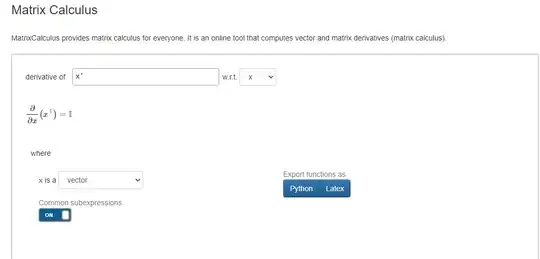

How would one calculate this derivative? According to the Matrix Calculus website (https://www.matrixcalculus.org/), the result is $I$ (the identity matrix), but I can't figure out why.

Suppose $x$ is an $n \times 1$ column vector, so $x^T$ is a $1 \times n$ row vector. Since $\dfrac{\partial}{\partial x} = \left[\dfrac{\partial}{\partial x_1}, \dfrac{\partial}{\partial x_2}, \ldots, \dfrac{\partial}{\partial x_n}\right]$, wouldn't $\dfrac{\partial (x^T)}{\partial x} = \dfrac{\partial}{\partial x} \otimes x^T$ be a $1 \times n^2$ row vector instead of the $n \times n$ identity matrix? I'm very confused, and I don't know which part of my understanding is incorrect.
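One way to probe this numerically is the rough sketch below. It assumes NumPy and assumes the convention (my assumption, not stated by the calculator) that the derivative collects every partial $\partial (x^T)_i / \partial x_j$. The helper `jacobian_fd` is hypothetical, written only for illustration with central finite differences. It builds both the $n \times n$ table of partials and the $1 \times n^2$ row ordered like $\frac{\partial}{\partial x} \otimes x^T$, so the two arrangements can be compared side by side.

```python
import numpy as np

def jacobian_fd(f, x, h=1e-6):
    """Hypothetical helper: central-difference Jacobian with J[i, j] ~ d f_i / d x_j."""
    x = np.asarray(x, dtype=float)
    fx = np.ravel(f(x))
    J = np.zeros((fx.size, x.size))
    for j in range(x.size):
        e = np.zeros_like(x)
        e.flat[j] = h  # perturb only the j-th component of x
        J[:, j] = (np.ravel(f(x + e)) - np.ravel(f(x - e))) / (2 * h)
    return J

n = 3
x = np.array([[1.0], [2.0], [3.0]])  # an n x 1 column vector (arbitrary values)

# The map x -> x^T keeps the same n numbers, arranged as a 1 x n row.
J = jacobian_fd(lambda v: v.T, x)
print(np.round(J, 6))  # n x n table of partials d(x^T)_i / d x_j

# The 1 x n^2 row from the question: entry i*n + j holds d(x^T)_j / d x_i,
# i.e. the ordering produced by (row of d/dx_i) kron (row x^T).
row = J.T.reshape(1, n * n)
print(row.shape)  # (1, 9)
print(np.round(row.reshape(n, n), 6))  # the same n^2 numbers, stacked as n x n
```

Under these assumptions, both layouts contain the same $n^2$ numbers $\partial x_i / \partial x_j$ (1 when $i = j$, 0 otherwise); they differ only in how those numbers are arranged.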

Thanks!

(P.S. I saw a similar question asked here: *Derivative of vector and vector transpose product*, but it doesn't seem to have been resolved.)