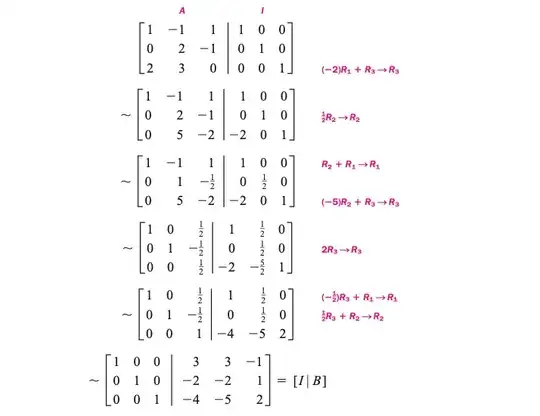

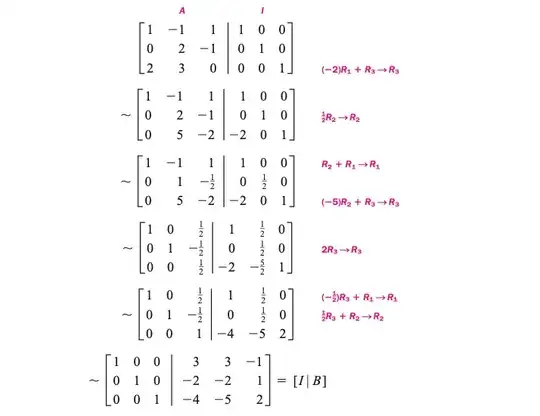

One carefully illustrated explanation can be found in Barnett et al.'s College Algebra. The example above, taken from the book, finds the inverse of a matrix $A$ by performing elementary row operations on the augmented matrix $[A|I]$.

Source: Barnett et al., College Algebra, McGraw-Hill, 9th edition, p. 475.
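The procedure is Gauss-Jordan elimination applied to $[A|I]$. Below is a minimal Python sketch. It assumes the matrix is given as a list of rows, and the function name `invert` is our own. It uses `fractions.Fraction` so that the halves appearing in the intermediate steps stay exact. It takes the first nonzero entry in each column as the pivot, which is adequate for exact arithmetic; a floating-point version would normally pick the largest entry instead.

```python
from fractions import Fraction


def invert(a):
    """Invert a square matrix by row-reducing [A | I] to [I | B].

    Returns B as a list of rows of Fractions, or None if A is singular.
    """
    n = len(a)
    # Augmented matrix [A | I] with exact rational entries.
    m = [[Fraction(x) for x in row] + [Fraction(int(i == j)) for j in range(n)]
         for i, row in enumerate(a)]
    for col in range(n):
        # Find a row at or below `col` with a nonzero entry in this column.
        pivot = next((r for r in range(col, n) if m[r][col] != 0), None)
        if pivot is None:
            # No pivot: A is singular, and reduction would end in a zero row.
            return None
        m[col], m[pivot] = m[pivot], m[col]        # interchange two rows
        p = m[col][col]
        m[col] = [x / p for x in m[col]]           # scale so the pivot is 1
        for r in range(n):                         # zero out the rest of the column
            if r != col and m[r][col] != 0:
                f = m[r][col]
                m[r] = [x - f * y for x, y in zip(m[r], m[col])]
    # The left half is now I, so the right half is the inverse.
    return [row[n:] for row in m]


# The matrix A from the book's example.
A = [[1, -1, 1], [0, 2, -1], [2, 3, 0]]
B = invert(A)
print([[int(x) for x in row] for row in B])  # [[3, 3, -1], [-2, -2, 1], [-4, -5, 2]]
```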

The resulting matrix $B$ is indeed the inverse of $A$. To see why, notice that in every augmented matrix of the sequence, the left half (the matrix to the left of the vertical bar) equals the right half multiplied by $A$. For the starting matrix $[A|I]$ this is simply $A = IA$. For a less trivial case, take the augmented matrix in the 4th row of the image above:

$$
\begin{bmatrix}
1 & 0 & \frac{1}{2}\\
0 & 1 & -\frac{1}{2}\\
0 & 0 & \frac{1}{2}
\end{bmatrix}
=
\begin{bmatrix}
1 & \frac{1}{2} & 0\\
0 & \frac{1}{2} & 0\\
-2 & -\frac{5}{2} & 1
\end{bmatrix}
\begin{bmatrix}
1 & -1 & 1\\
0 & 2 & -1\\
2 & 3 & 0
\end{bmatrix}
$$
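The relation is not special to the 4th row. The sketch below starts from $[A|I]$ and applies four row operations to both halves. These operations lead to the 4th augmented matrix of the example; they are our reconstruction, and the book's exact wording of each step may differ. After each operation, the sketch asserts that the left half equals the right half times $A$. The helpers `matmul`, `add_multiple` and `scale` are hypothetical names of our own.

```python
from fractions import Fraction as F


def matmul(x, y):
    """Product of two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*y)] for row in x]


def add_multiple(m, k, src, dst):
    """Return a copy of m with k times row `src` added to row `dst`."""
    m = [row[:] for row in m]
    m[dst] = [d + k * s for d, s in zip(m[dst], m[src])]
    return m


def scale(m, k, row):
    """Return a copy of m with row `row` multiplied by k."""
    m = [r[:] for r in m]
    m[row] = [k * x for x in m[row]]
    return m


A = [[F(x) for x in row] for row in [[1, -1, 1], [0, 2, -1], [2, 3, 0]]]
identity = [[F(int(i == j)) for j in range(3)] for i in range(3)]

# Assumed sequence of operations (rows are 0-indexed in the code).
ops = [
    (add_multiple, -2, 0, 2),   # R3 + (-2)R1 -> R3
    (scale, F(1, 2), 1),        # (1/2)R2 -> R2
    (add_multiple, 1, 1, 0),    # R1 + R2 -> R1
    (add_multiple, -5, 1, 2),   # R3 + (-5)R2 -> R3
]

left, right = A, identity              # the two halves of [A | I]
assert left == matmul(right, A)        # holds at the start: A = I A
for op, *args in ops:
    left, right = op(left, *args), op(right, *args)  # same op on both halves
    assert left == matmul(right, A)    # and still holds after every operation

for row in right:
    print(" ".join(str(x) for x in row))
# 1 1/2 0
# 0 1/2 0
# -2 -5/2 1
```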

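This relation is preserved at every step. Each elementary row operation amounts to multiplying both halves on the left by the same elementary matrix $E$. So if the left half $L$ and the right half $R$ satisfy $L = RA$, then $EL = (ER)A$. When the left half finally becomes $I$, the right half $B$ satisfies $I = BA$. For a square matrix, a one-sided inverse is also the two-sided inverse, so $B = A^{-1}$. This gives the following theorem: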

If $[A | I]$ is transformed by row operations into $[I | B]$, then the resulting matrix $B$ is $A^{-1}$. If, however, we obtain all 0's in one or more rows to the left of the vertical line, then $A^{-1}$ does not exist.
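The second half of the theorem can be seen on a small singular matrix. The sketch below row-reduces $[A|I]$ as far as possible, skipping any column that has no usable pivot. It then lists the rows whose left part is all zeros. The helper names `rref_augmented` and `zero_left_rows` are hypothetical. The example matrix is our own choice: its third row is the sum of the first two, so it has no inverse.

```python
from fractions import Fraction


def rref_augmented(a):
    """Row-reduce [A | I] as far as possible, skipping columns with no pivot."""
    n = len(a)
    m = [[Fraction(x) for x in row] + [Fraction(int(i == j)) for j in range(n)]
         for i, row in enumerate(a)]
    r = 0  # index of the next row to receive a pivot
    for col in range(n):
        pivot = next((i for i in range(r, n) if m[i][col] != 0), None)
        if pivot is None:
            continue  # no pivot in this column; move to the next one
        m[r], m[pivot] = m[pivot], m[r]
        p = m[r][col]
        m[r] = [x / p for x in m[r]]
        for i in range(n):
            if i != r and m[i][col] != 0:
                f = m[i][col]
                m[i] = [x - f * y for x, y in zip(m[i], m[r])]
        r += 1
    return m


def zero_left_rows(m, n):
    """Indices of rows whose first n entries (the left half) are all zero."""
    return [i for i, row in enumerate(m) if all(x == 0 for x in row[:n])]


A = [[1, 2, 3], [2, 5, 7], [3, 7, 10]]   # row 3 = row 1 + row 2
m = rref_augmented(A)
for i in zero_left_rows(m, 3):
    print(" ".join(map(str, m[i][:3])), "|", " ".join(map(str, m[i][3:])))
# prints: 0 0 0 | -1 -1 1
```

Each row of the left half equals the corresponding row of the right half times $A$. The right half of the zero row, $(-1, -1, 1)$, therefore records the dependency $-\text{row}_1 - \text{row}_2 + \text{row}_3 = 0$ among the rows of $A$.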