Just to expand on what Raymond Manzoni wrote, and to partially answer your question with one of the coolest things I ever learned in my early childhood (as a math undergrad),

consider the family of series

$$\begin{eqnarray}
\sum \frac{1}{n}&=&\infty \\
\sum \frac{1}{n\ln(n)}&=&\infty \\
\sum \frac{1}{n\ln(n)\ln(\ln(n))}&=&\infty
\end{eqnarray}$$

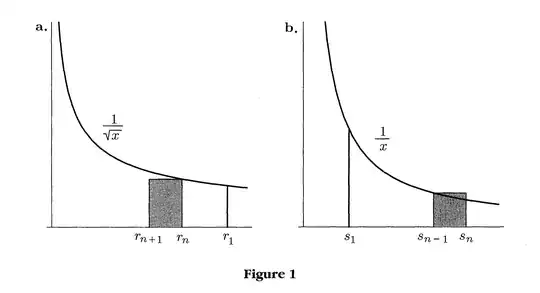

and so on. They all diverge, and the integral test proves this easily. But here is the kicker: the third series requires a googolplex number of terms before its partial sums exceed 10. This is only natural: if the natural log is a slowly increasing function, then the log of the log increases even more slowly.
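To get a feel for how slowly these partial sums grow, here is a minimal Python sketch. The names `SERIES`, `partial_sum`, and `growth_table` are my own, and starting each sum at the first index where every logarithm is positive (1, 2, and 3) is an assumption, since the formulas above leave the starting index unspecified. The integral test suggests the partial sums up to $N$ grow like $\ln(N)$, $\ln(\ln(N))$, and $\ln(\ln(\ln(N)))$ respectively, up to an additive constant, so the sketch reports those values alongside the sums.

```python
import math

# (label, n-th term, first index where every logarithm is positive,
#  growth of the partial sums suggested by the integral test)
# The starting indices are an assumption; the text does not fix them.
SERIES = [
    ("1/n", lambda n: 1.0 / n, 1,
     lambda N: math.log(N)),
    ("1/(n ln n)", lambda n: 1.0 / (n * math.log(n)), 2,
     lambda N: math.log(math.log(N))),
    ("1/(n ln n ln ln n)",
     lambda n: 1.0 / (n * math.log(n) * math.log(math.log(n))), 3,
     lambda N: math.log(math.log(math.log(N)))),
]


def partial_sum(term, start, stop):
    """Sum term(n) for n = start, ..., stop (inclusive)."""
    return math.fsum(term(n) for n in range(start, stop + 1))


def growth_table(cutoffs=(10**2, 10**4, 10**6)):
    """Map each label to a list of (N, partial sum, suggested growth) triples."""
    table = {}
    for label, term, start, growth in SERIES:
        table[label] = [
            (N, partial_sum(term, start, N), growth(N)) for N in cutoffs
        ]
    return table


if __name__ == "__main__":
    for label, rows in growth_table().items():
        for N, total, growth in rows:
            print(f"{label:20s} N={N:<8d} sum={total:8.4f} growth={growth:8.4f}")
```

Going from $N=10^2$ to $N=10^6$ multiplies the work by ten thousand, yet each extra logarithm in the denominator shrinks the gain in the partial sum considerably. Brute force like this cannot get anywhere near the googolplex regime, of course; it only shows the trend.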

On the other hand, consider the family,

$$\begin{eqnarray}
\sum \frac{1}{n^2}&<&\infty \\
\sum \frac{1}{n(\ln(n))^2}&<&\infty \\
\sum \frac{1}{n\ln(n)(\ln(\ln(n)))^2}=38.43\ldots&<&\infty
\end{eqnarray}$$

all converge, which can again be verified easily using the integral test. But the third series converges so slowly that it requires $10^{3.14\times10^{86}}$ terms to obtain the two-decimal accuracy shown above. Talk about getting close to the "boundary" between convergence and divergence! Using this pattern, you can easily make up a series that converges or diverges as slowly as you want. So to answer your question: no, there is no such thing as "the slowest diverging series". For any slowly diverging series you pick, we can come up with one that diverges even more slowly.
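As a rough check on the $10^{3.14\times10^{86}}$ figure, here is a sketch of the integral-test tail estimate. It assumes that "two-decimal accuracy" means a remainder below $0.005$, which is my reading rather than something the reference spells out. Since $-1/\ln(\ln(x))$ is an antiderivative of the summand, the tail after $N$ terms is roughly

$$\begin{eqnarray}
\sum_{n>N} \frac{1}{n\ln(n)(\ln(\ln(n)))^2} &\approx& \int_N^\infty \frac{dx}{x\ln(x)(\ln(\ln(x)))^2} = \frac{1}{\ln(\ln(N))} \\
\frac{1}{\ln(\ln(N))} \le 0.005 &\iff& N \ge e^{e^{200}} = 10^{e^{200}/\ln(10)} \approx 10^{3.14\times10^{86}}
\end{eqnarray}$$

which agrees with the quoted number of terms.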

Reference:

Zwillinger, D. (Ed.). CRC Standard Mathematical Tables and Formulae, 30th ed. Boca Raton, FL: CRC Press, 1996.