In the article Fixed-Time Stable Gradient Flows: Applications to Continuous-Time Optimization I found an interesting formula and its properties. A screenshot of the relevant page from the article is shown below.

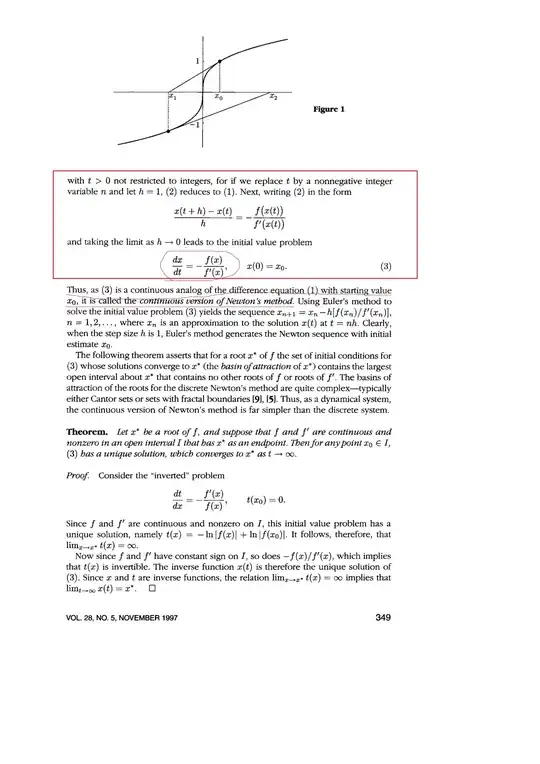

In another article, A Continuous Version of Newton's Method, I found a similar formula. In contrast to the first article, which uses the ratio of the gradient $G$ to the Hessian $H$, i.e. $G/H$, this one uses $f(x)/G(x)$. A screenshot from that article is also shown below.

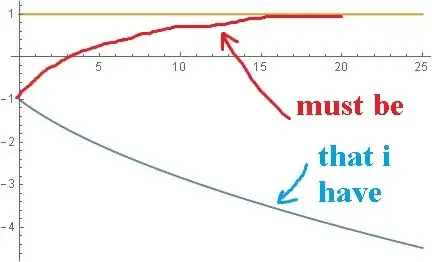

Problem: I decided to "play" with the formula

$\frac{dx}{dt}=-\left(\frac{d^2f}{dx^2}\right)^{-1}\frac{df}{dx}$

and the function $f(x)$

$f(x)=e^{-(x-x_*)^2}$

and found that neither of them works as it should (the solution needs to converge from the starting point $x(0)$ to the point $x_*$).
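
Writing out the right-hand side by hand for this particular $f$ may make the behaviour easier to see. This is only a sketch; the shorthand $u = x - x_*$ is introduced here for convenience and does not appear in either article.

$$f'(x) = -2u\,f(x), \qquad f''(x) = (4u^2 - 2)\,f(x), \qquad u = x - x_*$$

$$\frac{dx}{dt} = -\frac{f'(x)}{f''(x)} = \frac{2u}{4u^2 - 2} = \frac{u}{2u^2 - 1}$$

For $|u| < 1/\sqrt{2}$ the denominator is negative, so $du/dt$ has the opposite sign to $u$ and $x$ moves toward $x_*$. For $|u| > 1/\sqrt{2}$ the denominator is positive, so $du/dt$ has the same sign as $u$ and $x$ moves away from $x_*$. With $x(0) = -1$ and $x_* = 1$ the initial value is $u(0) = -2$, which lies in the second region. Note also that $f''$ changes sign at $|u| = 1/\sqrt{2}$, so this $f$ is neither convex nor concave on the whole line.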

Below I give the Mathematica code and the result.

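    (* reset any previous definitions *)
    Clear["Derivative"]
    ClearAll["Global`*"]

    (* starting point and target point x_* *)
    pars = {xstart = -1, xend = 1}

    (* test function, written in terms of x[t] *)
    f = Exp[-(x[t] - xend)^2]
    (* output: E^-(-1 + x[t])^2 *)

    (* Newton flow: x'[t] == -(f'')^-1 f' *)
    sys = NDSolve[{x'[t] == -(D[D[f, x[t]], x[t]])^-1 D[f, x[t]],
        x[0] == xstart}, {x}, {t, 0, 500}]

    (* trajectory x[t] plotted together with the target xend *)
    Plot[{Evaluate[x[t] /. sys], xend}, {t, 0, 25},
      PlotRange -> Full, PlotPoints -> 100]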
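
For comparison, here is a minimal sketch of the same experiment with the starting point moved closer to `xend`. The start value `x[0] == 1/2` and the names `rhs` and `sol` are illustrative choices of mine, not something taken from either article; everything else follows the code above.

```mathematica
(* Sketch only: same flow as above, but started near xend *)
ClearAll[x, t, f, rhs, sol];
xend = 1;

(* same test function as before *)
f = Exp[-(x[t] - xend)^2];

(* right-hand side -f'/f'', simplified symbolically *)
rhs = Simplify[-D[f, x[t]]/D[f, {x[t], 2}]];

(* assumed start x[0] == 1/2, i.e. within 1/Sqrt[2] of xend *)
sol = NDSolve[{x'[t] == rhs, x[0] == 1/2}, x, {t, 0, 25}];

(* compare the trajectory with the target *)
Plot[{Evaluate[x[t] /. sol], xend}, {t, 0, 25}, PlotRange -> All]
```

From this start the trajectory approaches `xend`, whereas from `xstart = -1` it drifts away, so the behaviour seems to depend on where the flow is started.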

Question: What's wrong with this formula or where did I make a mistake?

I would be glad of any help.

https://www.sciencedirect.com/science/article/abs/pii/s0005109812002324 – dtn Apr 10 '21 at 07:02

This approach cannot be generalized. A truly general system should converge to the extremum from anywhere. Treating the function as locally quadratic is, in my opinion, too strong an assumption. – dtn Apr 12 '21 at 06:25