Taylor series can diverge and have only a limited radius of convergence, but it seems that this divergence is often more a result of the summation method being too narrow than of the series actually diverging.

For instance, take $$\frac{1}{1-x} = \sum_{n=0}^\infty x^n$$ at $x=-1$. This series is considered divergent, but smoothing the sum gives $\sum_{n=0}^\infty (-1)^n = \frac{1}{2}$, which agrees with $\frac{1}{1-(-1)}$. Similarly, $$\sum_{n=0}^\infty nx^n = x\frac{d}{dx}\frac{1}{1-x} = \frac{x}{(1-x)^2}$$ normally diverges at $x=-1$, but smoothing the sum gives $-\frac{1}{4}$, which agrees with the function. This continues to hold no matter how many times one repeats the step of differentiating and then multiplying by $x$.
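Assuming that "smoothing" here means Abel summation (evaluate $\sum a_n r^n$ and let $r \to 1^-$), the values above can be checked numerically. This is a minimal sketch of my own; `abel_sum`, the fixed radius `r = 0.999` and the number of terms are arbitrary illustrative choices, so the results are only approximate. The third line applies one more round of $x\frac{d}{dx}$, giving $\frac{x(1+x)}{(1-x)^3}$, which vanishes at $x=-1$.

```python
def abel_sum(coeff, r=0.999, terms=100_000):
    """Approximate the Abel sum of sum_{n>=0} coeff(n) * (-1)^n.

    Abel summation evaluates the power series sum_n coeff(n) * x^n at
    x = -r just inside the unit disk and lets r -> 1; here r is simply
    fixed close to 1, so the result is only an approximation.
    """
    return sum(coeff(n) * (-r) ** n for n in range(terms))


print(abel_sum(lambda n: 1))      # near 1/2, from 1/(1-x) at x = -1
print(abel_sum(lambda n: n))      # near -1/4, from x/(1-x)^2 at x = -1
print(abel_sum(lambda n: n * n))  # near 0, from x(1+x)/(1-x)^3 at x = -1
```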

One can extend series of these types even further. Taking $$\frac{1}{1+x} =\sum_{n=0}^\infty (-x)^n = \sum_{n=0}^\infty (-1)^ne^{\ln(x)n} = $$ $$\sum_{n=0}^\infty (-1)^n\sum_{m=0}^\infty \frac{\left(\ln(x)n\right)^m}{m!} = \sum_{m=0}^\infty \sum_{n=0}^\infty (-1)^n\frac{\left(\ln(x)n\right)^m}{m!} = $$ $$\sum_{m=0}^\infty\frac{\ln(x)^m}{m!} \sum_{n=0}^\infty (-1)^n n^m = 1 -\sum_{m=0}^\infty\frac{\ln(x)^m}{m!} \eta(-m)$$

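This sum converges to $\frac{1}{1+x}$ for $e^{-\pi} < x < e^{\pi}$ (that is, $|\ln x| < \pi$), which already goes well beyond the original disk $|x|<1$. It does not converge for every $x>0$: it is a power series in $\ln x$, and $\frac{1}{1+e^{\mu}}$ has poles at $\mu = \pm i\pi$.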
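A quick numerical check of the eta series, as a sketch. It assumes the closed form $\eta(-m) = \frac{(2^{m+1}-1)B_{m+1}}{m+1}$ for $m \ge 1$ (from $\eta(s) = (1-2^{1-s})\zeta(s)$ and $\zeta(-m) = -\frac{B_{m+1}}{m+1}$), with $\eta(0)=\frac12$; the helper names and the cutoff of 80 terms are my own choices. Numerically the terms behave like $(\ln x/\pi)^m$, so the partial sums settle for $x=0.5, 2, 10$ but blow up at $x=100$.

```python
from fractions import Fraction
from math import comb, factorial, log


def bernoulli_numbers(n_max):
    """Exact B_0..B_n_max (convention B_1 = -1/2) from sum_{k<=n} C(n+1, k) B_k = 0."""
    B = [Fraction(1)]
    for n in range(1, n_max + 1):
        B.append(-sum(comb(n + 1, k) * B[k] for k in range(n)) / (n + 1))
    return B


def eta_neg(m, B):
    """Dirichlet eta at -m for integer m >= 0."""
    if m == 0:
        return Fraction(1, 2)
    return (2 ** (m + 1) - 1) * B[m + 1] / (m + 1)


def eta_series(x, terms=80):
    """Partial sum of 1 - sum_{m<terms} ln(x)^m / m! * eta(-m)."""
    B = bernoulli_numbers(terms)
    L = log(x)
    return 1 - sum(L**m / factorial(m) * float(eta_neg(m, B)) for m in range(terms))


for x in (0.5, 2.0, 10.0, 100.0):
    print(x, eta_series(x), 1 / (1 + x))
```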

In general, one can transform any sum of the form $$ \sum_{n=1}^\infty a_n x^n = \sum_{n=1}^\infty \left(\sum_{k=0}^\infty b_k n^k\right) (-1)^n x^n =$$ $$ -\sum_{k=0}^\infty b_k \sum_{m=0}^\infty\frac{\ln(x)^m}{m!}\eta(-(m+k)), $$ where the coefficients are written as $a_n = (-1)^n\sum_{k=0}^\infty b_k n^k$.

In general, how far is it possible to extend the range of convergence simply by overloading the summation operation? (Here I assign values using the eta function, but I can imagine using Abel summation or another regularization.) Are there any interesting results that come from extending the range of convergence of Taylor series?

One theory I had was that, under such a summation method, the Taylor series should converge for any alternating series with monotonic $|a_k|$. Is this true? Are there any series whose radius of convergence cannot be increased by changing the summation method? In short, my goal is to find a way of overloading the summation operation that widens the radius of convergence of the Taylor series as far as possible while still agreeing with the function.

Edit: I wanted to add that, for this question, it might be useful to know that $$ \sum_{m=0}^\infty\frac{\ln(x)^m}{m!}\eta(-(m-w))= -Li_w(-x) $$ for $|\ln x| < \pi$, where $Li_w$ is the polylogarithm of order $w$. So the summation method I provided transforms a sum into a finite or infinite series of polylogarithms.
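A sketch that checks this identity numerically for a few integer orders $w \le 1$ (the negative orders $w=-k$ are the building blocks of the $b_k$ transform above). It uses $\eta(1)=\ln 2$, $\eta(0)=\frac12$ and the Bernoulli-number formula for $\eta$ at negative integers, plus the known closed forms $Li_1(z)=-\ln(1-z)$, $Li_0(z)=\frac{z}{1-z}$, $Li_{-1}(z)=\frac{z}{(1-z)^2}$ and $Li_{-2}(z)=\frac{z(1+z)}{(1-z)^3}$. The function names and the 80-term cutoff are illustrative, and orders $w \ge 2$ are deliberately not handled.

```python
from fractions import Fraction
from math import comb, factorial, log


def bernoulli_numbers(n_max):
    """Exact B_0..B_n_max (convention B_1 = -1/2)."""
    B = [Fraction(1)]
    for n in range(1, n_max + 1):
        B.append(-sum(comb(n + 1, k) * B[k] for k in range(n)) / (n + 1))
    return B


def eta_at_int(s, B):
    """Dirichlet eta at an integer s <= 1, using exact Bernoulli numbers B."""
    if s == 1:
        return log(2)
    if s == 0:
        return 0.5
    k = -s
    return float((2 ** (k + 1) - 1) * B[k + 1] / (k + 1))


def lhs(x, w, terms=80):
    """Partial sum of sum_m ln(x)^m / m! * eta(w - m) for an integer w <= 1."""
    if w > 1:
        raise ValueError("this sketch only handles integer w <= 1")
    B = bernoulli_numbers(terms - w + 1)
    L = log(x)
    return sum(L**m / factorial(m) * eta_at_int(w - m, B) for m in range(terms))


x = 2.0
print(lhs(x, 1), log(1 + x))                      # -Li_1(-x) = ln(1+x)
print(lhs(x, 0), x / (1 + x))                     # -Li_0(-x)
print(lhs(x, -1), x / (1 + x) ** 2)               # -Li_{-1}(-x)
print(lhs(x, -2), x * (1 - x) / (1 + x) ** 3)     # -Li_{-2}(-x)
```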

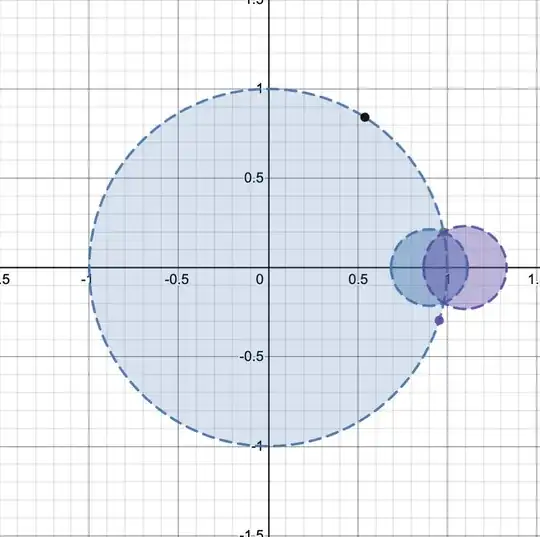

This is somewhat tangential, but after reflecting more on this problem, it seems to me that a Taylor series contains enough information to extend a sum up to the next singularity on the real line. For instance, if I graph a function in the complex plane, then the radius of convergence is the distance to the nearest singularity. In this image, I have a few different singularities (shown as dots). But since the original Taylor series (call it $T_1(x)$, shown as the large blue circle in the image) exactly matches the function within this disk, it's possible to get another Taylor series (call it $T_2(x)$, shown as the small blue circle) centered around another point while relying only on derivatives of $T_1(x)$. From $T_2(x)$, it's possible to get $T_3(x)$ (the purple circle), which extends the region of convergence even further. I think this shows that the information contained in the Taylor series is enough to extend all the way to a singularity on the real line, rather than being limited by the distance to a singularity in the complex plane. The only time this wouldn't work is if the set of points on the boundary circle where the function diverges is infinite and dense enough that no circle can 'squeeze through' using the previous method. So in theory, it seems that any Taylor series with a non-zero radius of convergence and a non-dense set of singularities uniquely determines a function that can be defined on the entire complex plane (minus singularities).

Edit 2: This is what I have so far for iterating the Taylor series. If the Taylor series for $f(x)$ is $$T_1(x)=\sum_{n=0}^\infty \frac{f^{(n)}(0)}{n!} x^n$$ and its radius of convergence is $R$, then we can center $T_2(x)$ at $x=\frac{9}{10}R$, since that point lies inside the disk of convergence. We get $$T_2(x) = \sum_{n=0}^\infty \frac{T_1^{(n)}\left(\frac{9}{10}R\right)}{n!} \left(x-\frac{9}{10}R\right)^n = $$ $$\sum_{n=0}^\infty \left(\frac{\sum_{k=n}^\infty \frac{f^{(k)}(0)}{k!} \frac{k!}{(k-n)!} \left(\frac{9}{10}R\right)^{k-n}}{n!}\right) \left(x-\frac{9}{10}R\right)^n = \sum_{n=0}^\infty \left(\sum_{k=n}^\infty f^{(k)}(0) \frac{1}{(k-n)!\,n!} \left(\frac{9}{10}R\right)^{k-n}\right) \left(x-\frac{9}{10}R\right)^n $$ and in general, centered at $x_{center}$, $$T_{w+1}(x) = \sum_{n=0}^\infty \frac{T_w^{(n)}\left(x_{center}\right)}{n!} \left(x-x_{center}\right)^n.$$ I tested this out for $\ln(x)$ and it seemed to work well, but I suppose it could fail if differentiating $T_w(x)$ too many times causes it to no longer match $f(x)$ closely.
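A minimal sketch of one $T_1 \to T_2$ step, computing the inner sum $\sum_{k \ge n} c_k \binom{k}{n} c^{k-n}$ with exact fractions so that no cancellation error creeps in. The test function $f(x)=\frac{1}{1+x^2}$, the 400 input terms, the 40 kept terms and the helper names are my own illustrative choices. $f$ has radius of convergence 1 at 0 because of the poles at $\pm i$, even though nothing goes wrong on the real line. Keeping only the first few re-expanded coefficients is essential: re-expanding the whole truncated polynomial exactly would just reproduce the same polynomial, and its high-order coefficients at the new center are dominated by truncation error.

```python
from fractions import Fraction
from math import comb


def taylor_coeffs_at_zero(N):
    """First N Taylor coefficients of f(x) = 1/(1+x^2) at 0: 1, 0, -1, 0, 1, ..."""
    return [Fraction((-1) ** (k // 2)) if k % 2 == 0 else Fraction(0) for k in range(N)]


def recenter(coeffs, shift, M):
    """Re-expand sum_k coeffs[k] * u^k about u = shift, keeping M coefficients.

    d_n = sum_{k>=n} coeffs[k] * C(k, n) * shift^(k-n), i.e. T^(n)(shift) / n!.
    """
    shift = Fraction(shift)
    powers = [shift**j for j in range(len(coeffs))]
    return [
        sum(coeffs[k] * comb(k, n) * powers[k - n]
            for k in range(n, len(coeffs)) if coeffs[k])
        for n in range(M)
    ]


def evaluate(coeffs, center, x):
    """Evaluate sum_n coeffs[n] * (x - center)^n in floating point."""
    return sum(float(c) * (x - center) ** n for n, c in enumerate(coeffs))


T1 = taylor_coeffs_at_zero(400)         # converges only for |x| < 1
T2 = recenter(T1, Fraction(3, 5), 40)   # centered at 0.6, radius sqrt(1.36) ~ 1.166

x = 1.3                                 # outside the disk of T1
print(evaluate(T1, 0.0, x))             # enormous: T1's partial sums diverge here
print(evaluate(T2, 0.6, x), 1 / (1 + x**2))
```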

I tested this method of extending a function in Desmos; here is the link if you would like to try it: https://www.desmos.com/calculator/fwvuasolla

Edit 3: I looked into analytic continuation a bit, and it seems that the method I was thinking of (using the old coefficients to get new ones that extend the range of convergence) is already a known method. The known version uses Cauchy's differentiation formula, so it avoids some of the convergence problems from repeated differentiation that I was worried about before. So it appears there should be some way to overload the sum operation that achieves this same continuation. There's the trivial option of defining summation as whatever returns the same values as continuation would, but that's a very unsatisfying solution. It would be interesting to see whether it's possible to create a natural generalization of summation that agrees with this method of continuation.
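A minimal sketch of the Cauchy-formula version of the re-centering step, as I understand the idea (my own illustration, not code from any reference). The new coefficients are $\frac{f^{(n)}(c)}{n!} = \frac{1}{2\pi i}\oint \frac{f(z)}{(z-c)^{n+1}}\,dz$, with the contour integral approximated by the trapezoidal rule on a circle $|z-c|=r$ that lies inside the disk of $T_1$, so only values of $T_1$ are needed and no high-order derivatives are taken. The test function $\frac{1}{1+z^2}$, the center $c=0.6$, the radius $r=0.35$ and the point counts are assumptions chosen for illustration.

```python
import cmath
import math


def T1(z, N=1000):
    """Degree-(N-1) Taylor polynomial of 1/(1+z^2) at 0; trustworthy only for |z| < 1."""
    w = z * z
    acc = 0
    for k in reversed(range(N // 2)):  # Horner in w: coefficient of w^k is (-1)^k
        acc = acc * w + (-1) ** k
    return acc


def cauchy_coeffs(f, center, r, M, K=256):
    """Approximate f^(n)(center)/n! for n < M via Cauchy's integral formula,
    discretized with the trapezoidal rule at K points on |z - center| = r."""
    thetas = [2 * math.pi * j / K for j in range(K)]
    values = [f(center + r * cmath.exp(1j * t)) for t in thetas]
    coeffs = []
    for n in range(M):
        s = sum(v * cmath.exp(-1j * n * t) for v, t in zip(values, thetas))
        coeffs.append((s / (K * r**n)).real)  # f is real on the real axis
    return coeffs


center, r = 0.6, 0.35            # the circle stays inside |z| <= 0.95 < 1
T2 = cauchy_coeffs(T1, center, r, M=30)
x = 1.2                          # outside the disk where T1 converges
approx = sum(c * (x - center) ** n for n, c in enumerate(T2))
print(approx, 1 / (1 + x * x))
```

The trade-off is in $r$: a larger circle reduces the amplification of rounding errors by $r^{-n}$, but it has to stay inside the disk where $T_1$ converges.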

Edit 4: I think I may have found a starting point for extending the convergence of every Taylor series that can be extended. Based on my argument above about recursively applying the Taylor series, one algorithm could go as follows:

- Get the Taylor series at the starting point (call it $x_0$)

- Use this Taylor series to compute the function at all points in $[x_0,x_0+dt]$

- Recenter the Taylor series at $x_0 + dt$ by updating the derivatives

- Repeat from the first step, with $x_0 + dt$ as the new $x_0$, to keep extending the region of convergence

As long as dt is smaller than the distance from the real line to the nearest singularity, each recentered Taylor series converges over the next step. This method isn't all that useful on its own, since it requires many recursive steps, but it sets the stage for what I think is a natural way to extend summation to work for analytic continuation.

Instead of viewing the derivatives at a point as coefficients to be summed as a polynomial, view them as seed values for Euler's method: with $y_n$ the current estimate of $f^{(n)}$, each step updates $y_n \leftarrow y_n + dt\,y_{n+1}$. My motivation is that as $dt$ becomes very small, Euler's method should become a better and better approximation of the algorithm above. When $dt$ is sufficiently small, the values from Euler's method around $x_0$ should converge uniformly to the values the Taylor series would give. It seems to me that this should also hold for the derivatives of the Taylor series. My main concern with this method is that each step introduces a small error, and eventually this error might become impossible to control, but I'm not sure how to prove or disprove this.
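To make the Euler view concrete, here is a small exact check (a sketch; the helper name `euler_steps` and the values $N=40$, $dt=-2/40$ are illustrative). It applies the update $y_n \leftarrow y_n + dt\,y_{n+1}$ to the seed $y_n(0)=n!$ of $\frac{1}{1-x}$, keeping the last entry fixed like the `iterate` function in the code further down. Because each step multiplies the vector of derivatives by $(I + dt\,S)$, with $S$ the shift, after $N$ steps the first entry is exactly $\sum_{n=0}^{N}\binom{N}{n}dt^n\,y_n(0)$, as long as the list has at least $N+1$ entries.

```python
from fractions import Fraction
from math import comb, factorial


def euler_steps(derivs, dt, N):
    """Apply N forward-Euler steps y_n <- y_n + dt * y_(n+1) to a list of derivatives.

    The last entry has no higher derivative to update it with, so it stays fixed.
    """
    y = list(derivs)
    for _ in range(N):
        y = [y[i] + dt * y[i + 1] for i in range(len(y) - 1)] + [y[-1]]
    return y


N = 40
dt = Fraction(-2, N)                         # N steps from x = 0 to x = -2
seed = [factorial(n) for n in range(N + 1)]  # f^(n)(0) = n! for f(x) = 1/(1-x)
value = euler_steps(seed, dt, N)[0]
closed_form = sum(comb(N, n) * dt**n * seed[n] for n in range(N + 1))
print(value == closed_form, float(value))
```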

Based on this, could one view the Taylor series as providing a differential equation with infinitely many initial conditions? Does the theory of differential equations say anything about the existence and uniqueness of solutions to equations of this type? Does Euler's method actually work to extend the radius of convergence of a power series?

I've attached some code for running Euler's method on different functions. I've been able to extend the convergence of a number of functions, but it is hard to get much beyond 2-3 times the regular radius of convergence: the terms grow factorially, so it takes a very long time to run past that range. In the following code, I'm extending the function with the seed $a_n = n!$, which corresponds to $\frac{1}{1-x}$.

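```python
import math
from decimal import Decimal, getcontext

import matplotlib.pyplot as plt

# Need LOTS of precision to extend the range. Even 1000 accurate places
# causes a problem with large factorials.
getcontext().prec = 1000


def iterate(L, m):
    """One Euler step of size m on the system y_i' = y_(i+1).

    L[i] is the current estimate of the i-th derivative. The last entry has
    no higher derivative to update it with, so it is kept unchanged.
    """
    R = [L[i] + m * L[i + 1] for i in range(len(L) - 1)]
    R.append(L[-1])
    return R


def createL(S):
    """Seed derivatives a_n = n! at x = 0, i.e. those of 1/(1-x)."""
    return [Decimal(math.factorial(i)) for i in range(S)]


def createCorrectDeriv(S, x):
    """Exact derivatives n!/(1-x)^(n+1) of 1/(1-x), for comparison (unused below)."""
    return [pow(Decimal(1 - x), -1 - i) * math.factorial(i) for i in range(S)]


def runEuler():
    DT = -0.002
    dt = Decimal(str(DT))  # exactly -0.002; Decimal(DT) would carry binary float error
    W = int(-3 / DT)       # number of steps, enough to reach |x| = 3
    print("range converge should be: " + str(abs(DT * W)))
    print("Size list: " + str(W))

    L = createL(W)
    L_val, X_val, Y_val = [], [], []
    for i in range(W):
        L_val.append(float(L[0]))         # Euler estimate of f(DT * i)
        L = iterate(L, dt)
        X_val.append(-DT * i)             # plotted against t = -x, so both curves show 1/(1+t)
        Y_val.append(1.0 / (1 - DT * i))  # exact f(DT * i) = 1/(1 - DT * i)

    plt.plot(X_val, L_val, label="Euler")
    plt.plot(X_val, Y_val, label="exact")
    plt.legend()
    plt.show()


runEuler()
```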

Final Edit: I think I figured it out! The result is that, for analytic $f(x)$, $$f(x) = \lim_{dt \to 0}\sum_{n=0}^{x/dt} \frac{(dt)^n}{n!} f^{(n)}(0) \prod_{k=0}^{n-1} \left(\frac{x}{dt}-k\right),$$ where $dt$ has the same sign as $x$ and $x/dt$ is a whole number of steps. For $f(x) = \frac{1}{1-x}$, writing $x$ for the displacement in the negative direction, as the code above does (so the number of steps is $-\frac{x}{dt}$), this becomes $$\lim_{dt \to 0} e^{\frac{1}{dt}}dt^{-\frac{x}{dt}}\int_{\frac{1}{dt}}^{\infty}w^{-\frac{x}{dt}}e^{-w}\,dw,$$ which does indeed converge to $f(-x) = \frac{1}{1+x}$ for $x \in (-1,\infty)$.

Thanks to everyone for the help in getting here! Next, I'm going to see whether letting dt vary with the iteration extends this method to analytic continuation of functions whose boundaries are dense but not 100% dense, since my thought is that after an infinite number of steps it may be possible to squeeze through the 'openings' in the dense set.
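A sketch that evaluates the general formula at a fixed number of steps $N$ instead of taking the limit, using exact rational arithmetic because the individual terms are astronomically large and alternate in sign. It relies on $\frac{dt^n}{n!}\prod_{k=0}^{n-1}\left(\frac{x}{dt}-k\right) = \binom{N}{n}dt^n$ with $N = x/dt$. The name `euler_formula`, $N=1000$ and the test points are illustrative choices. For $f(x)=\frac{1}{1-x}$ the ordinary Taylor series diverges at $x=-2$, while this sum should approach $f(-2)=\frac13$; since Euler's method is first order, I'd expect an error of order $1/N$ for fixed $N$.

```python
from fractions import Fraction
from math import comb, factorial


def euler_formula(deriv, x, N):
    """sum_{n=0}^{N} C(N, n) * dt^n * f^(n)(0) with dt = x / N.

    deriv(n) must return f^(n)(0) exactly (an int or Fraction).
    """
    dt = Fraction(x) / N
    return sum(comb(N, n) * dt**n * deriv(n) for n in range(N + 1))


# f(x) = 1/(1-x) has f^(n)(0) = n!; its Taylor series diverges at x = -2.
approx = euler_formula(factorial, -2, 1000)
print(float(approx))                                        # close to f(-2) = 1/3
print(float(euler_formula(factorial, Fraction(1, 2), 1000)))  # close to f(1/2) = 2
```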