I am trying to differentiate $ \sqrt {8x +1} $. When I use the chain rule I get $\frac{4}{\sqrt{8x+1}}$. I checked it in Wolfram Alpha and it is the correct answer. But when I try to differentiate using the limit definition, I run into a problem. First, I write the basic definition of the derivative:$$ \lim_{h \to 0} \frac{\sqrt{8(x+h) + 1} - \sqrt{8x + 1}}{h} $$ Then I multiply the numerator and denominator by $ \sqrt{8(x+h) + 1}$ and get:

$$ \lim_{h \to 0} \frac{8(x+h) + 1 - \sqrt{8x + 1}\sqrt{8(x+h) + 1}}{h \sqrt{8(x+h) + 1}} $$ Then I assume that the $h$ in $\sqrt{8(x+h) + 1} \;$ equals $0$. (I think this assumption is wrong, but I really don't know why.)$$ \lim_{h \to 0} \frac{8(x+h) + 1 - (8x + 1)}{h \sqrt{8x + 1}} $$At the end I get$$ \lim_{h \to 0} \frac{8h}{h \sqrt{8x + 1}} = \frac{8}{\sqrt{8x + 1}}$$which is twice what I should get. My question is: what am I getting wrong?


1 Answer

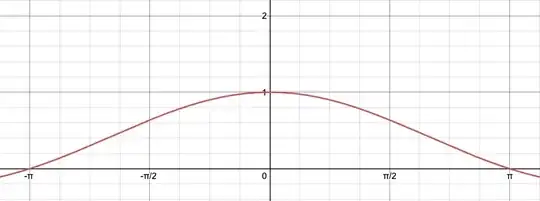

In general, when you have to compute $$ \lim_{h \to 0}\frac{f(x+h)-f(x)}{h} \, , $$ you are not allowed to simply set $h$ equal to $0$ without thinking. This is because limits tell you what a function does as $h$ gets close to $0$, but is not equal to it. Consider the graph of $y=\frac{\sin h}{h}$, where $h$ is measured in radians:

Looking at this plot, it is clear that as $h$ gets very close to $0$, $\frac{\sin h}{h}$ gets very close to $1$. The graph seems to suggest that $$ \frac{\sin 0}{0}=1 \, , $$ even though that is complete nonsense. Actually, the function is not defined when $h=0$. Nevertheless, we retain a strong urge to talk about the behaviour of the function around that point. Limits allow us to do this. When we write $$ \lim_{h \to 0}\frac{\sin h}{h}=1 \, , $$ what we mean is that $\frac{\sin h}{h}$ gets arbitrarily close to $1$ as $h$ approaches $0$. Although the function $\frac{\sin h}{h}$ never attains the value of $1$, the value of $1$ is the 'anticipated' value.

More formally, the expression $$ \lim_{h \to 0}\frac{\sin h}{h}=1 $$ is a shorthand for

We can make $\frac{\sin h}{h}$ as close to $1$ as we like by requiring that $h$ be sufficiently close to, but unequal to, $0$.

You might need to re-read that last sentence a few times. It is practically the definition of a limit, and I'm not exaggerating when I say that this definition forms the bedrock of the theory behind calculus.
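To make the "as close as we like" idea concrete, here is a minimal numerical sketch in Python. It is only an illustration, not a proof, and the function name `sin_over_h` is a hypothetical helper chosen for this example. It evaluates $\frac{\sin h}{h}$ at shrinking nonzero values of $h$ and refuses to evaluate at $h=0$, where the expression is undefined.

```python
import math


def sin_over_h(h: float) -> float:
    """Return sin(h)/h for nonzero h (h in radians).

    Hypothetical helper for illustration; the expression is
    undefined at h = 0, so that input is rejected.
    """
    if h == 0:
        raise ValueError("sin(h)/h is undefined at h = 0")
    return math.sin(h) / h


# Shrink h towards 0 (never reaching it) and watch the gap to 1 shrink.
for h in (0.1, 0.01, 0.001, 0.0001):
    value = sin_over_h(h)
    gap = abs(value - 1.0)
    print(f"h = {h:<7} sin(h)/h = {value:.12f}   |sin(h)/h - 1| = {gap:.3e}")
```

The printed gap shrinks as $h$ shrinks, which is the numerical shadow of the statement above. Floating-point output like this can only suggest the limit; the definition is what establishes it.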

Notice that the definition of a limit explicitly states that we are looking at the behaviour of a function around $0$, not at $0$. Even if a function is defined at $0$, this is still entirely irrelevant. For instance, consider the function $f$ given by $$ f(x) = \begin{cases} 2x &\text{ if $x\neq0$} \\ 42 &\text{ if $x=0$} \, . \end{cases} $$ It is clear that as $x$ shrinks towards $0$, so does $2x$. Hence, $$ \lim_{x \to 0}f(x)=0 \, . $$ This is in spite of the fact that $f(0)=42$.
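The same point can be seen numerically. The following is a small Python sketch of the piecewise function above; the sample points are an arbitrary choice made for this illustration. The values near $0$ head towards $0$, while the value at $0$ is $42$.

```python
def f(x: float) -> float:
    """The piecewise function from the text: 2x away from 0, and 42 at 0."""
    return 42.0 if x == 0 else 2.0 * x


# Approach 0 from both sides without ever evaluating at 0 itself.
for k in range(1, 8):
    x = 10.0 ** -k
    print(f"x = {x:.0e}   f(x) = {f(x):.1e}   f(-x) = {f(-x):.1e}")

# The value AT 0 plays no role in the limit.
print("f(0) =", f(0.0))
```

Nothing in the loop ever looks at `f(0.0)`, which mirrors the fact that the limit ignores the value of the function at the point being approached.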

However, there are still many cases where plugging in the value being approached does work. Consider, for instance, the limit as $h$ approaches $0$ of $2x+h$. This limit is equal to $2x$, and we could have got this answer by naively plugging in $h=0$. Functions like this are said to be continuous. In general, a function $f$ is said to be continuous at the point $a$ if $$ \lim_{x \to a}f(x)=f(a) \, . $$ As it turns out, almost all of the functions you are familiar with have this property. This includes:

- Polynomials and rational functions (quotients of polynomials)

- Trigonometric and hyperbolic functions, and their inverses

- Exponential and logarithmic functions

And all of the functions you get as a result of adding, multiplying, or composing the above functions.

This means that when we consider the limit of one of these functions, then provided that the function is defined at the point being approached, simply plugging in that value does work. This is the reason that the users in the comments are suggesting that you transform your problem into something where you are allowed to simply plug in $h=0$ into the entire expression. This method works precisely because the functions that you are used to dealing with (the elementary functions) are continuous.
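As a sketch of what such a transformation can look like for the limit in the question (this worked example is added for illustration and uses the standard conjugate trick; it assumes $8x+1>0$), multiply the numerator and denominator by $\sqrt{8(x+h)+1}+\sqrt{8x+1}$:

$$
\begin{aligned}
\lim_{h \to 0} \frac{\sqrt{8(x+h)+1}-\sqrt{8x+1}}{h}
&= \lim_{h \to 0} \frac{\bigl(8(x+h)+1\bigr)-(8x+1)}{h\left(\sqrt{8(x+h)+1}+\sqrt{8x+1}\right)} \\
&= \lim_{h \to 0} \frac{8h}{h\left(\sqrt{8(x+h)+1}+\sqrt{8x+1}\right)} \\
&= \lim_{h \to 0} \frac{8}{\sqrt{8(x+h)+1}+\sqrt{8x+1}} \\
&= \frac{8}{2\sqrt{8x+1}} = \frac{4}{\sqrt{8x+1}} \, .
\end{aligned}
$$

The value $h=0$ is plugged in only at the last step. By then the $h$ in the denominator has been cancelled, which is legitimate because $h \neq 0$ inside the limit, and what remains is continuous at $h=0$. In the attempt in the question, by contrast, $h$ was set to $0$ in one factor while the rest of the expression, including the $h$ in the denominator, still depended on $h$.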

There are people who have written about this in the past on this site, and have done so much more concisely than I could manage. I would suggest that you look at the following threads:

- Why does factoring eliminate a hole in the limit?

- Why are we allowed to cancel fractions in limits? (The top answer to this question considers the formal definition of a limit, which is essentially the same as the one given in this answer, but is formulated more precisely. You might want to look at the Wikipedia article on limits for more information.)

- Why does simplifying a function give it another limit? (The title of this question is misleading, and the answers try to tackle the very source of this confusion.)


Charles Babbage

– John Douma Mar 27 '21 at 20:35