One way to do this without making any assumptions is to start with the definition of the natural logarithm and work from there. This approach is outlined by Barry Cipra (+1). I posted an answer to "How can we come up with the definition of natural logarithm?", which I will reproduce here. Although it seems unnatural to start with the logarithm and use it to define exponents, this approach is easy to make rigorous and can also be made fairly intuitive. As I mention in that post, the reason we might choose to do this is that it is fairly difficult to define something like $2^\pi$. It's clear what $2^x$ means when $x$ is a positive integer, and even when $x$ is rational, but not when $x$ is irrational. Starting with the logarithm, we can avoid this issue.

The study of the exponential function and the logarithm is motivated by the desire to model a particular kind of growth, in which the rate of change of a quantity is proportional to the value of that quantity at any given time. Consider how the population of a colony of bacteria might change over time: perhaps it doubles every hour. Or consider how your wealth compounds when you put it into a bank. In both cases, we are dealing with exponential growth. Later, when calculus makes rigorous the notion of 'instantaneous change', we may define an exponential function as a solution to the differential equation

$$
\frac{dy}{dx}=ky \, .
$$

Separating the variables and integrating both sides, we find that

$$
kx+C = \int \frac{1}{y} \, dy \, . \tag{*}\label{*}
$$

It seems like we have hit a roadblock, because we cannot integrate $1/y$ using the power rule

$$
\int y^n \, dy = \frac{y^{n+1}}{n+1}+C \, ,
$$

since setting $n=-1$ would lead to division by zero. However, the fundamental theorem of calculus tells us that, for $y>0$ and any fixed $a>0$, the function

$$
\int_{a}^{y} \frac{1}{t} \, dt
$$

is an antiderivative of $1/y$. Varying $a$ gives a family of functions that differ only by a constant. Since there is already an arbitrary constant on the LHS of $\eqref{*}$, we can take $a=1$ without loss of generality. This yields

$$
kx+C = \int_{1}^{y} \frac{1}{t} \, dt \, .
$$

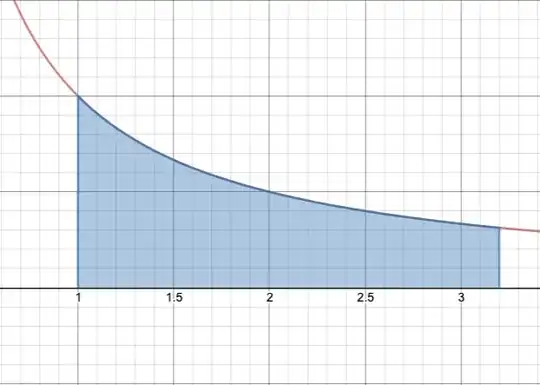

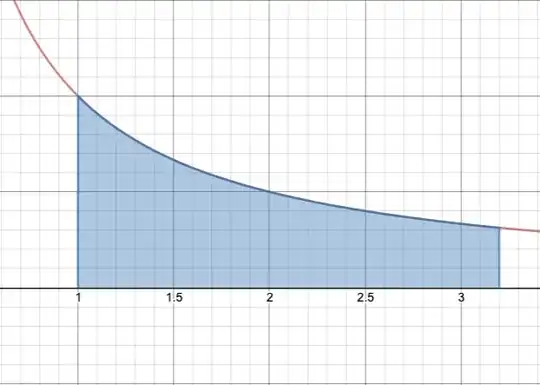

Let $f(y)=\int_{1}^{y} \frac{1}{t} \, dt$. This function represents the area of the region bounded by the hyperbola $1/t$, the horizontal axis, and the vertical lines $t=1$ and $t=y$:

As the value of $y$ gets larger, the area of this region also gets larger. We can then verify that $f$ is strictly increasing, meaning that $f^{-1}$ exists. Hence,

$$
kx+C = f(y) \implies y = f^{-1}(kx+C) \, .
$$
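To make $f$ and $f^{-1}$ concrete, here is a minimal numerical sketch in Python. It assumes that composite Simpson's rule with 1000 subintervals is accurate enough for moderate values of $y$, and it inverts $f$ by bisection, which works only because $f$ is strictly increasing. The names `f` and `f_inverse` are illustrative, not from any library; `math.log` and `math.e` appear only as reference values for comparison.

```python
import math

def f(y: float, n: int = 1000) -> float:
    """Approximate f(y) = integral from 1 to y of dt/t with composite Simpson's rule (y > 0)."""
    if y <= 0:
        raise ValueError("f is only defined for y > 0")
    if n % 2:
        n += 1  # Simpson's rule needs an even number of subintervals
    h = (y - 1) / n  # negative when y < 1, which gives the signed integral
    total = 1 / 1 + 1 / y  # values of 1/t at the two endpoints
    for i in range(1, n):
        t = 1 + i * h
        total += (4 if i % 2 else 2) / t  # Simpson weights 4, 2, 4, ..., 4
    return total * h / 3

def f_inverse(v: float, tol: float = 1e-12) -> float:
    """Solve f(y) = v for y > 0 by bisection; valid because f is strictly increasing."""
    lo, hi = 1.0, 1.0
    while f(lo) > v:  # widen the bracket downwards until f(lo) <= v
        lo /= 2
    while f(hi) < v:  # widen the bracket upwards until f(hi) >= v
        hi *= 2
    while hi - lo > tol * hi:
        mid = (lo + hi) / 2
        if f(mid) < v:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

print(f(2.0), math.log(2.0))     # the integral agrees with the library logarithm
print(f_inverse(1.0), math.e)    # the y with f(y) = 1
```

Note that `f_inverse(1.0)` lands on the number that the answer goes on to call $e$.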

Since growth of this kind is called exponential growth, it only seems right to bestow upon the function $f^{-1}$ the new name $\exp$. The statement reads more nicely as

$$
y=\exp(kx+C) \, .
$$

Of all the types of exponential growth, the most pleasing is that in which the rate of change of a quantity is equal to the value of that quantity (not just proportional to it). Since the set of solutions to $dy/dx = ky$ is $y=\exp(kx+C)$, the set of solutions to $dy/dx = y$ is

$$
y=\exp(x+C) \, .
$$

And of these solutions, you will no doubt agree that the simplest and most aesthetic is

$$
y=\exp(x) \, ,
$$

where $\exp(x)$ is defined as the inverse of $f(x)=\int_{1}^{x} \frac{1}{t} \, dt$. For obvious reasons, this function is called the natural exponential function. There seem to be clear parallels between $\exp$ and functions of the form $g(x)=a^x$. If we return to our example of exponential modelling, the colony of bacteria that doubles in size every hour, it certainly seems that $2^x$ is an exponential function. After all, the growth rate of a bacterial colony is proportional to its population at any given point in time. This suggests that

$$
2^x = \exp(kx+C)
$$

for some values of $k$ and $C$. (So far, we haven't actually defined what $2^x$ means when $x$ equals $\sqrt{2}$, say, but we'll leave that to one side for the moment and use an 'intuitive' definition of irrational exponents, where $2^{\sqrt{2}} \approx 2^{1.41421}$.) Suspecting that $a^x$ and $\exp(x)$ are somehow linked, we try to recall the essential properties of $a^x$, hoping that $\exp(x)$ shares these properties. The most important of these properties is that

$$
a^x \cdot a^y = a^{x+y} \, .
$$

And it turns out that $\exp(x)$ also has this property! In other words,

$$
\exp(p) \cdot \exp(q) = \exp(p+q) \, .
$$

However, proving this takes a little work. Since $\exp(x)$ is defined as the inverse of $f(x)=\int_{1}^{x}\frac{1}{t} \, dt$, it seems sensible to use this integral in our proof. Write $p=f(r)$ and $q=f(s)$, so that $r=\exp(p)$ and $s=\exp(q)$. Then showing that $\exp(p) \cdot \exp(q) = \exp(p+q)$ is equivalent to showing that $f(r) + f(s) = f(rs)$ for all $r,s>0$, since

\begin{align}
&f(r) + f(s) = f(rs) \\
\iff &f^{-1}(f(r)+f(s)) = rs \\
\iff &f^{-1}(f(r)+f(s)) = f^{-1}(f(r)) \cdot f^{-1}(f(s)) \\
\iff &\exp(p+q) = \exp(p) \cdot \exp(q)
\end{align}

(And this should make intuitive sense, too. Notice how $f$ changes multiplication into addition, and so it is only natural that $f^{-1}$ changes addition into multiplication.) There is also a slick way to prove that $f(r)+f(s)=f(rs)$:

$$
\int_{1}^{rs}\frac{1}{t} \, dt = \int_{1}^{r}\frac{1}{t} \, dt + \int_{r}^{rs}\frac{1}{t} \, dt \, .
$$

Let $z=t/r$, so that $dt = r\,dz$ and $\frac{dt}{t} = \frac{dz}{z}$, while the limits $t=r$ and $t=rs$ become $z=1$ and $z=s$. Then we have

\begin{align}
\int_{1}^{rs}\frac{1}{t} \, dt &= \int_{1}^{r}\frac{1}{t} \, dt + \int_{1}^{s}\frac{1}{z} \, dz \\
f(rs) &= f(r) + f(s) \, .
\end{align}
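The substitution argument can also be sanity-checked numerically. The Python sketch below uses a hypothetical helper `integral_of_reciprocal`, based on composite Simpson's rule with 2000 subintervals (an assumption about adequate accuracy, not part of the argument), to compare $f(rs)$ with $f(r)+f(s)$, and to compare the piece $\int_r^{rs}\frac{dt}{t}$ with its rescaled form $\int_1^s\frac{dz}{z}$, for a few sample pairs $(r,s)$.

```python
def integral_of_reciprocal(a: float, b: float, n: int = 2000) -> float:
    """Composite Simpson approximation of the integral from a to b of dt/t (a, b > 0, n even)."""
    h = (b - a) / n
    total = 1 / a + 1 / b  # endpoint values of 1/t
    for i in range(1, n):
        total += (4 if i % 2 else 2) / (a + i * h)  # Simpson weights 4, 2, 4, ...
    return total * h / 3

for r, s in [(2.0, 3.0), (0.5, 7.0), (1.5, 1.5)]:
    lhs = integral_of_reciprocal(1, r * s)              # f(rs)
    rhs = integral_of_reciprocal(1, r) + integral_of_reciprocal(1, s)  # f(r) + f(s)
    tail = integral_of_reciprocal(r, r * s)             # the piece from r to rs
    rescaled = integral_of_reciprocal(1, s)             # the same piece after z = t/r
    print(f"r={r}, s={s}: f(rs)={lhs:.10f}, f(r)+f(s)={rhs:.10f}, "
          f"tail={tail:.10f}, rescaled={rescaled:.10f}")
```

Agreement to many decimal places is of course only evidence; the substitution above is what actually proves the identity.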

Now that we have established that $\exp(p) \cdot \exp(q)=\exp(p+q)$, it seems reasonable to conjecture that $\exp(x)$ is actually equal to $a^x$ for some base $a$. To find that base, we plug in $x=1$, yielding $\exp(1)$, which we will abbreviate as $e$. This means that

$$
e^x = \exp(x) \, .
$$

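This notation, apart from being very convenient, is also entirely valid. Repeatedly applying the product rule, together with $\exp(0)=1$ (because $f(1)=0$), gives $\exp(m)=e^m$ for every integer $m$, and $\exp(m/n)^n=\exp(m)=e^m$ for every positive integer $n$, so $\exp(m/n)=e^{m/n}$. Thus $\exp(x)$ agrees with $e^x$ whenever $x$ is rational, and for irrational $x$ we simply take $e^x=\exp(x)$ as the definition. To reiterate, $\exp(p) \cdot \exp(q)=\exp(p+q)$, and so

$$
e^p \cdot e^q = e^{p+q}
$$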

as expected. Geometrically, $e$ represents the number $a$ for which $\int_{1}^{a}\frac{1}{t} \, dt = 1$. This follows directly from the definition of $\exp$: $f(e)=f(\exp(1))=1$. And since $\log_a(x)$ is defined as the inverse of $a^x$, it is evident that $\log_e(x)$ is the inverse of $e^x$. But we know that the inverse of $\exp(x)$ is $f(x)=\int_{1}^{x}\frac{1}{t} \, dt$, and putting these statements together, we find that

$$
\log_{e}(x) = \int_{1}^{x}\frac{1}{t} \, dt \, .
$$

In fact, this logarithm is so important, so natural, that we often dispense with the base $e$ entirely and write

$$
\log(x) = \int_{1}^{x}\frac{1}{t} \, dt \, .
$$
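The geometric characterisation of $e$ (the number $a$ with $\int_1^a\frac{dt}{t}=1$) suggests a direct way to compute it: solve that equation by bisection. This is a minimal Python sketch assuming composite Simpson's rule with 2000 subintervals is accurate enough; the function names are hypothetical, and `math.e` and `math.log` serve only as checks.

```python
import math

def area_under_hyperbola(a: float, n: int = 2000) -> float:
    """Composite Simpson approximation of log(a) = integral from 1 to a of dt/t (n even)."""
    h = (a - 1) / n
    total = 1 + 1 / a  # values of 1/t at t = 1 and t = a
    for i in range(1, n):
        total += (4 if i % 2 else 2) / (1 + i * h)
    return total * h / 3

def find_e(tol: float = 1e-13) -> float:
    """Bisection for the number a with area_under_hyperbola(a) == 1."""
    lo, hi = 2.0, 3.0  # the area is about 0.69 at a = 2 and about 1.10 at a = 3
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if area_under_hyperbola(mid) < 1:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

print(find_e(), math.e)                                # e from the area condition
print(area_under_hyperbola(10.0), math.log(10.0))      # log as an integral
```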

Earlier, we mentioned the difficulty of defining $a^x$ when $x$ is an irrational number. The logarithm solves this problem. For $a>0$ we have $a=e^{\log(a)}$, and since the rule $(e^u)^x=e^{ux}$ holds for rational $x$, we know that for rational $x$

$$
a^x = e^{x\log(a)} \, .
$$

But the RHS makes sense for all real $x$, and so we may define

$$
a^x = e^{x\log(a)} \, ,
$$

for $a>0$. And defining exponents in this way means that

$$
a^x \cdot a^y = a^{x+y}
$$

still holds even for irrational exponents! The proof of this fact follows smoothly if we unravel the definition of $a^x$:

$$
a^x = e^{x\log(a)} = \exp(x\log(a)) \, ,
$$

where $\exp(x)=\log^{-1}(x)$ and $\log(x) = \int_{1}^{x}\frac{1}{t} \, dt$. Using the product rule for $\exp$, we get $a^x \cdot a^y = \exp(x\log(a)) \cdot \exp(y\log(a)) = \exp((x+y)\log(a)) = a^{x+y}$. Armed with this knowledge, we return to the differential equation we were trying to solve earlier, and finally obtain the solution we were expecting.

\begin{align}
\frac{dy}{dx} &= ky \\
\int k \, dx &= \int\frac{1}{y} \, dy \\
kx + C &= \log(y) \\
y&=e^{kx+C} = e^{kx} \cdot e^{C} = Ae^{kx} \text{ where $A=e^C$}
\end{align}
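As a sanity check on $y=Ae^{kx}$, one can integrate $\frac{dy}{dx}=ky$ numerically with the forward Euler method and compare against the closed form. This Python sketch uses `math.exp` as a stand-in for $\exp$; the helper `euler_solve` is hypothetical, and the values of $k$, $A$ and the interval are arbitrary choices for illustration.

```python
import math

def euler_solve(k: float, y0: float, x_end: float, steps: int) -> float:
    """Integrate dy/dx = k*y from x = 0 to x_end with y(0) = y0, using forward Euler."""
    h = x_end / steps
    y = y0
    for _ in range(steps):
        y += h * k * y  # one Euler step: y_{n+1} = y_n + h * (k * y_n)
    return y

k, A, x_end = 0.7, 3.0, 2.0
closed_form = A * math.exp(k * x_end)  # y = A e^{kx}, where A = y(0) = e^C
for steps in (10, 100, 1000, 10000):
    approx = euler_solve(k, A, x_end, steps)
    print(steps, approx, abs(approx - closed_form))
```

Since Euler's method has error roughly proportional to the step size, the printed error should shrink by about a factor of ten each time the number of steps grows tenfold.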

Since $a^x$ is simply $e$ raised to the power $x\log(a)$, functions such as $2^x$ are also included in the solution set. In particular,

$$
\frac{d}{dx}(a^x) = \frac{d}{dx}(e^{x\log(a)}) = e^{x\log(a)} \cdot \log(a) = a^x \cdot \log(a) \, ,
$$

and so $k=\log(a)$. Note that the derivative of $e^x$ is simply a special case of this where $\log(e)=1$. Hopefully, this provides the motivation behind defining the logarithm as $\int_{1}^{x}\frac{1}{t} \, dt$.
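The definition $a^x=e^{x\log(a)}$ translates directly into code. This Python sketch uses `math.exp` and `math.log` for $\exp$ and $\log$; `power` and `derivative` are hypothetical helper names, and the central-difference step `h = 1e-6` is an assumption chosen to balance truncation and rounding error. It evaluates $2^{\sqrt{2}}$, checks $a^x\cdot a^y=a^{x+y}$ for non-integer exponents, and estimates $\frac{d}{dx}(a^x)/a^x$, which should be close to $\log(a)$ at every $x$.

```python
import math

def power(a: float, x: float) -> float:
    """a**x defined as exp(x * log(a)); valid for a > 0 and any real x."""
    if a <= 0:
        raise ValueError("base must be positive")
    return math.exp(x * math.log(a))

def derivative(g, x: float, h: float = 1e-6) -> float:
    """Central-difference estimate of g'(x)."""
    return (g(x + h) - g(x - h)) / (2 * h)

a = 2.0
print(power(a, math.sqrt(2)), power(a, 1.41421))     # 2^sqrt(2) versus the 'intuitive' approximation
print(power(a, 0.3) * power(a, 1.1), power(a, 1.4))  # a^x * a^y versus a^(x+y)
for x in (0.0, 1.0, math.pi):
    ratio = derivative(lambda t: power(a, t), x) / power(a, x)
    print(x, ratio, math.log(a))  # the ratio should be close to log(a) for every x
```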

There is another way of understanding why functions of the form $g(x)=a^x$ grow in the way they do. If we differentiate $a^x$ with respect to $x$ from first principles, we get

\begin{align}
\lim_{\Delta x \to 0}\frac{a^{x+\Delta x}-a^x}{\Delta x} &= a^x \cdot \lim_{\Delta x \to 0}\frac{a^{\Delta x} - 1}{\Delta x} \\
&= a^x \cdot g'(0) \, .
\end{align}

In other words, provided this limit exists, the growth rate is proportional to the value of $a^x$ at any given point, with the gradient at $x=0$ being the proportionality constant. The natural logarithm comes in handy here:

$$
g'(0) = \lim_{\Delta x \to 0}\frac{a^{\Delta x} - 1}{\Delta x} \, .
$$

Exercise: prove that this limit is equal to $\log(a)$. This is tricky if you want to avoid using L'Hôpital's rule.
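Before attempting the exercise, it can be reassuring to watch the difference quotient approach $\log(a)$ numerically. This Python sketch is evidence, not a proof; `difference_quotient` is a hypothetical helper, and it relies on Python's built-in `**` operator and `math.log`.

```python
import math

def difference_quotient(a: float, h: float) -> float:
    """(a**h - 1) / h, whose limit as h -> 0 is the derivative of a**x at x = 0."""
    return (a ** h - 1) / h

for a in (2.0, 10.0, 0.5):
    print(f"a = {a}: log(a) = {math.log(a):.10f}")
    for k in range(1, 8):
        h = 10.0 ** -k
        print(f"  h = 1e-{k}: {difference_quotient(a, h):.10f}")
```

Pushing $h$ much below about $10^{-8}$ makes the estimate worse rather than better, because $a^h-1$ then suffers from cancellation in floating-point arithmetic.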