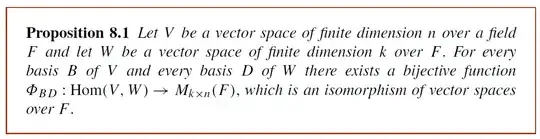

Part of the problem is that Proposition 8.1 is not a definition. It doesn't tell you what $\Phi_{BD}$ is, or how to compute it. It simply asserts existence.

It's also not particularly well-stated as a proposition, since it asserts the existence of a family of isomorphisms indexed by pairs of bases $(B, D)$ of $V$ and $W$ respectively, but doesn't specify any way in which these isomorphisms differ. If you could find just one of the (infinitely many) isomorphisms between $\operatorname{Hom}(V, W)$ and $M_{k \times n}(F)$ (call it $\phi$), then letting $\Phi_{BD} = \phi$ for every pair $(B, D)$ would technically satisfy the proposition, and constitute a proof!

Fortunately, I do know what the proposition is getting at. There is a very natural map $\Phi_{BD}$, taking a linear map $\alpha : V \to W$, to a $k \times n$ matrix.

The fundamental idea behind this map is that a linear map is entirely determined by its action on a basis. Let's say you have a linear map $\alpha : V \to W$, and a basis $B = (v_1, \ldots, v_n)$ of $V$, so every vector $v \in V$ can be expressed uniquely as a linear combination of $v_1, \ldots, v_n$. If we know the values of $\alpha(v_1), \ldots, \alpha(v_n)$, then linearity determines $\alpha(v)$ for every $v$. The process involves first finding the unique $a_1, \ldots, a_n \in F$ such that

$$v = a_1 v_1 + \ldots + a_n v_n.$$

Then, using linearity,

$$\alpha(v) = \alpha(a_1 v_1 + \ldots + a_n v_n) = a_1 \alpha(v_1) + \ldots + a_n \alpha(v_n).$$

As an example of this principle in action, let's say that you had a linear map $\alpha : \Bbb{R}^2 \to \Bbb{R}^3$, and all you knew about $\alpha$ was that $\alpha(1, 1) = (2, -1, 1)$ and $\alpha(1, -1) = (0, 0, 4)$. What would be the value of $\alpha(2, 4)$?

To solve this, first express

$$(2, 4) = 3(1, 1) - 1(1, -1)$$

(note that this linear combination is unique, since $((1, 1), (1, -1))$ is a basis for $\Bbb{R}^2$, and we could have done something similar for any vector, not just $(2, 4)$). Then,

$$\alpha(2, 4) = 3\alpha(1, 1) - 1 \alpha(1, -1) = 3(2, -1, 1) - 1(0, 0, 4) = (6, -3, -1).$$
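To check arithmetic like this mechanically, here is a minimal Python sketch. The helper names `coords_2d` and `apply_from_basis_images` are made up for illustration, and the coordinate solve uses Cramer's rule, which is just one convenient choice for a $2 \times 2$ system.

```python
from fractions import Fraction

def coords_2d(v, b1, b2):
    """Coordinates (a1, a2) with v = a1*b1 + a2*b2, via Cramer's rule."""
    det = b1[0] * b2[1] - b2[0] * b1[1]
    if det == 0:
        raise ValueError("b1, b2 do not form a basis of the plane")
    a1 = Fraction(v[0] * b2[1] - b2[0] * v[1], det)
    a2 = Fraction(b1[0] * v[1] - v[0] * b1[1], det)
    return a1, a2

def apply_from_basis_images(v, basis, images):
    """Evaluate a linear map at v, knowing only its values on a basis."""
    a1, a2 = coords_2d(v, basis[0], basis[1])
    # Linearity: alpha(a1*b1 + a2*b2) = a1*alpha(b1) + a2*alpha(b2).
    return tuple(a1 * x + a2 * y for x, y in zip(images[0], images[1]))

basis = ((1, 1), (1, -1))
images = ((2, -1, 1), (0, 0, 4))   # alpha(1, 1) and alpha(1, -1)
result = apply_from_basis_images((2, 4), basis, images)
print(coords_2d((2, 4), *basis))   # coordinates 3 and -1
print(result)                      # entries 6, -3, -1
```

The coordinates come out as $3$ and $-1$, since $3(1, 1) - (1, -1) = (2, 4)$, and the value of the map is $(6, -3, -1)$.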

There is a converse to this principle too: if you start with a basis $(v_1, \ldots, v_n)$ for $V$, and pick an arbitrary list of vectors $(w_1, \ldots, w_n)$ from $W$ (not necessarily a basis), then there exists a unique linear transformation $\alpha : V \to W$ such that $\alpha(v_i) = w_i$. So, you don't even need to assume an underlying linear transformation exists! Just map the basis vectors wherever you want in $W$, without restriction, and there will be a (unique) linear map that maps the basis in this way.

That is, if we fix a basis $B = (v_1, \ldots, v_n)$ of $V$, then we can make a bijective correspondence between the linear maps from $V$ to $W$, and lists of $n$ vectors in $W$. The map

$$\operatorname{Hom}(V, W) \to W^n : \alpha \mapsto (\alpha(v_1), \ldots, \alpha(v_n))$$

is bijective. This is related to the $\Phi$ maps, but we still need to go one step further.

Now, let's take a basis $D = (w_1, \ldots, w_m)$ of $W$ (so $m = \dim W$, the number called $k$ above). That is, each vector in $W$ can be uniquely written as a linear combination of $w_1, \ldots, w_m$. So, we have a natural map taking a vector

$$w = b_1 w_1 + \ldots + b_m w_m$$

to its coordinate column vector

$$[w]_D = \begin{bmatrix} b_1 \\ \vdots \\ b_m \end{bmatrix}.$$

This map is an isomorphism between $W$ and $F^m$; we lose no information if we choose to express vectors in $W$ this way.

So, if we can express linear maps $\alpha : V \to W$ as a list of vectors in $W$, we could just as easily write this list of vectors in $W$ as a list of coordinate column vectors in $F^m$. Instead of thinking about $(\alpha(v_1), \ldots, \alpha(v_n))$, think about

$$([\alpha(v_1)]_D, \ldots, [\alpha(v_n)]_D).$$

Equivalently, this list of $n$ column vectors could be thought of as a matrix:

$$\left[\begin{array}{c|c|c} & & \\ [\alpha(v_1)]_D & \cdots & [\alpha(v_n)]_D \\ & & \end{array}\right].$$

This matrix is $\Phi_{BD}(\alpha)$! The procedure can be summed up as follows:

1. Compute $\alpha$ applied to each basis vector in $B$ (i.e. compute $\alpha(v_1), \ldots, \alpha(v_n)$), then

2. Compute the coordinate column vector of each of these transformed vectors with respect to the basis $D$ (i.e. $[\alpha(v_1)]_D, \ldots, [\alpha(v_n)]_D$), and finally,

3. Put these column vectors into a single matrix.

Note that step 2 typically takes the longest. For each $\alpha(v_i)$, you need to find (somehow) the scalars $b_{i1}, \ldots, b_{im}$ such that

$$\alpha(v_i) = b_{i1} w_1 + \ldots + b_{im} w_m$$

where $D = (w_1, \ldots, w_m)$ is the basis for $W$. How to solve this will depend on what $W$ consists of (e.g. $k$-tuples of real numbers, polynomials, matrices, functions, etc), but it will almost always reduce to solving a system of linear equations over the field $F$.
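The three steps can be sketched in Python for the special case where $W = F^m$ and vectors are plain tuples of rationals. Everything named here is an assumption made for illustration: the helpers `coords` and `matrix_of`, the sample map $\alpha(x, y) = (x + y, x - y)$, and the bases $B$ and $D$. The linear system in step 2 is solved by Gauss-Jordan elimination with exact fractions.

```python
from fractions import Fraction

def coords(vec, basis):
    """Solve c_1*basis[0] + ... + c_m*basis[m-1] = vec for (c_1, ..., c_m).

    Assumes basis really is a basis of F^m; otherwise the pivot search fails.
    """
    m = len(basis)
    # Augmented matrix: column j is basis[j], the last column is vec.
    rows = [[Fraction(basis[j][i]) for j in range(m)] + [Fraction(vec[i])]
            for i in range(m)]
    for col in range(m):
        pivot = next(r for r in range(col, m) if rows[r][col] != 0)
        rows[col], rows[pivot] = rows[pivot], rows[col]
        p = rows[col][col]
        rows[col] = [x / p for x in rows[col]]
        for r in range(m):
            if r != col:
                factor = rows[r][col]
                rows[r] = [x - factor * y for x, y in zip(rows[r], rows[col])]
    return [rows[i][m] for i in range(m)]

def matrix_of(alpha, B, D):
    """Matrix whose j-th column is the D-coordinate vector of alpha(B[j])."""
    columns = [coords(alpha(v), D) for v in B]   # steps 1 and 2
    return [list(row) for row in zip(*columns)]  # step 3: columns -> rows

# Hypothetical example map and bases, chosen only for illustration.
alpha = lambda v: (v[0] + v[1], v[0] - v[1])
B = [(1, 1), (1, -1)]
D = [(1, 0), (1, 1)]
M = matrix_of(alpha, B, D)
print(M)  # rows with entries 2, -2 and 0, 2
```

Here $\alpha(1, 1) = (2, 0) = 2(1, 0) + 0(1, 1)$ and $\alpha(1, -1) = (0, 2) = -2(1, 0) + 2(1, 1)$, which are exactly the two columns of the result.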

As for why we represent linear maps this way, I think you'd better read further in your textbook. It essentially comes down to the fact that, given any $v \in V$,

$$[\alpha(v)]_D = \Phi_{BD}(\alpha) \cdot [v]_B,$$

which reduces the (potentially complex) process of applying an abstract linear transformation to an abstract vector $v \in V$ down to simple matrix multiplication over $F$. I discuss this (with different notation) in this answer, but I suggest looking through your book first. Also, this answer has a nice diagram, but different notation again.
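As a small sanity check of this identity, take the earlier map with $\alpha(1, 1) = (2, -1, 1)$ and $\alpha(1, -1) = (0, 0, 4)$, with $B = ((1, 1), (1, -1))$ and $D$ the standard basis of $\Bbb{R}^3$ (a choice of mine, so that $[\,\cdot\,]_D$ is just "write the vector as a column"). The helper `mat_vec` below is a hypothetical name for ordinary matrix-vector multiplication.

```python
def mat_vec(matrix, vector):
    """Multiply a matrix (given as a list of rows) by a column vector."""
    return [sum(a * x for a, x in zip(row, vector)) for row in matrix]

# Columns are [alpha(1, 1)]_D and [alpha(1, -1)]_D, with D the standard basis.
phi = [[2, 0],
       [-1, 0],
       [1, 4]]

# [v]_B for v = (2, 4), because (2, 4) = 3*(1, 1) - 1*(1, -1).
v_B = [3, -1]

result = mat_vec(phi, v_B)
print(result)  # [6, -3, -1]
```

The product is the coordinate vector of $\alpha(2, 4) = 3(2, -1, 1) - (0, 0, 4)$, obtained without ever evaluating $\alpha$ directly.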

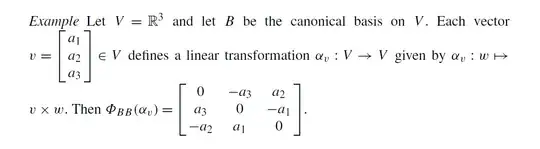

So, let's get into your example. In this case, $B = D = ((1, 0, 0), (0, 1, 0), (0, 0, 1))$, a basis for $V = W = \Bbb{R}^3$. We have a fixed vector $w = (w_1, w_2, w_3)$ (which is $v$ in the question, but I've chosen to change it to $w$ and keep $v$ as our dummy variable). Our linear map is $\alpha_w : \Bbb{R}^3 \to \Bbb{R}^3$ such that $\alpha_w(v) = w \times v$. Let's follow the steps.

First, we compute $\alpha_w(1, 0, 0), \alpha_w(0, 1, 0), \alpha_w(0, 0, 1)$:

\begin{align*}
\alpha_w(1, 0, 0) &= (w_1, w_2, w_3) \times (1, 0, 0) = (0, w_3, -w_2) \\
\alpha_w(0, 1, 0) &= (w_1, w_2, w_3) \times (0, 1, 0) = (-w_3, 0, w_1) \\
\alpha_w(0, 0, 1) &= (w_1, w_2, w_3) \times (0, 0, 1) = (w_2, -w_1, 0).
\end{align*}

Second, we need to write these vectors as coordinate column vectors with respect to $B$. Fortunately, $B$ is the standard basis; we always have, for any $v = (a, b, c) \in \Bbb{R}^3$,

$$(a, b, c) = a(1, 0, 0) + b(0, 1, 0) + c(0, 0, 1) \implies [(a, b, c)]_B = \begin{bmatrix} a \\ b \\ c\end{bmatrix}.$$

In other words, we essentially just transpose these vectors to columns, giving us

$$\begin{bmatrix} 0 \\ w_3 \\ -w_2\end{bmatrix}, \begin{bmatrix} -w_3 \\ 0 \\ w_1\end{bmatrix}, \begin{bmatrix} w_2 \\ -w_1 \\ 0\end{bmatrix}.$$

Last step: put these in a matrix:

$$\Phi_{BB}(\alpha_w) = \begin{bmatrix} 0 & -w_3 & w_2 \\ w_3 & 0 & -w_1 \\ -w_2 & w_1 & 0 \end{bmatrix}.$$
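If you want to convince yourself that this matrix really does compute $w \times v$, a quick numerical check is easy to write. This is only a sketch: the function names and the sample vectors $w = (1, 2, 3)$, $v = (4, 5, 6)$ are arbitrary choices of mine.

```python
def cross(a, b):
    """Cross product of two vectors in R^3."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def cross_matrix(w):
    """The matrix of v -> w x v with respect to the standard basis."""
    w1, w2, w3 = w
    return [[0, -w3, w2],
            [w3, 0, -w1],
            [-w2, w1, 0]]

def mat_vec(matrix, vector):
    """Multiply a matrix (given as a list of rows) by a column vector."""
    return tuple(sum(a * x for a, x in zip(row, vector)) for row in matrix)

w = (1, 2, 3)
v = (4, 5, 6)
direct = cross(w, v)
via_matrix = mat_vec(cross_matrix(w), v)
print(direct, via_matrix)  # (-3, 6, -3) (-3, 6, -3)
```

Both routes give $(-3, 6, -3)$, as the identity $[\alpha(v)]_D = \Phi_{BD}(\alpha) \cdot [v]_B$ predicts.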