If we view the determinant as the amount of space enclosed by the columns of a matrix, it is geometrically clear why column operations (adding a multiple of one column to another) don't change the determinant.

Adding one column to another only skews the parallelepiped; it changes neither its base nor its height.

$$\det(\vec{a}, \vec{b}, \vec{c}) = \det(\vec{a}, \vec{b}, (\vec{c}+\vec{b})).$$

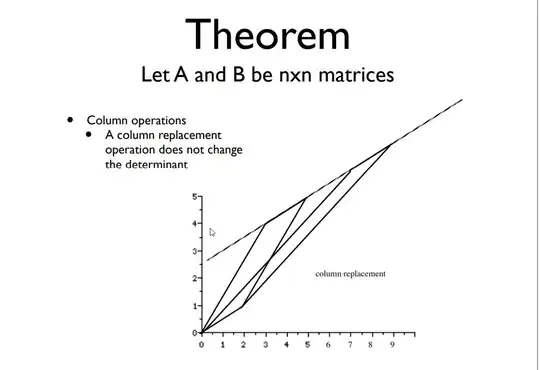

Here's a nice graphic showing it for parallelograms:

However, row operations don't change the determinant either.

Now, the three most common answers I've seen for why row operations don't change the determinant are these:

1. Since the determinant of a matrix equals the determinant of its transpose (which is proven in many different ways, sometimes geometrically, as in a few beautiful answers to Geometric interpretation of $\det(A^T) = \det(A)$), and column operations don't change the determinant, row operations won't either, since they are column operations on the transpose.

2. If I have two matrices, $R$ and $A$, then $\det(RA)=\det(R)\det(A)$. Geometrically, $\det(A)$ is the factor by which $A$ scales the space enclosed by a whole bunch of vectors when we multiply them all by it, and likewise $\det(R)$ is the factor by which $R$ scales the space enclosed by a bunch of vectors when we multiply them by it. Since $R$ scales space in the same way regardless of whether the vectors were first transformed by $A$, multiplying by $A$ and then by $R$ scales space by the product of the factor from $A$ and the factor from $R$. A row operation on $A$ (adding a multiple of one row to another) corresponds to multiplying $A$ on the left by a matrix $R$ with determinant $1$; thus $\det(RA)=\det(R)\det(A)=\det(A)$.

3. "Why are you considering the columns to be the vectors? You're crazy, man, the ROWS are the vectors!"
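The first two answers can be checked numerically on a single example. Below is a minimal pure-Python sketch, assuming $3\times 3$ integer matrices stored as lists of rows; the helpers `det3`, `transpose`, `matmul` and the matrices `A` and `R` are hypothetical, chosen only for illustration. `R` is the identity with a $-1$ in position $(3,1)$, so left-multiplying by it performs the row operation $R_3 \leftarrow R_3 - R_1$.

```python
# Numerical check of answers 1 and 2 on one example (pure Python, integer entries).

def det3(m):
    """Determinant of a 3x3 matrix (list of rows), cofactor expansion along row 0."""
    (a, b, c), (d, e, f), (g, h, i) = m
    return a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)

def transpose(m):
    """Swap rows and columns."""
    return [list(col) for col in zip(*m)]

def matmul(p, q):
    """Matrix product p q for matrices given as lists of rows."""
    return [[sum(p[i][t] * q[t][j] for t in range(len(q)))
             for j in range(len(q[0]))]
            for i in range(len(p))]

A = [[2, 1, 0],
     [0, 3, 1],
     [1, 0, 4]]

# Elementary matrix for the row operation R3 <- R3 - R1 (1-based rows).
R = [[1, 0, 0],
     [0, 1, 0],
     [-1, 0, 1]]
RA = matmul(R, A)

# Answer 1: det(A) == det(A^T); the row operation on A is a column
# operation on A^T (column 1 of A^T subtracted from column 3).
print(det3(A), det3(transpose(A)))  # 25 25
# Answer 2: det(RA) == det(R) * det(A), with det(R) == 1.
print(det3(R), det3(RA))            # 1 25
```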

All three are fine explanations (I hope I understood them correctly), but none of them is satisfying to me geometrically.

Admittedly, it's probably my fault for not yet understanding them intuitively enough.

The first one, because it depends entirely on the property that the transpose of a matrix has the same determinant as the original matrix. But I don't want to talk about the transpose; I want to keep considering the columns of our original matrix, not its rows, to be the vectors.

The second, because it stops referring to the determinant as the space enclosed by the columns of the matrix, and instead refers to it as the factor by which space gets scaled when we use $A$ as a transformation. Although the connection seems obvious to everyone, I'm still having a bit of a hard time grasping why those two (the space enclosed by the columns of $A$, and the factor by which $A$ scales space when we use it as a transformation on a whole bunch of vectors) are the same.
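One way to see the link between the two readings, sketched as a worked derivation rather than a full proof: a $3\times 3$ matrix $A$ with columns $\vec{a}_1, \vec{a}_2, \vec{a}_3$ sends each standard basis vector $\vec{e}_j$ to its $j$-th column, so by linearity it sends the unit cube to the parallelepiped spanned by the columns.

$$
\begin{aligned}
A &= \begin{pmatrix} \vec{a}_1 & \vec{a}_2 & \vec{a}_3 \end{pmatrix}, \qquad A\vec{e}_j = \vec{a}_j \quad (j = 1, 2, 3),\\
A\bigl([0,1]^3\bigr) &= \{\, t_1\vec{a}_1 + t_2\vec{a}_2 + t_3\vec{a}_3 : 0 \le t_1, t_2, t_3 \le 1 \,\},\\
\frac{\operatorname{vol} A\bigl([0,1]^3\bigr)}{\operatorname{vol}\,[0,1]^3} &= \operatorname{vol}\bigl(\text{parallelepiped spanned by } \vec{a}_1, \vec{a}_2, \vec{a}_3\bigr).
\end{aligned}
$$

Because $A(\vec{p} + h\vec{x}) = A\vec{p} + hA\vec{x}$, every small cube $\vec{p} + h[0,1]^3$ of volume $h^3$ is sent to a translated copy of $h\,A\bigl([0,1]^3\bigr)$, whose volume is $h^3$ times that same ratio. Any region built from (or approximated by) such cubes is therefore scaled by the same factor, ignoring the sign, which records orientation.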

And finally, the third because if I were to consider the rows to be the vectors, then I would still have the same question but for column operations instead.

So, here I'll repeat my question:

Is there a satisfying geometric reason (such as one column being added to another corresponding to skewing the parallelogram for column operations) why the determinant doesn't change after row operations on a matrix?

And I want the answer to consider the determinant solely as the space enclosed by the columns of a matrix (so no connections to transformations, transposes, or anything else: all we have is a parallelepiped or parallelogram, and we're going to add components of some of its sides in one direction to its other sides).
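One concrete reading of this setup, in the 2D case: the row operation "add $k$ times row 1 to row 2" changes every column (side) $\vec{w}$ of the matrix by adding $k$ times its first component to its second component. The pure-Python sketch below applies that rule to both sides of a parallelogram and compares the signed areas before and after. The helper names `area` and `row_op_on_column`, and the example sides, are hypothetical.

```python
# Sketch: what a row operation does to each side (column) of a parallelogram.

def area(u, v):
    """Signed area of the parallelogram with sides u and v (2D column vectors)."""
    return u[0] * v[1] - u[1] * v[0]

def row_op_on_column(w, src, dst, k):
    """Apply 'add k * row src to row dst' (0-based) to a single column w:
    its dst component gains k times its own src component."""
    out = list(w)
    out[dst] += k * w[src]
    return tuple(out)

u, v = (3, 1), (1, 2)  # hypothetical sides, i.e. the columns of [[3, 1], [1, 2]]
k = 4

# "Add 4 times row 1 to row 2" (rows 0 and 1 in 0-based indexing).
u2 = row_op_on_column(u, src=0, dst=1, k=k)  # (3, 13)
v2 = row_op_on_column(v, src=0, dst=1, k=k)  # (1, 6)

print(area(u, v), area(u2, v2))  # 5 5
```

Each side keeps its first component; only its second component changes, and by an amount proportional to that same side's first component.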

Thank you for reading. I know that I'm asking a question that has been asked before on this site, so I really appreciate that you've read this far.

It's just that I still feel the kind of geometric answer I'm looking for hasn't been given yet.