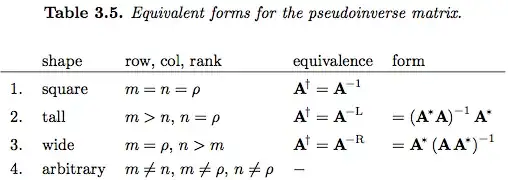

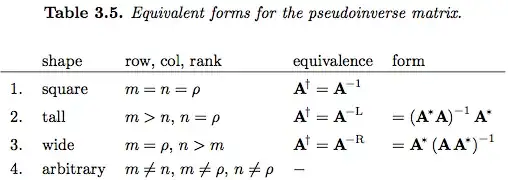

Details in *What forms does the Moore-Penrose inverse take under systems with full rank, full column rank, and full row rank?*

Summary

Given a matrix $\mathbf{A} \in \mathbb{C}^{m\times n}_{\rho}$ of rank $\rho\ge 1$, the singular value decomposition exists and can be used to construct the pseudoinverse matrix $\mathbf{A}^{\dagger}$.

Block decomposition: general case

The block decompositions for the target matrix and the Moore-Penrose pseudoinverse are

$$

\begin{align}

\mathbf{A} &= \mathbf{U} \, \Sigma \, \mathbf{V}^{*}

=

% U

\left[ \begin{array}{cc}

\color{blue}{\mathbf{U}_{\mathcal{R}(\mathbf{A})}} & \color{red}{\mathbf{U}_{\mathcal{N}(\mathbf{A}^{*})}}

\end{array} \right]

% Sigma

\left[ \begin{array}{cc}

\mathbf{S} & \mathbf{0} \\

\mathbf{0} & \mathbf{0}

\end{array} \right]

% V

\left[ \begin{array}{l}

\color{blue}{\mathbf{V}_{\mathcal{R}(\mathbf{A}^{*})}^{*}} \\

\color{red}{\mathbf{V}_{\mathcal{N}(\mathbf{A})}^{*}}

\end{array} \right]

\\

%%

\mathbf{A}^{\dagger} &= \mathbf{V} \, \Sigma^{\dagger} \, \mathbf{U}^{*}

=

% V

\left[ \begin{array}{cc}

\color{blue}{\mathbf{V}_{\mathcal{R}(\mathbf{A}^{*})}} &

\color{red}{\mathbf{V}_{\mathcal{N}(\mathbf{A})}}

\end{array} \right]

% Sigma

\left[ \begin{array}{cc}

\mathbf{S}^{-1} & \mathbf{0} \\

\mathbf{0} & \mathbf{0}

\end{array} \right]

% U^*

\left[ \begin{array}{l}

\color{blue}{\mathbf{U}_{\mathcal{R}(\mathbf{A})}^{*}} \\

\color{red}{\mathbf{U}_{\mathcal{N}(\mathbf{A}^{*})}^{*}}

\end{array} \right]

\end{align}

$$
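As a numerical sanity check of the general block formula, here is a minimal NumPy sketch that keeps only the $\rho$ nonzero singular values and forms $\mathbf{A}^{\dagger} = \mathbf{V}_{\mathcal{R}}\,\mathbf{S}^{-1}\,\mathbf{U}_{\mathcal{R}}^{*}$. The helper name `pinv_via_svd`, the relative rank tolerance `rtol=1e-12`, and the example matrix are assumptions chosen for illustration. The check uses the four Penrose conditions rather than trusting any one library default.

```python
import numpy as np

def pinv_via_svd(A, rtol=1e-12):
    """Hypothetical helper: A^dagger = V_R S^{-1} U_R^* from the full SVD A = U Sigma V^*."""
    U, s, Vh = np.linalg.svd(A)        # full SVD: U is m x m, Vh is n x n, s sorted descending
    rho = int(np.sum(s > rtol * s[0])) # numerical rank (assumes A != 0, i.e. rho >= 1)
    U_R = U[:, :rho]                   # orthonormal basis for R(A)
    V_R = Vh[:rho, :].conj().T         # orthonormal basis for R(A^*)
    S_inv = np.diag(1.0 / s[:rho])     # invert only the nonzero singular values
    return V_R @ S_inv @ U_R.conj().T

# Rank-deficient 3 x 3 example: third column = first + second, so rho = 2
A = np.array([[1.0, 2.0, 3.0],
              [4.0, 5.0, 9.0],
              [7.0, 8.0, 15.0]])
A_dag = pinv_via_svd(A)

# The four Penrose conditions characterize the Moore-Penrose inverse
print(np.allclose(A @ A_dag @ A, A),
      np.allclose(A_dag @ A @ A_dag, A_dag),
      np.allclose((A @ A_dag).conj().T, A @ A_dag),
      np.allclose((A_dag @ A).conj().T, A_dag @ A))
```

Truncating at a tolerance matters here: the third singular value is zero only up to round-off, and inverting it would swamp the result.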

Blue entities live in range spaces, red in null spaces.
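To see the coloring concretely, one can slice the full SVD into the four blocks and confirm that the red blocks really are null-space bases. This is a sketch assuming NumPy. The helper name `fundamental_bases`, the tolerance, and the 4 x 3 rank-2 example (chosen so that all four blocks are nonempty) are illustrative assumptions.

```python
import numpy as np

def fundamental_bases(A, rtol=1e-12):
    """Hypothetical helper: split the full SVD of A into blue (range) and red (null) blocks."""
    U, s, Vh = np.linalg.svd(A)
    rho = int(np.sum(s > rtol * s[0]))
    V = Vh.conj().T
    return {
        "U_R": U[:, :rho],  # blue: orthonormal basis for R(A)
        "U_N": U[:, rho:],  # red:  orthonormal basis for N(A^*)
        "V_R": V[:, :rho],  # blue: orthonormal basis for R(A^*)
        "V_N": V[:, rho:],  # red:  orthonormal basis for N(A)
    }

# 4 x 3 matrix of rank 2 (third column = first - second)
A = np.array([[1.0, 0.0, 1.0],
              [0.0, 1.0, -1.0],
              [1.0, 1.0, 0.0],
              [2.0, 1.0, 1.0]])
B = fundamental_bases(A)
print({k: v.shape for k, v in B.items()})
# A kills N(A); A^* kills N(A^*)
print(np.allclose(A @ B["V_N"], 0), np.allclose(A.conj().T @ B["U_N"], 0))
```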

The least squares solutions sort into special cases according to which null spaces are trivial.

Special cases

Square: Both null spaces are trivial: full row rank, full column rank

$$ m = n = \rho: \qquad \mathbf{A}^{\dagger} = \mathbf{A}^{-1}$$

Block structures:

$$

\begin{array}{ccccc}

\mathbf{A} &=

&\color{blue}{\mathbf{U_{\mathcal{R}}}}

&\mathbf{S}

&\color{blue}{\mathbf{V_{\mathcal{R}}^{*}}} \\

\mathbf{A}^{\dagger} &=

&\color{blue}{\mathbf{V_{\mathcal{R}}}}

&\mathbf{S}^{-1}

&\color{blue}{\mathbf{U_{\mathcal{R}}^{*}}}

\end{array}

$$

Verify the classic inverse:

$$\mathbf{A}^{\dagger}\mathbf{A} = \mathbf{A}\mathbf{A}^{\dagger} = \mathbf{I}_{n}$$
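A quick numerical check of the square case, as a sketch assuming NumPy, with an invertible $2\times 2$ example chosen for illustration:

```python
import numpy as np

A = np.array([[2.0, 1.0],
              [1.0, 3.0]])          # det = 5, so m = n = rho = 2
A_dag = np.linalg.pinv(A)

print(np.allclose(A_dag, np.linalg.inv(A)))   # pseudoinverse coincides with the classic inverse
print(np.allclose(A_dag @ A, np.eye(2)), np.allclose(A @ A_dag, np.eye(2)))
```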

Tall: Only $\color{red}{\mathcal{N}_{\mathbf{A}^{*}}}$ is nontrivial: full column rank

$$ m > n, n = \rho: \qquad \mathbf{A}^{\dagger} = \mathbf{A}^{-L}$$

Block structures:

$$

\begin{align}

%

\mathbf{A} & =

%

\left[ \begin{array}{cc}

\color{blue}{\mathbf{U_{\mathcal{R}}}} &

\color{red}{\mathbf{U_{\mathcal{N}}}}

\end{array} \right]

%

\left[ \begin{array}{c}

\mathbf{S} \\

\mathbf{0}

\end{array} \right]

%

\color{blue}{\mathbf{V_{\mathcal{R}}^{*}}}

\\

% Apinv

\mathbf{A}^{\dagger} & =

%

\color{blue}{\mathbf{V_{\mathcal{R}}}} \,

\left[ \begin{array}{cc}

\mathbf{S}^{-1} &

\mathbf{0}

\end{array} \right]

%

\left[ \begin{array}{c}

\color{blue}{\mathbf{U_{\mathcal{R}}^{*}}} \\

\color{red}{\mathbf{U_{\mathcal{N}}^{*}}}

\end{array} \right]

\end{align}

$$
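The tall block structure can be assembled directly from a full SVD. This NumPy sketch assumes a random complex $5\times 3$ matrix, which has full column rank with probability 1. It builds $[\mathbf{S};\,\mathbf{0}]$ and $[\mathbf{S}^{-1}\;\,\mathbf{0}]$ explicitly. The zero block in $\Sigma^{\dagger}$ is what discards $\mathbf{U}_{\mathcal{N}}^{*}$.

```python
import numpy as np

rng = np.random.default_rng(0)
m, n = 5, 3
# Random complex tall matrix: full column rank (rho = n) with probability 1
A = rng.standard_normal((m, n)) + 1j * rng.standard_normal((m, n))

U, s, Vh = np.linalg.svd(A)                  # full SVD: U is m x m, Vh is n x n
V_R = Vh.conj().T                            # rho = n, so V_R is all of V

Sigma = np.zeros((m, n), dtype=complex)      # [S; 0]
Sigma[:n, :] = np.diag(s)
Sigma_dag = np.zeros((n, m), dtype=complex)  # [S^{-1}  0]
Sigma_dag[:, :n] = np.diag(1.0 / s)

A_rebuilt = U @ Sigma @ V_R.conj().T         # [U_R  U_N] [S; 0] V_R^*
A_dag = V_R @ Sigma_dag @ U.conj().T         # V_R [S^{-1}  0] [U_R^*; U_N^*]
print(np.allclose(A_rebuilt, A), np.allclose(A_dag, np.linalg.pinv(A)))
```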

Verify the left inverse:

$$\mathbf{A}^{\dagger}\mathbf{A} = \mathbf{A}^{-L}\mathbf{A} = \left( \mathbf{A}^{*}\mathbf{A} \right)^{-1} \mathbf{A}^{*}\mathbf{A} = \mathbf{I}_{n}$$
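The left-inverse formula can be checked against a library pseudoinverse. This is a sketch assuming NumPy and a random complex tall matrix (full column rank with probability 1). It uses `np.linalg.solve` to apply $(\mathbf{A}^{*}\mathbf{A})^{-1}$ without forming the inverse. It also shows that the product in the other order is only a projector onto $\mathcal{R}(\mathbf{A})$, not $\mathbf{I}_{m}$.

```python
import numpy as np

rng = np.random.default_rng(1)
m, n = 6, 4
A = rng.standard_normal((m, n)) + 1j * rng.standard_normal((m, n))  # tall, rho = n generically

AH = A.conj().T
A_left = np.linalg.solve(AH @ A, AH)        # (A^* A)^{-1} A^*

print(np.allclose(A_left, np.linalg.pinv(A)))
print(np.allclose(A_left @ A, np.eye(n)))   # identity of size n
P = A @ A_left                              # orthogonal projector onto R(A), rank n < m
print(np.allclose(P, np.eye(m)), np.allclose(P @ P, P))
```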

Wide: Only $\color{red}{\mathcal{N}_{\mathbf{A}}}$ is nontrivial: full row rank

$$ m < n, m = \rho: \qquad \mathbf{A}^{\dagger} = \mathbf{A}^{-R}$$

Block structures:

$$

\begin{align}

%

\mathbf{A} & =

%

\color{blue}{\mathbf{U_{\mathcal{R}}}}

\,

\left[ \begin{array}{cc}

\mathbf{S} &

\mathbf{0}

\end{array} \right]

%

\left[ \begin{array}{c}

\color{blue}{\mathbf{V_{\mathcal{R}}^{*}}} \\

\color{red} {\mathbf{V_{\mathcal{N}}^{*}}}

\end{array} \right]

%

\\

% Apinv

\mathbf{A}^{\dagger} & =

%

\left[ \begin{array}{cc}

\color{blue}{\mathbf{V_{\mathcal{R}}}} &

\color{red} {\mathbf{V_{\mathcal{N}}}}

\end{array} \right]

\left[ \begin{array}{c}

\mathbf{S}^{-1} \\

\mathbf{0}

\end{array} \right]

%

\color{blue}{\mathbf{U_{\mathcal{R}}^{*}}}

%

\end{align}

$$
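The wide block structure mirrors the tall one. In this NumPy sketch, with a random complex $3\times 5$ matrix assumed for illustration (full row rank with probability 1), $\Sigma = [\mathbf{S}\;\,\mathbf{0}]$ and $\Sigma^{\dagger} = [\mathbf{S}^{-1};\,\mathbf{0}]$. The zero block discards $\mathbf{V}_{\mathcal{N}}$.

```python
import numpy as np

rng = np.random.default_rng(3)
m, n = 3, 5
# Random complex wide matrix: full row rank (rho = m) with probability 1
A = rng.standard_normal((m, n)) + 1j * rng.standard_normal((m, n))

U, s, Vh = np.linalg.svd(A)                  # full SVD: U is m x m, Vh = [V_R^*; V_N^*] is n x n
V = Vh.conj().T                              # [V_R  V_N]

Sigma = np.zeros((m, n), dtype=complex)      # [S  0]
Sigma[:, :m] = np.diag(s)
Sigma_dag = np.zeros((n, m), dtype=complex)  # [S^{-1}; 0]
Sigma_dag[:m, :] = np.diag(1.0 / s)

A_rebuilt = U @ Sigma @ Vh                   # U_R [S  0] [V_R^*; V_N^*]
A_dag = V @ Sigma_dag @ U.conj().T           # [V_R  V_N] [S^{-1}; 0] U_R^*
print(np.allclose(A_rebuilt, A), np.allclose(A_dag, np.linalg.pinv(A)))
```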

Verify the right inverse:

$$\mathbf{A}\mathbf{A}^{\dagger} =

\mathbf{A}\mathbf{A}^{-R} =

\mathbf{A}\mathbf{A}^{*} \left( \mathbf{A} \, \mathbf{A}^{*} \right)^{-1} = \mathbf{I}_{m}$$
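A matching check of the right inverse, as a sketch assuming NumPy and a random complex wide matrix. Because $\mathbf{A}\mathbf{A}^{*}$ is Hermitian, $\mathbf{X} = \mathbf{A}^{*}(\mathbf{A}\mathbf{A}^{*})^{-1}$ satisfies $(\mathbf{A}\mathbf{A}^{*})\,\mathbf{X}^{*} = \mathbf{A}$. The code solves that system and takes the conjugate transpose.

```python
import numpy as np

rng = np.random.default_rng(2)
m, n = 3, 5
A = rng.standard_normal((m, n)) + 1j * rng.standard_normal((m, n))  # wide, rho = m generically

AH = A.conj().T
# X = A^* (A A^*)^{-1}  <=>  (A A^*) X^* = A, since A A^* is Hermitian
A_right = np.linalg.solve(A @ AH, A).conj().T

print(np.allclose(A_right, np.linalg.pinv(A)))
print(np.allclose(A @ A_right, np.eye(m)))   # identity of size m
print(np.allclose(A_right @ A, np.eye(n)))   # False: only a projector onto R(A^*)
```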

– Naryxus May 13 '19 at 18:05

I get the intuition for why the mapping from $\mathbb{R}^3$ to $\mathbb{R}^2$ cannot be injective (consider a projection onto a plane, etc.). But I have no idea what the formal proof looks like, because both sets are infinite, so there could possibly be a unique element in $\mathbb{R}^2$ for each element in $\mathbb{R}^3$.

I don't understand the step from non-injectivity to the nonexistence of the left pseudoinverse.
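One way to fill in that step, assuming the mapping is linear (a standard rank-nullity argument, not taken from the thread): for $\mathbf{A}\in\mathbb{R}^{2\times 3}$, $\dim\mathcal{N}(\mathbf{A}) = 3 - \operatorname{rank}\mathbf{A} \ge 1$, so some $\mathbf{v}\ne\mathbf{0}$ has $\mathbf{A}\mathbf{v}=\mathbf{0}$. Then for every $3\times 2$ matrix $\mathbf{B}$, $(\mathbf{B}\mathbf{A})\mathbf{v} = \mathbf{B}\mathbf{0} = \mathbf{0}\ne\mathbf{v}$. So $\mathbf{B}\mathbf{A}=\mathbf{I}_3$ is impossible, and $\mathbf{A}^{\dagger}\mathbf{A}$ can only be the projector onto $\mathcal{R}(\mathbf{A}^{*})$. A small NumPy check follows; the matrix and the random $\mathbf{B}$ are chosen for illustration.

```python
import numpy as np

A = np.array([[1.0, 0.0, 2.0],
              [0.0, 1.0, 3.0]])        # linear map R^3 -> R^2, rank 2
_, s, Vh = np.linalg.svd(A)            # full SVD: Vh is 3 x 3
v = Vh[-1]                             # last right singular vector spans N(A), ||v|| = 1
print(np.allclose(A @ v, 0))           # a nonzero vector is sent to 0: not injective

B = np.random.default_rng(0).standard_normal((3, 2))  # any candidate left inverse
print(np.allclose(B @ A @ v, 0))       # (BA) v = 0 != v, so BA != I_3

P = np.linalg.pinv(A) @ A              # A^dagger A
print(np.allclose(P, np.eye(3)), np.allclose(P @ P, P), np.isclose(np.trace(P), 2.0))
```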