When I was first introduced to the concept of the average (mean), I was confused. What does the average mean? How does one number, $\frac{1}{n}\sum_{i=1}^{n} a_{i}$, represent the "central tendency" of a set of data points $a_i$? Then I found a way to make sense of the concept: I thought of the average (mean) as "the number closest to all the data points at the same time".

Now, I want to prove this. Concisely:

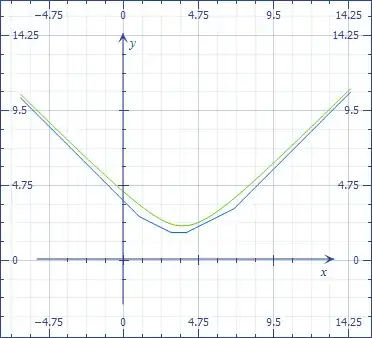

Let $f:\Bbb{R}\to\Bbb{R}$ be defined by:

$$f(x) = \sum_{i=1}^{n}|x-a_i|$$

To prove: $f(x)$ attains its minimum at $x=\bar a$, where $\bar a$ is the mean of the discrete data points $a_i$. This would mean that the sum of the distances from the mean to the data points is smaller than the corresponding sum for any other number.

My attempt: Clearly $f(x)$ is continuous, since it is a sum of continuous functions, and piecewise differentiable, since it is a sum of piecewise differentiable functions.

So, I find $f'(x)$. Before that, let's assume $a_1<a_2<\ldots<a_n$ [clearly, no loss of generality, here]:

$$ f'(x) = \begin{cases} -n, & x<a_1 \\ -n+2, & a_1<x<a_2 \\ -n+4, & a_2<x<a_3 \\ \vdots & \vdots \\ -n+2n = n, & x>a_n \end{cases} $$
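A quick numerical sanity check of these slopes, as a minimal sketch: the sample points and the helper names `f` and `interval_slopes` are made up for illustration. Since $f$ is linear between consecutive data points, the slope on each interval can be read off from two points inside it and compared with $-n+2k$, where $k$ is the number of data points to the left of the interval.

```python
def f(x, a):
    """Sum of absolute distances from x to every data point in a."""
    return sum(abs(x - ai) for ai in a)


def interval_slopes(a):
    """Slope of f on each of the n + 1 open intervals cut out by the points a.

    Assumes the points are distinct. Index k in the result is the interval
    with exactly k data points to its left.
    """
    a = sorted(a)
    n = len(a)
    slopes = []
    for k in range(n + 1):
        if k == 0:            # x < a_1
            x0, x1 = a[0] - 2, a[0] - 1
        elif k == n:          # x > a_n
            x0, x1 = a[-1] + 1, a[-1] + 2
        else:                 # a_k < x < a_{k+1}
            lo, hi = a[k - 1], a[k]
            x0 = lo + (hi - lo) / 4
            x1 = lo + 3 * (hi - lo) / 4
        # f is linear on the interval, so a difference quotient gives its slope
        slopes.append((f(x1, a) - f(x0, a)) / (x1 - x0))
    return slopes


a = [1, 2, 4, 7, 11]          # hypothetical sample data, n = 5
n = len(a)
for k, s in enumerate(interval_slopes(a)):
    print(k, s, -n + 2 * k)   # measured slope next to the formula -n + 2k
```

For this sample the measured slopes match $-n+2k$ on every interval, and none of them is zero, as expected for odd $n$.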

Now the problem arises: $f'(x)=0$ has no solutions when $n$ is odd. When $n$ is even, the solution set is an entire interval:

$$x \in (a_{n/2},a_{n/2+1})$$
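To see what this interval looks like in a concrete case, here is a small sketch with an even number of made-up points (the data and the helper name `f` are illustrative assumptions, not part of the argument). It evaluates $f$ at several points inside $(a_{n/2}, a_{n/2+1})$ and at one point on each side.

```python
def f(x, a):
    """Sum of absolute distances from x to every data point in a."""
    return sum(abs(x - ai) for ai in a)


a = [1, 2, 4, 7]              # hypothetical data, n = 4, so the interval is (a_2, a_3) = (2, 4)

inside = [2.5, 3.0, 3.5]      # points inside the middle interval
outside = [1.5, 5.0]          # one point to the left, one to the right

inside_values = [f(x, a) for x in inside]
outside_values = [f(x, a) for x in outside]

print(inside_values)          # [8.0, 8.0, 8.0]
print(outside_values)         # [9.0, 10.0]
```

All three interior values agree, while both outside values are larger, which is consistent with $f'(x)=0$ on the whole interval.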

This means I failed; my intuition was wrong from the very beginning. It can be proven [I think] that for even $n$, $\bar a$ lies in the above interval, but still: it means that there are other real numbers that are just as much the "mean" of the data points as the mean itself [if my "definition" was right].

So, two questions:

- Why was my intuition wrong?

- Which intuition is right for averages (mean)?