Problem statement

Start with the matrix

$$

\mathbf{A} \in\mathbb{C}^{m\times n}_{\rho}, \quad m > n.

$$

and the data vector $b\in\mathbb{C}^{m}$. Classify the solution of the linear system

$$

\mathbf{A} x = b

$$

Unique solution, exact: $\lVert \mathbf{A}x - b\rVert = \mathbf{0}$

The data vector $b$ is entirely within the column space of $\mathbf{A}$:

$$

\color{blue}{b} \in \color{blue}{\mathcal{R} \left( \mathbf{A} \right)}

$$

Example:

$$

\begin{align}

%

\mathbf{A} x &= b\\

%

\left[

\begin{array}{ccc}

1 & 0 & 0 \\

0 & 0 & 0 \\

0 & 0 & 0 \\

0 & 0 & 0 \\

0 & 0 & 0 \\

\end{array}

\right]

\left[

\begin{array}{c}

x_{1} \\

x_{2} \\

x_{3} \\

\end{array}

\right]

&=

\left[

\begin{array}{c}

1 \\

0 \\

0 \\

0 \\

0 \\

\end{array}

\right]

%

\end{align}

$$

Exact solution:

$$

\left[

\begin{array}{c}

x_{1} \\

x_{2} \\

x_{3} \\

\end{array}

\right]

=

\left[

\begin{array}{c}

1 \\

0 \\

0 \\

\end{array}

\right]

$$

There are no restrictions on matrix shape or rank. The touchstone is whether the data is in the $\color{blue}{range}$ space.

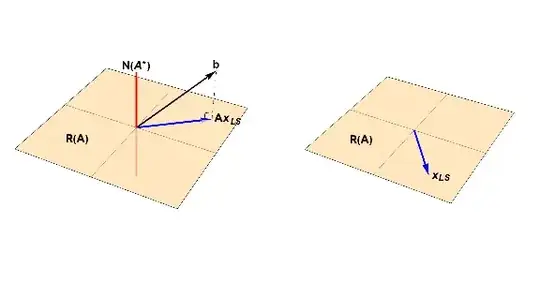

Geometric view:

• If the data vector has a $\color{red}{null}$ space component, there is no direct solution, and we must use least squares.

• If the data vector is in the column space, an exact solution exists; there is a direct solution.

Unique solution, not exact: $\lVert \mathbf{A}x - b\rVert > 0$

The data vector has components in both the $\color{blue}{range}$ and $\color{red}{null}$ spaces:

$$

b =

\color{blue}{b_{\mathcal{R}}} +

\color{red}{b_{\mathcal{N}}}

$$

The data vector cannot be expressed entirely in terms of combinations of the columns of the matrix $\mathbf{A}.$ The method of least squares is used to find the best approximation. This is the orthogonal projection of the data vector onto $\color{blue}{\mathcal{R} \left( \mathbf{A} \right)}$; the "shadow" of the data vector. In one of the follow on posts mentioned below, we see that the sum of the squares of the residual errors measures the amount of the data vector in $\color{red}{\mathcal{N} \left( \mathbf{A}^{*} \right)}$.

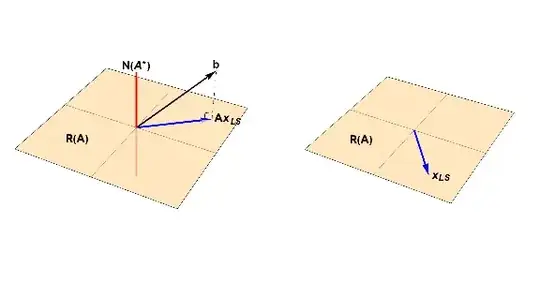

The least squares solution is

$$

\color{blue}{x_{LS}} = \color{blue}{\mathbf{A}^{+}b}

$$

Infinite solutions, not exact: $\lVert \mathbf{A}x - b\rVert > 0$

The posts mentioned below explore this issue. The upshot is that $\color{red}{\mathcal{N} \left( \mathbf{A} \right)}$ is not trivial. The least squares solution is the affine space

$$

x_{LS} =

\color{blue}{\mathbf{A}^{+}b} +

\color{red} {\left(

\mathbf{I}_{n} + \mathbf{A}^{+} \mathbf{A} \right) y}, \quad y\in\mathbb{C}^{n}

$$

No solution

When the data vector is in the $\color{red}{null}$ space,

$$

b \in \color{red}{\mathcal{N} \left( \mathbf{A}^{*} \right)}

$$

there is no least squares solution.

Explore Stack Exchange:

Looking at the data vector to classify existence and uniqueness: Query about the Moore Penrose pseudoinverse method

This post shows that the sum of the squares of the residual errors is the magnitude of the component of the data vector in the $\color{red}{null}$ space.

How does the SVD solve the least squares problem?

For your post, you wanted to classify solution based on the data vector. Here is the classification scheme when the data vector is not known:

What forms does the Moore-Penrose inverse take under systems with full rank, full column rank, and full row rank?, generalized inverse of a matrix and convergence for singular matrix