By inspection, no, the least squares solution is not unique. The null space $\mathcal{N}\left( \mathbf{A}^{*} \right)$ is not trivial: the columns of $\mathbf{A}$ live in a space of dimension $m=5$, and we have only 2 linearly independent column vectors.

To continue, a guess was made about the notation and the intended problem. Start with the $m=5$ data points $\left\{ x_{k}, y_{k} \right\}_{k=1}^{m}$ and the trial function

$$

y(x) = a_{0} + a_{1} x

$$

The linear system is

$$

\begin{align}

\mathbf{A} x &= y \\

%

\left[

\begin{array}{rr}

1 & -5 \\

1 & -2 \\

1 & -1 \\

1 & 2 \\

1 & 5 \\

\end{array}

\right]

%

\left[

\begin{array}{c}

a_{0} \\ a_{1}

\end{array}

\right]

%

&=

%

\left[

\begin{array}{r}

-2 \\

0 \\

0 \\

3 \\

4 \\

\end{array}

\right]

%

\end{align}

$$

The general least squares problem is defined as

$$

x_{LS} = \left\{ x\in\mathbb{C}^{n} \colon \lVert \mathbf{A} x - b \rVert_{2}^{2} \text{ is minimized} \right\}

$$

which has the general solution

$$

x_{LS} = \mathbf{A}^{\dagger} b + \left( \mathbf{I}_{n} - \mathbf{A}^{\dagger}\mathbf{A} \right) z, \quad z\in\mathbb{C}^{n}

$$

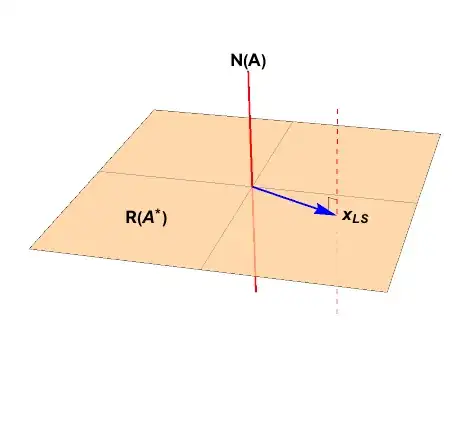

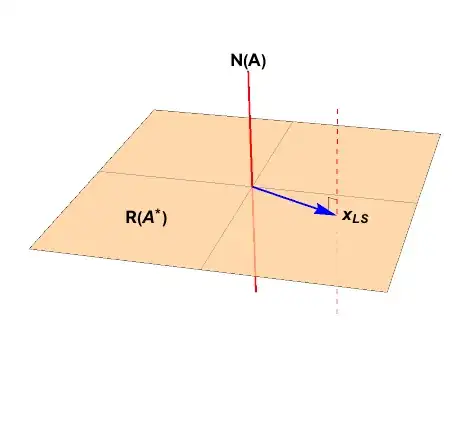

which is in general an affine space shown with the dashed red line below.
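The general solution formula above can be illustrated with a small exact computation. This is only a sketch under stated assumptions: the rank-one matrix `A = u v^T`, the vectors `u`, `v`, `b`, and all helper names are hypothetical and are not the matrix from this problem. It relies on the standard identity that a rank-one matrix $u v^{*}$ has pseudoinverse $v u^{*} / (\lVert u \rVert^{2} \lVert v \rVert^{2})$, and checks that two different choices of $z$ give different minimizers with the same residual.

```python
from fractions import Fraction

# Hypothetical rank-one example A = u v^T (NOT the matrix from the text).
u = [1, 2, 3]
v = [1, 2]
b = [1, 0, 1]

def dot(p, q):
    """Real dot product of two equal-length sequences."""
    return sum(pi * qi for pi, qi in zip(p, q))

def matvec(M, x):
    """Matrix-vector product for a list-of-rows matrix."""
    return [dot(row, x) for row in M]

def matmul(M, N):
    """Matrix-matrix product for list-of-rows matrices."""
    cols = list(zip(*N))
    return [[dot(row, col) for col in cols] for row in M]

A = [[ui * vj for vj in v] for ui in u]  # 3 x 2, rank one

# Pseudoinverse of a rank-one matrix: v u^T / (|u|^2 |v|^2), shape 2 x 3.
scale = Fraction(1, dot(u, u) * dot(v, v))
A_pinv = [[scale * vj * ui for ui in u] for vj in v]

# Projector onto the null space of A: I_n - A^+ A.
n = len(v)
P = matmul(A_pinv, A)
null_proj = [[(1 if i == j else 0) - P[i][j] for j in range(n)]
             for i in range(n)]

def minimizer(z):
    """Evaluate A^+ b + (I - A^+ A) z for a given z."""
    particular = matvec(A_pinv, b)
    homogeneous = matvec(null_proj, z)
    return [p + h for p, h in zip(particular, homogeneous)]

def residual_sq(x):
    """Squared 2-norm of A x - b."""
    r = [ai - bi for ai, bi in zip(matvec(A, x), b)]
    return dot(r, r)

x0 = minimizer([0, 0])
x1 = minimizer([3, -7])
print(x0, residual_sq(x0))
print(x1, residual_sq(x1))
```

Exact rational arithmetic is used so the two residuals can be compared with `==` rather than with a floating-point tolerance.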

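Pose the normal equations, where $\mathbf{1}$ is the vector of ones, $x$ inside the matrix entries is the vector of abscissae $x_{k}$, and $b = y$ is the data vector: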

$$

\begin{align}

\mathbf{A}^{*} \mathbf{A} x &= \mathbf{A}^{*} b \\

\left[

\begin{array}{cc}

\mathbf{1} \cdot \mathbf{1} & \mathbf{1} \cdot x \\

x \cdot \mathbf{1} & x \cdot x

\end{array}

\right]

%

\left[

\begin{array}{c}

a_{0} \\

a_{1}

\end{array}

\right]

%

&=

%

\left[

\begin{array}{c}

\mathbf{1} \cdot y \\

x \cdot y

\end{array}

\right] \\[3pt]

%

\left[

\begin{array}{rr}

5 & -1 \\

-1 & 59

\end{array}

\right]

%

\left[

\begin{array}{c}

a_{0} \\

a_{1}

\end{array}

\right]

%

&=

%

\left[

\begin{array}{r}

5 \\

36

\end{array}

\right].

%

%

\end{align}

%

$$
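As a quick check of the entries in the normal equations, the dot products can be evaluated directly from the guessed data. This is a minimal sketch; the names `xs`, `ys`, `ones`, `gram`, and `rhs` are illustrative and not part of the original problem.

```python
# Data points guessed in the text: abscissae x_k and ordinates y_k.
xs = [-5, -2, -1, 2, 5]
ys = [-2, 0, 0, 3, 4]

def dot(u, v):
    """Real dot product of two equal-length sequences."""
    return sum(ui * vi for ui, vi in zip(u, v))

ones = [1] * len(xs)

# Gram matrix A^* A and right-hand side A^* y for the columns (1, x).
gram = [[dot(ones, ones), dot(ones, xs)],
        [dot(xs, ones),   dot(xs, xs)]]
rhs = [dot(ones, ys), dot(xs, ys)]

print(gram)  # [[5, -1], [-1, 59]]
print(rhs)   # [5, 36]
```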

The solution is

$$

\begin{align}

x_{LS}

%

&= \left( \mathbf{A}^{*}\mathbf{A} \right)^{-1} \mathbf{A}^{*} y \\[3pt]

%

&=

%

\frac{1}{294}

\left[

\begin{array}{rr}

59 & 1 \\

1 & 5 \\

\end{array}

\right]

%

\left[

\begin{array}{r}

5 \\

36

\end{array}

\right] \\[3pt]

%

&=

%

\frac{1}{294}

\left[

\begin{array}{r}

331 \\

185

\end{array}

\right]

%

\end{align}

$$
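The arithmetic above can be reproduced exactly with rational numbers. The sketch below assumes the guessed data points and solves the $2\times 2$ normal equations with the adjugate formula; `solve_2x2` is a hypothetical helper written for this illustration.

```python
from fractions import Fraction

def solve_2x2(mat, r):
    """Solve mat @ a = r for a 2x2 matrix using the adjugate formula."""
    det = mat[0][0] * mat[1][1] - mat[0][1] * mat[1][0]
    if det == 0:
        raise ValueError("matrix is singular")
    a0 = Fraction(mat[1][1] * r[0] - mat[0][1] * r[1], det)
    a1 = Fraction(mat[0][0] * r[1] - mat[1][0] * r[0], det)
    return [a0, a1]

# Data points guessed in the text.
xs = [-5, -2, -1, 2, 5]
ys = [-2, 0, 0, 3, 4]
n_points = len(xs)

# Normal equations for the trial function y(x) = a0 + a1 x.
gram = [[n_points, sum(xs)],
        [sum(xs), sum(x * x for x in xs)]]
rhs = [sum(ys), sum(x * y for x, y in zip(xs, ys))]

a0, a1 = solve_2x2(gram, rhs)
print(a0, a1)  # 331/294 185/294
```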

As shown in "Difference between orthogonal projection and least squares solution", the normal equations solution is the pseudoinverse solution, but we need the rest of the minimizers on the dashed line.

Knowing that

$$

\mathcal{N}\left( \mathbf{A}^{*} \right) = \text{span }

\left\{ \

%

\left[

\begin{array}{r}

7 \\

-10 \\

0 \\

0 \\

3

\end{array}

\right], \

%

\left[

\begin{array}{r}

4 \\

-7 \\

0 \\

3 \\

0

\end{array}

\right],\

%

\left[

\begin{array}{r}

1 \\

-4 \\

3 \\

0 \\

0

\end{array}

\right]\

\right\}

%

$$

the full least squares solution is

$$

x_{LS} =

%

\frac{1}{294}

\left[

\begin{array}{r}

331 \\

185

\end{array}

\right]

%

+ \alpha

%

\left[

\begin{array}{r}

7 \\

-10 \\

0 \\

0 \\

3

\end{array}

\right]

%

+ \beta

%

\left[

\begin{array}{r}

4 \\

-7 \\

0 \\

3 \\

0

\end{array}

\right]

%

+ \gamma

%

\left[

\begin{array}{r}

1 \\

-4 \\

3 \\

0 \\

0

\end{array}

\right]

$$

where $\alpha$, $\beta$, and $\gamma$ are arbitrary complex constants.
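The spanning set quoted for $\mathcal{N}\left( \mathbf{A}^{*} \right)$ can be checked by hand or with a few lines of code. The sketch below, using illustrative names only, confirms that each vector is orthogonal to both columns of $\mathbf{A}$ for the guessed data, and that the three vectors are linearly independent by evaluating the determinant of their last three components.

```python
# Columns of A for the guessed data: the vector of ones and the abscissae.
xs = [-5, -2, -1, 2, 5]
ones = [1] * len(xs)

# Spanning vectors for N(A^*) as quoted in the text.
basis = [
    [7, -10, 0, 0, 3],
    [4, -7, 0, 3, 0],
    [1, -4, 3, 0, 0],
]

def dot(u, v):
    """Real dot product of two equal-length sequences."""
    return sum(ui * vi for ui, vi in zip(u, v))

def det3(m):
    """Determinant of a 3x3 matrix by cofactor expansion on the first row."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

# A^* v = 0 means v is orthogonal to every column of A.
products = [(dot(ones, v), dot(xs, v)) for v in basis]
print(products)  # [(0, 0), (0, 0), (0, 0)]

# A nonzero 3x3 minor shows the three vectors are linearly independent.
minor = det3([v[2:] for v in basis])
print(minor)  # -27
```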

For more insight, see "Is the unique least norm solution to Ax=b the orthogonal projection of b onto R(A)?"