Let $X$ be a nonnegative random variable with distribution function $F_X$. Writing $x=\int_{[0,\infty)}\,\chi_{[0,x)}(t)\,\text{d}t$ for $x\geq 0$ and applying Tonelli's Theorem (the version of Fubini's Theorem for nonnegative integrands), we have $$E[X]=\int_{[0,\infty)}\,x\,\text{d}F_X(x)=\int_{[0,\infty)}\,\int_{[0,\infty)}\,\chi_{[0,x)}(t)\,\text{d}t\,\text{d}F_X(x)=\int_{[0,\infty)}\,\int_{[0,\infty)}\,\chi_{[0,x)}(t)\,\text{d}F_X(x)\,\text{d}t\,,$$

where $\chi_E$ is the indicator (characteristic) function of a set $E$.

Since $\chi_{[0,x)}(t)=\chi_{(t,\infty)}(x)$ for all $t\geq 0$, this gives

$$E[X]=\int_{[0,\infty)}\,\int_{[0,\infty)}\,\chi_{(t,\infty)}(x)\,\text{d}F_X(x)\,\text{d}t=\int_{[0,\infty)}\,\text{Prob}(X> t)\,\text{d}t\,.$$

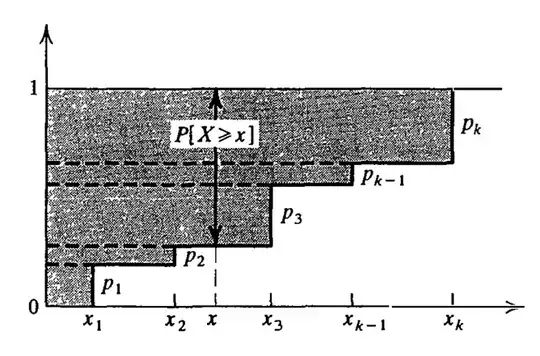
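
As a quick sanity check of the formula above, here is a worked example under an assumed distribution that does not appear in the original argument: take $X$ to be exponential with a hypothetical rate parameter $\lambda>0$, so that $\text{Prob}(X>t)=e^{-\lambda t}$ for $t\geq 0$. Then

$$E[X]=\int_{[0,\infty)}\,\text{Prob}(X>t)\,\text{d}t=\int_{[0,\infty)}\,e^{-\lambda t}\,\text{d}t=\frac{1}{\lambda}\,,$$

which agrees with the usual mean of the exponential distribution computed from its density.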

In particular, if $X$ is discrete, say, $X(\omega)\in\left\{x_1,x_2,\ldots\right\}$ for all $\omega$ with $0\leq x_1<x_2<\cdots$ and $\lim\limits_{n\to\infty}\,x_n=\infty$ (such an increasing enumeration doesn't always exist, for example, when the possible values of $X$ make up all of $\mathbb{Q}_{>0}$), then

$$\begin{align}
E[X]&=\int_{[0,x_1)}\,\text{Prob}(X>t)\,\text{d}t+\sum_{i=1}^\infty\,\int_{[x_{i},x_{i+1})}\,\text{Prob}(X>t)\,\text{d}t
\\&=\int_{[0,x_1)}\,1\,\text{d}t+\sum_{i=1}^\infty\,\int_{[x_{i},x_{i+1})}\,\text{Prob}(X>x_{i})\,\text{d}t
\\&=x_1+\sum_{i=1}^\infty\,\left(x_{i+1}-x_i\right)\,\text{Prob}\left(X>x_{i}\right)\,.
\end{align}$$

If $\left\{x_1,x_2,\ldots\right\}=\{1,2,\ldots\}$, then we get $$E[X]=1+\sum_{i=1}^\infty\,\text{Prob}(X>i)\,,$$ as required. As Zachary Selk mentioned, if $\left\{x_1,x_2,\ldots\right\}=\{0,1,2,\ldots\}$, then $$E[X]=\sum_{i=0}^\infty\,\text{Prob}(X>i)\,.$$
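
The discrete formula can also be checked numerically. The following is only a minimal sketch, assuming a finite support (so the infinite sum truncates after the last value); the function names `direct_expectation` and `tail_expectation` and the sample distributions are hypothetical and chosen for illustration. It uses exact rational arithmetic so that the two sides can be compared with `==`.

```python
from fractions import Fraction


def direct_expectation(values, probs):
    """E[X] computed directly as sum of x_i * Prob(X = x_i)."""
    return sum(x * p for x, p in zip(values, probs))


def tail_expectation(values, probs):
    """E[X] computed as x_1 + sum (x_{i+1} - x_i) * Prob(X > x_i).

    Assumes `values` is a finite, strictly increasing list of
    nonnegative numbers and `probs` sums to 1 (illustrative setup).
    """
    total = values[0]      # integral of 1 over [0, x_1)
    tail = Fraction(1)     # starts as Prob(X >= x_1) = 1
    for i in range(len(values) - 1):
        tail -= probs[i]   # now tail == Prob(X > x_i)
        total += (values[i + 1] - values[i]) * tail
    return total


# A fair six-sided die: values {1, ..., 6}, so E[X] = 1 + sum Prob(X > i).
die_values = [Fraction(k) for k in range(1, 7)]
die_probs = [Fraction(1, 6)] * 6

# An unevenly spaced support, to exercise the (x_{i+1} - x_i) factors.
odd_values = [Fraction(1, 2), Fraction(2), Fraction(5)]
odd_probs = [Fraction(1, 4), Fraction(1, 2), Fraction(1, 4)]

print(direct_expectation(die_values, die_probs), tail_expectation(die_values, die_probs))
print(direct_expectation(odd_values, odd_probs), tail_expectation(odd_values, odd_probs))
```

In both cases the two printed values should coincide, as the derivation predicts; for an infinite support one would have to truncate the sum and accept an approximation instead.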