I saw from your last comments that you are still having trouble with these, so let's talk it through.

What's the general theory?

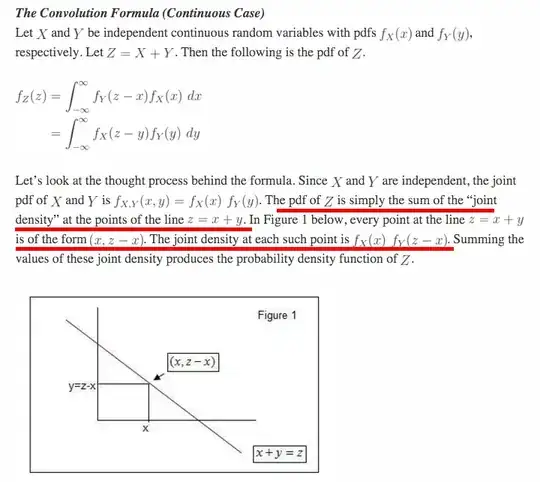

So let's say that you have some random variables $X, Y$ with joint probability density $$j(x,\, y) = \lim_{dx,dy\to0}\frac1{dx~dy}\text{Pr}\big[X \in (x,\, x+dx) ~\land~ Y \in (y,\, y+dy)\big].$$ For independent random variables with PDFs $f(x),\;g(y)$ we of course have $j(x, y) = f(x)~g(y).$ If you now want to compute the PDF of $Z = \alpha(X,\, Y),$ you need to integrate $$h(z) = \lim_{dz\to0}\frac{1}{dz}\int_{\alpha(x,\,y) \in (z, z + dz)}dx~dy~j(x,\,y).$$ There will be a family of partial inverses $\bar\alpha_i(x,\,z),\;i\in\{1,\,\dots,\,n\}$ such that $\alpha(x,\,\bar\alpha_i(x,\,z)) = z.$ (We'll need other conditions on them in a moment, like continuity, monotonicity, and probably differentiability.) For simplicity let's just take $n=1$; if it's not, we just sum over $i.$

So we can make this integration domain explicit by writing

$$h(z) = \lim_{dz\to0}\frac{1}{dz}\int_{-\infty}^\infty dx~\int_{\bar\alpha(x,\,z)}^{\bar\alpha(x,\,z+dz)}dy~j(x,\,y).$$

Then we can apply the "first mean value theorem for integrals", which states that for continuous $f,$ $\int_a^bdu~f(u) = f(c)\cdot(b - a)$ for some $a < c < b.$ Applying it to the inner integral over $y$ gives

$$h(z) = \int_{-\infty}^\infty dx~\lim_{dz\to0}~j\big(x,\,c(x,\,z,\,dz)\big)~\frac{\bar\alpha(x,\,z + dz) - \bar\alpha(x,\,z)}{dz}$$

for some $\bar\alpha(x,\,z) < c(x,\,z,\,dz) < \bar\alpha(x,\,z+dz),$ limiting to $$h(z) = \int_{-\infty}^\infty dx~j\big(x,\,\bar\alpha(x,\,z)\big)~\left|\frac{\partial\bar\alpha}{\partial z}\right|.$$ You could of course interchange $x\leftrightarrow y$ if the inverse is simpler in one variable than in the other. (The absolute value brackets come from something which I didn't explain above, but which is hopefully easy to appreciate: we can't actually apply the mean value theorem in the above form unless $a < b,$ so for decreasing functions, $\partial_z \bar\alpha < 0,$ we first have to use $\int_a^bdx~f(x)=-\int_b^adx~f(x).$ So it comes from $\operatorname{sgn}(f)~f = |f|$ applied to $f = \partial_z\bar\alpha.$)
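If you want to sanity-check this formula numerically, here is a minimal sketch. The function names (`transformed_pdf`, `normal_pdf`, and so on) are made up for illustration, the integral is truncated to a finite range and done with a plain midpoint rule, and the test case is my own choice rather than your problem: two independent standard normals with $Z = X + Y,$ whose sum is known to be normal with variance 2.

```python
import math

def transformed_pdf(j, alpha_bar, dalpha_bar_dz, z,
                    x_lo=-10.0, x_hi=10.0, n=20000):
    """Midpoint-rule estimate of h(z) = integral dx j(x, abar(x, z)) |d abar / dz|.

    Assumes a single partial inverse (n = 1 in the text) and that the
    integrand is negligible outside [x_lo, x_hi].
    """
    dx = (x_hi - x_lo) / n
    total = 0.0
    for k in range(n):
        x = x_lo + (k + 0.5) * dx
        total += j(x, alpha_bar(x, z)) * abs(dalpha_bar_dz(x, z))
    return total * dx

def normal_pdf(t, var=1.0):
    """Density of a zero-mean normal with variance var."""
    return math.exp(-t * t / (2.0 * var)) / math.sqrt(2.0 * math.pi * var)

# Illustrative case (an assumption, not the uniform problem):
# X, Y independent standard normals and alpha(x, y) = x + y.
def j(x, y):
    return normal_pdf(x) * normal_pdf(y)

def alpha_bar(x, z):
    return z - x          # solves x + y = z for y

def dalpha_bar_dz(x, z):
    return 1.0            # derivative of (z - x) with respect to z

if __name__ == "__main__":
    for z in (0.0, 1.0, 2.5):
        numeric = transformed_pdf(j, alpha_bar, dalpha_bar_dz, z)
        exact = normal_pdf(z, var=2.0)
        print(f"z = {z}: formula {numeric:.6f}, N(0, 2) density {exact:.6f}")
```

Swapping in a different `alpha_bar` and its $z$-derivative is all it takes to try another $\alpha,$ as long as there is a single inverse branch.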

In your case...

So we've got these uniform distributions $U(a,\,b)$ with constant-over-the-interval PDFs $u_{[a,b]}(x) = \frac{1}{b-a} \cdot\{1 \text{ if } a < x < b \text { else } 0 \}.$ Furthermore for $\alpha(x, y) = x \pm y$ we have $\bar\alpha(x,\,z) = \pm (z - x)$ and so the integral is $$h(z) = \int_{-\infty}^\infty dx~f(x)~g\big(\pm(z - x)\big)~|\pm 1|.$$ If $X \sim U(-w, w)$ and $Y \sim U(-w, w)$ are independent, the integrand is nonzero only when both $x$ and $\pm (z - x)$ are between $-w$ and $w$. We subtract $\pm z$ from the latter condition to get $-w \mp z < \mp x < w \mp z.$ When we multiply through by $-1$ in the top case we flip the inequalities, and both cases turn out to be the same, $z - w < x < z + w.$ So we can write this as $$h(z) = \int_{-\infty}^{\infty} dx~u_{[-w,~w]}(x)~u_{[z-w,~z+w]}(x).$$

But how do we evaluate this crazy integral? Well, think about the two zones where each function is nonzero "overlapping" as you move $z$ from $-2w$ (before they overlap) to $+2w$ (after), and you'll see there are two regimes: one where the overlap is "increasing," $-2w < z < 0,$ and one where it is "decreasing," $0 < z < 2w$.

That should be enough of a hint to help you complete this yourself. The last reasoning is thus hidden behind a spoiler section:

If you split this out into those two situations, you'll find that the overlap has length $\int_{-w}^{z + w} dx = z + 2w$ in the first case and $\int_{z-w}^{w} dx = 2w - z$ in the second case. On the overlap the integrand is the constant $(2w)^{-2},$ so $h(z)$ is a triangle with height $h(0) = 2w/(4 w^2) = (2w)^{-1}$ and base $z \in (-2w, +2w),$ satisfying the condition that the total area is $\frac12 (4w)~(2w)^{-1} = 1.$ This is true for both the sum and the difference of such variables.
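If you'd like to convince yourself of the triangle empirically, a quick simulation along these lines should do it. This is only a sketch: `triangle_pdf` and `empirical_pdf` are names I made up, $w = 1.5$ and the sample size are arbitrary, and the estimate is a crude count of samples in a small window around $z,$ so expect agreement to a couple of decimal places at best.

```python
import random

def triangle_pdf(z, w):
    """Triangular density on (-2w, 2w) with peak 1/(2w) at z = 0."""
    return max(0.0, 2.0 * w - abs(z)) / (4.0 * w * w)

def empirical_pdf(samples, z, half_width):
    """Fraction of samples within half_width of z, divided by the window width."""
    hits = sum(1 for s in samples if abs(s - z) < half_width)
    return hits / (len(samples) * 2.0 * half_width)

rng = random.Random(0)   # fixed seed so the run is repeatable
w = 1.5                  # arbitrary illustrative half-width
n = 200_000

# Independent draws of X, Y ~ U(-w, w) for the sum and for the difference.
sums = [rng.uniform(-w, w) + rng.uniform(-w, w) for _ in range(n)]
diffs = [rng.uniform(-w, w) - rng.uniform(-w, w) for _ in range(n)]

if __name__ == "__main__":
    for z in (-2.0, 0.0, 1.5):
        print(f"z = {z}: sum {empirical_pdf(sums, z, 0.05):.3f}, "
              f"difference {empirical_pdf(diffs, z, 0.05):.3f}, "
              f"triangle {triangle_pdf(z, w):.3f}")
```

Both the sum and the difference columns should track the triangle, which is the symmetry claimed above.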