In terms of marginal density, how does one know that summing over the $x$ (or rather along the linear line) values for the joint density of $(x,z-x)$ give us the density function of $z$? More importantly, can someone explain this intuitively? (aside from proofs)

-

My answer to this question may be useful: http://math.stackexchange.com/questions/1533145/why-is-the-convolution-output-in-terms-of-t-not-tau/1533414#1533414 – Math1000 Nov 27 '15 at 15:49

2 Answers

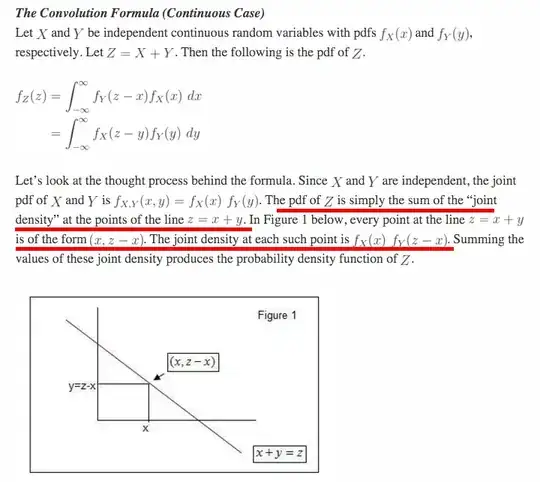

You are looking at the final formula and seeking intuition rather than thinking about how the formula was arrived at. Indeed, the "thought process" that your book offers as an explanation of the formula leaves much to be desired (e.g. it puts joint density in eyebrow-wiggling quotes instead of sum). Here is what I wrote in a previous answer:

Let $Z = X+Y$. For any fixed value of $z$, $$F_Z(z) = P\{Z \leq z\} = P\{X+Y \leq z\}\\ = \int\int_{x+y\le z} f_{X,Y}(x,y)\,\mathrm d(x,y) =\int_{-\infty}^{\infty}\left[ \int_{-\infty}^{z-x} f_{X,Y}(x,y)\,\mathrm dy\right]\,\mathrm dx$$ and so, using the rule for differentiating under the integral sign (see the comments following this answer if you have forgotten this) $$\begin{align*} f_Z(z) &= \frac{\partial}{\partial z}F_Z(z)\\ &= \frac{\partial}{\partial z}\int_{-\infty}^{\infty}\left[ \int_{-\infty}^{z-x} f_{X,Y}(x,y)\,\mathrm dy\right] \,\mathrm dx\\ &= \int_{-\infty}^{\infty}\frac{\partial}{\partial z}\left[ \int_{-\infty}^{z-x} f_{X,Y}(x,y)\,\mathrm dy\right]\,\mathrm dx\\ &= \int_{-\infty}^{\infty} f_{X,Y}(x,z-x)\,\mathrm dx\tag{1} \end{align*}$$ When $X$ and $Y$ are independent random variables, the joint density is the product of the marginal densities and we get the convolution formula $$f_{X+Y}(z) = \int_{-\infty}^{\infty} f_{X}(x)f_Y(z-x)\,\mathrm dx ~~ \text{for independent random variables} ~X~\text{and}~Y.\tag{2}$$

So much so for the math. What about the "thought process" that can give an intuitive feel for why the convolution integral gives the density of $Z = X+Y$? Well, remember that $f_Z(z)$ is a density (measured in units of probability mass/length) and not a probability. In fact, $P\{Z = z\} = 0$ for all $z$, while the probability of the event $A$ that $Z$ has value inside a small interval of length $\Delta z$ centered at $z$ is $$P(A) = P\left\{z - \frac{\Delta z}{2} < Z < z + \frac{\Delta z}{2}\right\} \approx f_Z(z)\Delta z.$$ Now, in terms of $(X,Y)$, we have that the event occurs $A$ occurs whenever the random point $(X,Y)$ lies inside a diagonal strip bounded by the lines $x+y = z + \frac{\Delta z}{2}$ and $x+y = z - \frac{\Delta z}{2}$. These lines cross the vertical axis at the points $(0, z + \frac{\Delta z}{2})$ and $(0, z - \frac{\Delta z}{2})$, that is, the vertical separation of the lines is $\Delta z$.

Now, we can find $P(A)$ by dividing up this diagonal strip using vertical lines spaced $\Delta x$ apart, thus creating narrow

parallelograms whose vertical sides are of length $\Delta z$

and are spaced $\Delta x$ apart and whose center is

$(x_i,z-x_i)$. Thus,

$$P\{(X,Y) \in \text{parallelogram}\}

\approx f_{X,Y}(x_i,z-x_i)\times \{\text{area of parallelogram}\}

= f_{X,Y}(x_i,z-x_i) \Delta x \Delta z$$

so that

$$P(A) = f_Z(z)\Delta z =

\left[\sum_i f_{X,Y}(x_i,z-x_i) \Delta x\right]\times \Delta z.

\tag{3}$$

The quantity in square brackets in $(3)$ is a sum

(in fact a Riemann sum) and by taking the limit as the

parallelograms grow ever narrower, that sum becomes an integral,

that is, $(3)$ leads to

$$f_Z(z)\Delta z =

\int_{-\infty}^\infty f_{X,Y}(x,z-x) \, \mathrm dx\times \Delta z$$

thus proving $(1)$. It is in this sense thatThe pdf of

$Z$ is simply the sum of the "joint density" at the points of the line

$z = x+y$ as your book claims.

Personally, I vastly prefer the CDF approach over such messy calculations and inelegant "thought processes"

- 3,634

- 25,197

-

-

1Since $Z-X=Y$ and $X$ and $Y$ are defined as being independent random variables, yes, in this problem, $X $ and $Z-X$ are independent random variables. For arbitrary independent random variables $X$ and $Z$, $X$ and $Z-X$ are not independent. – Dilip Sarwate Nov 29 '15 at 15:08

-

i find it rather strange though as $Z-X$ involves the RV $X$...? But i see your point as $Y$ is independent and is defined as $Z-X$. – Brofessor Nov 29 '15 at 16:22

-

How did we know to use the $\int_{-\infty}^{\infty}\left[ \int_{-\infty}^{z-x} f_{X,Y}(x,y),\mathrm dy\right],\mathrm dx$ to find the probability of $Z$? In particular the joint density? – Brofessor Dec 03 '15 at 13:18

-

In addition, WOULD $f_{X,Y}(x_i,z-x_i)\times {\text{area of parallelogram}}$ be analogous to density times area = Probability mass for 2-dimensional RVs versus density times length = Probability mass for 1-dimensional RVs? – Brofessor Dec 03 '15 at 13:50

-

1"How did we know to use the $\int_{-\infty}^{\infty}\left[ \int_{-\infty}^{z-x} f_{X,Y}(x,y),\mathrm dy\right],\mathrm dx$ to find the probability of $Z$?" Please look at the diagram in your question. – Dilip Sarwate Dec 03 '15 at 17:11

if the variables are independent, you have $$f_{XY}(x,y) = f_X(x)f_y(y).$$ Hence $$P(X + Y \le x) = \iint_{(s,t)|s+t\le x} f_X(s)f_y(t)\,ds\,dt = \int_{-\infty}^\infty\int_{-\infty}^{x - t} f_X(s)f_Y(t)\,ds\,dt$$ Now differentiate and invoke Leibnitz's rule to get $$f_{X+Y}(x) = \int_{-\infty}^\infty f_X(x-t)f_Y(t)\,dt = (f_X*f_Y)(x).$$

- 49,383