Let's forget about the basis of eigenvectors and just concentrate on change of basis.

Say we're given the matrix $T_{\mathcal B}$ which represents some linear transformation $T: \Bbb R^n \to \Bbb R^n$ relative to some basis $\mathcal B = \{b_1, \dots, b_n\}$, but our vectors (column matrices) are given with respect to another basis $\mathcal C = \{c_1, \dots, c_n\}$. What we'd like is the matrix that represents $T$ with respect to $\mathcal C$; we'll call that matrix $T_{\mathcal C}$.

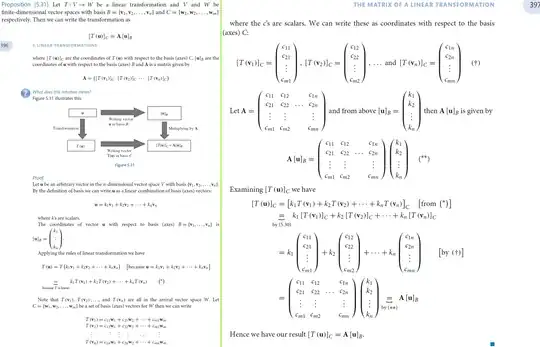

Here's essentially what we want to do:

$$\begin{matrix} \Bbb R^n_{\mathcal C} & \stackrel{I_{\mathcal C \to \mathcal B}}\longrightarrow & \begin{matrix}\Bbb R^n_{\mathcal B} & \stackrel{T_{\mathcal B}}\longrightarrow & \Bbb R^n_{\mathcal B}\end{matrix} & \stackrel{I_{\mathcal B \to \mathcal C}}\longrightarrow & \Bbb R^n_{\mathcal C} \\ & \large\searrow & &\large\nearrow\\ & & \stackrel{T_{\mathcal C}}{\large\longrightarrow}\end{matrix}$$

Hopefully from this diagram you can see that the matrix $T_{\mathcal C}$ should be the same as the product $I_{\mathcal B \to \mathcal C}T_{\mathcal B}I_{\mathcal C \to \mathcal B}$.

The matrices $I_{\mathcal C \to \mathcal B}$ and $I_{\mathcal B \to \mathcal C}$ are inverses of each other: converting a vector's coordinates from $\mathcal C$ to $\mathcal B$ and then back from $\mathcal B$ to $\mathcal C$ (or the other way round) must return the coordinates we started with, so $I_{\mathcal C \to \mathcal B}I_{\mathcal B \to \mathcal C} = I_{\mathcal B \to \mathcal C}I_{\mathcal C \to \mathcal B} = I$, where $I$ is the identity matrix. (The notation $I_{\mathcal C \to \mathcal B}$ reflects that it is the matrix of the identity map: it changes the coordinates used to describe a vector, but not the vector itself.)

From here on, to match your document's notation, I'll denote $V=I_{\mathcal C \to \mathcal B}$. Then we have $V^{-1} = I_{\mathcal B \to \mathcal C}$.

I now claim that $V$ will simply be the matrix whose $i$th column is the coordinate vector of $c_i$ in the $\mathcal B$ basis. Thus $$V = \begin{bmatrix} [c_1]_{\mathcal B} & [c_2]_{\mathcal B} & \cdots & [c_n]_{\mathcal B}\end{bmatrix}$$
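To make this recipe concrete, here is a minimal Python sketch using exact `fractions.Fraction` arithmetic (the helper names `solve`, `columns_to_matrix` and `change_of_basis` are hypothetical, chosen for illustration). It assumes both bases are given as lists of vectors in standard coordinates, so $[c_i]_{\mathcal B}$ is found by solving $\begin{bmatrix} b_1 & \cdots & b_n \end{bmatrix} y = c_i$; the example bases are made up.

```python
from fractions import Fraction


def solve(A, b):
    """Solve A y = b exactly by Gauss-Jordan elimination (A square, invertible)."""
    n = len(A)
    # Augmented matrix [A | b] with exact rational entries.
    M = [[Fraction(x) for x in row] + [Fraction(rhs)] for row, rhs in zip(A, b)]
    for col in range(n):
        # Find a row with a nonzero entry in this column and swap it up.
        pivot = next(r for r in range(col, n) if M[r][col] != 0)
        M[col], M[pivot] = M[pivot], M[col]
        # Scale the pivot row so the pivot becomes 1.
        p = M[col][col]
        M[col] = [x / p for x in M[col]]
        # Eliminate this column from every other row.
        for r in range(n):
            if r != col and M[r][col] != 0:
                f = M[r][col]
                M[r] = [x - f * y for x, y in zip(M[r], M[col])]
    return [M[r][n] for r in range(n)]


def columns_to_matrix(vectors):
    """Stack the given vectors as the columns of a matrix (list of rows)."""
    n = len(vectors[0])
    return [[v[i] for v in vectors] for i in range(n)]


def change_of_basis(B, C):
    """Return V = I_{C->B}: column i is [c_i]_B, the solution of B_mat y = c_i."""
    B_mat = columns_to_matrix(B)
    coords = [solve(B_mat, c) for c in C]  # [c_1]_B, ..., [c_n]_B
    return columns_to_matrix(coords)


# Made-up bases of R^2, written in standard coordinates.
B = [[1, 0], [1, 1]]  # b_1, b_2
C = [[2, 1], [0, 3]]  # c_1, c_2
V = change_of_basis(B, C)
print([[str(x) for x in row] for row in V])  # [['1', '-3'], ['1', '3']]
```

Fractions are used instead of floats so that the entries of $V$ come out exactly rather than with rounding error.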

Why does this work? Let's see:

$$V[c_1]_{\mathcal C} = V\begin{bmatrix} 1 \\ 0 \\ \vdots \\ 0\end{bmatrix} = \text{the first column of $V$} = [c_1]_{\mathcal B}$$
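For a concrete instance (these bases are made up purely for illustration), take $n = 2$ with, in standard coordinates, $b_1 = (1,0)$, $b_2 = (1,1)$, $c_1 = (2,1)$ and $c_2 = (0,3)$. Since $c_1 = 1\,b_1 + 1\,b_2$ and $c_2 = -3\,b_1 + 3\,b_2$, we have $[c_1]_{\mathcal B} = (1,1)$ and $[c_2]_{\mathcal B} = (-3,3)$, so

$$V = \begin{bmatrix} 1 & -3 \\ 1 & 3 \end{bmatrix}, \qquad V\begin{bmatrix} 1 \\ 0 \end{bmatrix} = \begin{bmatrix} 1 \\ 1 \end{bmatrix} = [c_1]_{\mathcal B}, \qquad V^{-1} = \frac{1}{6}\begin{bmatrix} 3 & 3 \\ -1 & 1 \end{bmatrix}.$$

The columns of $V^{-1}$ are $[b_1]_{\mathcal C} = (\tfrac12, -\tfrac16)$ and $[b_2]_{\mathcal C} = (\tfrac12, \tfrac16)$; for example $\tfrac12 c_1 - \tfrac16 c_2 = (1, \tfrac12) - (0, \tfrac12) = (1,0) = b_1$, consistent with $V^{-1} = I_{\mathcal B \to \mathcal C}$.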

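The same computation shows $V[c_i]_{\mathcal C} = [c_i]_{\mathcal B}$ for every $i$, so by linearity $V[x]_{\mathcal C} = [x]_{\mathcal B}$ for every vector $x$; that is, $V$ really is $I_{\mathcal C \to \mathcal B}$. Therefore the matrix $T_{\mathcal C} = V^{-1}T_{\mathcal B}V$, read from right to left (the rightmost matrix acts on the vector first), first converts the coordinates of a vector from the $\mathcal C$ basis to the $\mathcal B$ basis, then applies the transformation $T$, and finally converts the coordinates back to the $\mathcal C$ basis.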
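A minimal Python sketch of the full composite, again with exact `Fraction` arithmetic. The values are illustrative assumptions: `V` comes from the made-up bases $b_1=(1,0)$, $b_2=(1,1)$, $c_1=(2,1)$, $c_2=(0,3)$ (standard coordinates), and `T_B` is an arbitrary hypothetical matrix; the helper names are also hypothetical. The sketch checks that multiplying by $T_{\mathcal C}$ gives the same result as doing the three steps one at a time.

```python
from fractions import Fraction


def matmul(A, B):
    """Product of two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def mat_vec(A, v):
    """Multiply matrix A by a column vector v given as a flat list."""
    return [sum(a * x for a, x in zip(row, v)) for row in A]


def inverse(A):
    """Exact inverse of a square matrix by Gauss-Jordan elimination on [A | I]."""
    n = len(A)
    M = [[Fraction(x) for x in row] + [Fraction(int(i == j)) for j in range(n)]
         for i, row in enumerate(A)]
    for col in range(n):
        pivot = next(r for r in range(col, n) if M[r][col] != 0)
        M[col], M[pivot] = M[pivot], M[col]
        p = M[col][col]
        M[col] = [x / p for x in M[col]]
        for r in range(n):
            if r != col and M[r][col] != 0:
                f = M[r][col]
                M[r] = [x - f * y for x, y in zip(M[r], M[col])]
    return [row[n:] for row in M]  # right half is A^{-1}


V = [[1, -3], [1, 3]]   # I_{C->B}: columns are [c_1]_B, [c_2]_B (example values)
T_B = [[1, 2], [0, 3]]  # hypothetical matrix of T relative to B
V_inv = inverse(V)      # I_{B->C}

T_C = matmul(V_inv, matmul(T_B, V))

x_C = [1, 2]  # C-coordinates of some vector x
# C -> B, apply T in B-coordinates, then B -> C.
step_by_step = mat_vec(V_inv, mat_vec(T_B, mat_vec(V, x_C)))

print([[str(x) for x in row] for row in T_C])  # [['3', '6'], ['0', '1']]
print(mat_vec(T_C, x_C) == step_by_step)       # True
```

Note that $T_{\mathcal C}$ and $T_{\mathcal B}$ share trace ($4$) and determinant ($3$), as similar matrices must.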

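From here the only other thing is that if your matrix happens to have $n$ linearly independent eigenvectors, you could use those as your basis $\mathcal C$. In that case each column $[c_i]_{\mathcal B}$ of $V$ is an eigenvector, and $T_{\mathcal C} = V^{-1}T_{\mathcal B}V$ is diagonal, with the corresponding eigenvalues on its diagonal.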
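A minimal sketch of the eigenvector case in plain Python. The matrix `T_B` is a made-up symmetric example whose eigenvectors, written in $\mathcal B$-coordinates, are $(1,1)$ (eigenvalue $3$) and $(1,-1)$ (eigenvalue $1$); putting them as the columns of $V$ should make $V^{-1}T_{\mathcal B}V$ diagonal. The helper names are hypothetical, and the inverse uses the $2\times 2$ adjugate formula.

```python
from fractions import Fraction


def matmul(A, B):
    """Product of two matrices given as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]


def inverse_2x2(A):
    """Inverse of a 2x2 matrix via the adjugate formula (assumes det != 0)."""
    (a, b), (c, d) = A
    det = Fraction(a * d - b * c)
    return [[d / det, -b / det], [-c / det, a / det]]


T_B = [[2, 1], [1, 2]]  # hypothetical example matrix of T relative to B
# Eigenvectors of T_B (in B-coordinates) as the columns of V:
# (1, 1) with eigenvalue 3, and (1, -1) with eigenvalue 1.
V = [[1, 1],
     [1, -1]]
T_C = matmul(inverse_2x2(V), matmul(T_B, V))
print([[str(x) for x in row] for row in T_C])  # [['3', '0'], ['0', '1']]
```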