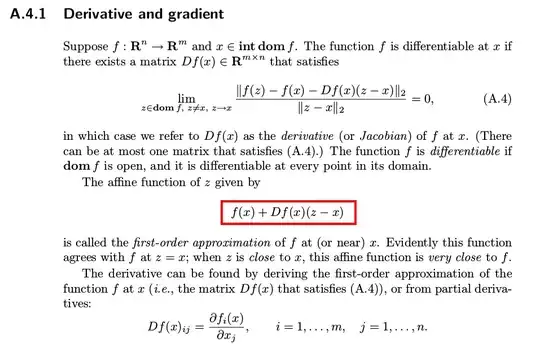

I am studying Boyd & Vandenberghe's *Convex Optimization* and ran into a problem on page 642. According to the definition, the derivative $Df(x)$ is the matrix for which the first-order approximation of $f$ at $x$ is the affine function

$$f(x)+Df(x)(z-x)$$

and when $f$ is real-valued (i.e., $f : \Bbb R^n \to \Bbb R$), the gradient is

$$\nabla{f(x)}=Df(x)^{T}$$

See the original text below:

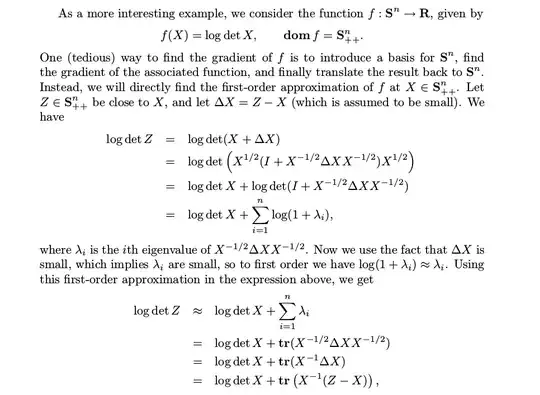
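A small numerical sketch of this definition. This is my own illustration, not from the book, and the function $f(x)=x_1^2+3x_1x_2$ is a hypothetical example with a hand-computed gradient. $Df(x)$ is the row vector $\nabla f(x)^T$, and the error of the affine approximation, divided by $\|z-x\|$, should go to zero as $z\to x$:

```python
import math

def f(x):
    # hypothetical example function: f(x) = x1^2 + 3*x1*x2
    return x[0] ** 2 + 3 * x[0] * x[1]

def grad_f(x):
    # hand-computed gradient; Df(x) is the 1x2 row vector grad_f(x)^T
    return [2 * x[0] + 3 * x[1], 3 * x[0]]

def first_order(x, z):
    # affine approximation f(x) + Df(x)(z - x) = f(x) + grad_f(x)^T (z - x)
    g = grad_f(x)
    return f(x) + sum(gi * (zi - xi) for gi, zi, xi in zip(g, z, x))

def scaled_error(x, direction, t):
    # |f(z) - (f(x) + Df(x)(z - x))| / ||z - x||  with  z = x + t * direction
    z = [xi + t * di for xi, di in zip(x, direction)]
    step = math.sqrt(sum((zi - xi) ** 2 for zi, xi in zip(z, x)))
    return abs(f(z) - first_order(x, z)) / step

x = [1.0, 2.0]
d = [0.6, -0.8]  # unit-length direction
for t in (1e-1, 1e-2, 1e-3):
    print(t, scaled_error(x, d, t))
```

Because this $f$ is quadratic, the remainder here is exactly $-1.08\,t^2$, so the scaled error is $1.08\,t$ and shrinks linearly with the step.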

But when discussing the gradient of the function $f(X)=\log{\det{X}}$, where the first-order approximation is $f(X+\Delta X)\approx f(X)+\mbox{tr}(X^{-1}\Delta X)$, the author says "we can identify $X^{-1}$ as the gradient of $f$ at $X$".

Where did the trace $\mbox{tr}(\cdot)$ go?
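A numerical sketch (my own, not from the book) that checks the approximation above for one symmetric positive definite $X$. It assumes the trace inner product $\langle A,B\rangle=\mbox{tr}(A^TB)=\sum_{ij}A_{ij}B_{ij}$ on matrices, and compares $\log\det(X+\Delta X)-\log\det X$ with $\mbox{tr}(X^{-1}\Delta X)$ and with the entrywise sum $\sum_{ij}(X^{-1})_{ij}\Delta X_{ij}$. The matrices `X` and `E` are arbitrary choices for illustration:

```python
import math

def det(a):
    # determinant by Gaussian elimination with partial pivoting
    n = len(a)
    m = [row[:] for row in a]
    d = 1.0
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(m[r][c]))
        if m[p][c] == 0.0:
            return 0.0
        if p != c:
            m[c], m[p] = m[p], m[c]
            d = -d
        d *= m[c][c]
        for r in range(c + 1, n):
            factor = m[r][c] / m[c][c]
            for k in range(c, n):
                m[r][k] -= factor * m[c][k]
    return d

def inverse(a):
    # Gauss-Jordan elimination on the augmented matrix [A | I]
    n = len(a)
    m = [row[:] + [1.0 if i == j else 0.0 for j in range(n)] for i, row in enumerate(a)]
    for c in range(n):
        p = max(range(c, n), key=lambda r: abs(m[r][c]))
        m[c], m[p] = m[p], m[c]
        piv = m[c][c]
        m[c] = [v / piv for v in m[c]]
        for r in range(n):
            if r != c:
                factor = m[r][c]
                m[r] = [vr - factor * vc for vr, vc in zip(m[r], m[c])]
    return [row[n:] for row in m]

def trace_of_product(a, b):
    # tr(AB) = sum_i sum_k A[i][k] * B[k][i]
    n = len(a)
    return sum(a[i][k] * b[k][i] for i in range(n) for k in range(n))

def inner(a, b):
    # trace inner product <A, B> = tr(A^T B) = sum_ij A[i][j] * B[i][j]
    return sum(x * y for ra, rb in zip(a, b) for x, y in zip(ra, rb))

X = [[4.0, 1.0, 0.5], [1.0, 3.0, 0.2], [0.5, 0.2, 2.0]]    # symmetric, diagonally dominant, so positive definite
E = [[0.3, -0.1, 0.2], [-0.1, 0.5, 0.4], [0.2, 0.4, -0.2]]  # symmetric perturbation direction
t = 1e-4
dX = [[t * e for e in row] for row in E]
X_plus = [[x + d for x, d in zip(rx, rd)] for rx, rd in zip(X, dX)]

exact_change = math.log(det(X_plus)) - math.log(det(X))
Xinv = inverse(X)
print(exact_change, trace_of_product(Xinv, dX), inner(Xinv, dX))
```

The first two printed numbers should agree up to a second-order term in $t$, and the last two should agree to rounding error, since for symmetric $\Delta X$ the value $\mbox{tr}(X^{-1}\Delta X)$ is the same as the entrywise sum.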