The best I can do here is an inductive argument:

Clearly, after $0$ draws, there is always exactly $1$ red ball with a probability of $1 = \frac1{0+1}$ i.e. certainty of being there.

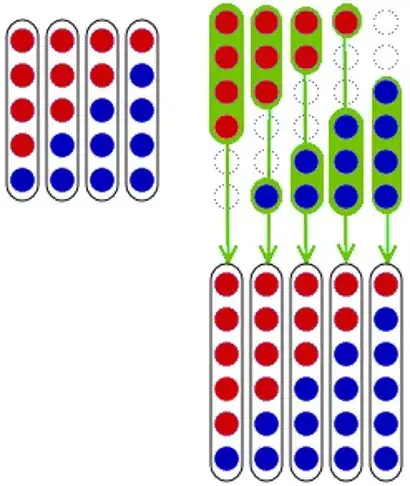

For the inductive case, we notice that drawing $n + 1$ balls first begins with drawing $n$ balls, then another. After drawing the $n$ balls, there is uniform chance of any number of red balls in the urn from $1$ to $n+1$, by induction. That is, for any $1 \leq k \leq n+1$, the probability of $k$ red balls is $\frac1{n+1}$. Now, what is the probability of having $k$ red balls after $n + 1$ draws? Let's consider some cases:

If $2 \leq k \leq n + 1$, we have two more cases to consider with non-zero probability: $k$ balls after $n$ draws, and then

draw a blue ball, or we have $k - 1$ after $n$ draws, and then we

draw a red ball. Now, after we have already drawn $n$ times, there

are $n+2$ balls in total in the urn. The chances then of drawing a

blue ball, given $k$ red balls in the urn is $\frac{n + 2 - k}{n +

2}$. The chances of drawing a red ball given $k - 1$ red balls is

$\frac{k - 1}{n + 2}$. Both numbers of red balls after $n$ draws are equally likely by the inductive hypothesis, with probability $\frac1{n+1}$. The overall probability is then $\frac1{n +

1}(\frac{n + 2 - k}{n + 2} + \frac{k - 1}{n + 2}) = \frac1{n + 2}$.

If $k = 1$, then we must never draw a red ball. So we must have exactly $1$ red ball remaining after $n$ draws, and then draw a blue ball. By induction, the probability of $1$ ball after $n$ draws is $\frac1{n+1}$. Drawing a blue ball after this has probability $\frac{n+1}{n+2}$. Multiplying the two probabilities easily gives us the desired $\frac{1}{n+2}$.

If $k = n+2$, then the problem is symmetric, considering $k=1$ for blue balls instead of red balls and so the previous argument applies.

It seems as though Polya chose this question intentionally to challenge our very intuitions, which are so often wrong. He seems to have crafted the question also to force us to use a clever argument of induction, topped with the icing of an argument based on symmetry. Naturally, we are inclined to think of some sort of geometrically decreasing probability distribution across $n$. Once symmetry is realised, we must update this: perhaps the distribution approaches a normal distribution as $n$ gets large? These are certainly intuitions that I had when I saw this question. But I don't trust my intuition. And I am right not to. This is a good demonstration of where intuition fails, at least initially, before we really soak in the problem. So we look instead for a rigorous demonstration, and the most elegant one at that.

As Einstein once said "Everything Should Be Made as Simple as Possible, But Not Simpler". Erdos used to talk about the most elegant proofs as being "from the book". I won't blacken either of their names by claiming the above is "as simple as possible" or "from the book", but it's certainly the best I can think of. Intuitively, I don't feel you can "compress" the argument much further, without losing its essence. That intuition I can't explain, it's just a hunch.

Having said that, the key steps in the argument, I believe, are as follows. The first is hitting upon the right idea of induction: i.e. induction on $n$, not $k$. There is a conceptual "aha" moment when you realise that drawing $n + 1$ balls is the same as first drawing $n$ balls, and then drawing another. The second "aha" moment is when you follow your previous idea for induction, and notice that all values of $k$ balls after $n + 1$ draws, except the corner cases of $n + 2$ and $1$, can happen in exactly two ways: either $k$ balls are chosen from $n$, and then no more, or $k - 1$ are chosen, and $1$ more. We follow this hunch, work out the maths, and hey presto, the correct answer pops out nicely. Then we're left with the corner cases. The case for $1$ also works, hoorah! The final touch of elegance is to use a symmetric argument on the case of $n+2$, rather than work it out from scratch.

There is a shorter way of explaining this, quasi-intuitively, but it loses the crispness of the above argument. Still, it is a nice auxiliary intuition to complement the above. Again, imagine the inductive proof. Imagine all the possibly values for red balls: $1, 2, 3, \dots, n+2$. In the next step, each choice "gives" part of its probability away to the next one up. Clearly larger values give more than smaller ones: because there is more chance of drawing another red once you already have red. But they also get more, because they get a value from the number below. Still, overall they lose. Each gets $\frac{k - 1}{n + 2}$ and gives $\frac{k}{n + 2}$ So $1$ "gives away" a value of $\frac1{n + 2}$ to $2$. $2$ "gets" this, and gives away $\frac2{n + 2}$ to $3$ etc. Eventually, when all values are passed on like this, the distribution settles back at uniformity, except now with $\frac1{n + 2}$ instead of $\frac1{n + 1}$.