I am trying to understand with basic mathematical background how the $t$-Student distribution is a "natural" pdf to define. A more accessible explanation than this post, or the daunting Biometrika paper by RA Fisher.

Background:

The central limit theorem states that if ${\textstyle X_{1},X_{2},...,X_{n}}$ are each a random sample of size ${\textstyle n},$ taken from a population with mean ${\textstyle \mu }$ and finite variance ${\textstyle \sigma ^{2}}$ and if ${\textstyle {\bar {X}}}$ is the sample mean, then the limiting form of the distribution of ${\textstyle Z=\left({\frac {{\bar {X}}_{n}-\mu }{\sigma /\surd n}}\right)}$ as ${\textstyle n\to \infty },$ is the standard normal distribution.

If $X_1, \ldots, X_n$ are iid random variables $\sim N(\mu,\sigma^2)$,

$$\frac{\bar{X}\,-\,\mu}{\sigma/\sqrt{n}} \sim N(0,1)$$

This is the basis of the Z-test, $Z=\frac{\bar{X}\,-\,\mu}{\sigma/\sqrt{n}}$

[Note that the preceding opening statement is now correct after reflecting @Ian and @Michael Hardy comments to the OP in reference to the CLT.]

If the standard deviation of the population, $\sigma$, is unknown we can replace it by the estimation based on a sample, $S$, but then the expression (one-sample t-test statistic) will follow a $t$-distribution:

$$ t=\frac{\bar{X}\,-\,\mu}{S/\sqrt{n}}\sim t_{n-1}$$

with $$s=\sqrt{\frac{\sum(X_i-\bar X)^2}{n-1}}.$$

Minimal manipulations of this equation for $T$

$$\begin{align} \frac{\bar{X}\,-\,\mu}{S/\sqrt{n}} &= \frac{\bar{X}\,-\,\mu}{\frac{\sigma}{\sqrt{n}}} \frac{1}{\frac{S}{\sigma}}\\[2ex] &= Z\,\frac{1}{\frac{S}{\sigma}}\\[2ex] &= \frac{Z}{\sqrt{\frac{\color{blue}{\sum(X_i-\bar X)^2}}{(n-1)\,\color{blue}{\sigma^2}}}}\\[2ex] &\sim\frac{Z}{\sqrt{\frac{\color{blue}{\chi_{n-1}^2}}{n-1}}}\\[2ex] &\sim t_{n-1}\small \tag 1 \end{align}$$

will introduce the chi square distribution, $(\chi^2).$

The chi square is the distribution that models $X^2$ with $X\sim N(0,1)$:

Let's say that $X \sim N(0,1)$ and that $Y=X^2$ and find the density of $Y$ by using the $\text{cdf}$ method:

$$\Pr(Y \leq y) = \Pr(X^2 \leq y)= \Pr(-\sqrt{y} \leq x \leq > \sqrt{y}).$$

We cannot integrate in close form the density of the normal distribution. But we can express it:

$$ F_X(y) = F_X(\sqrt{y})- F_X(-\sqrt[]{y}).$$ Taking the derivative of the cdf:

$$ f_X(y)= F_X'(\sqrt{y})\,\frac{1}{\sqrt{y}}+ > F_X'(\sqrt{-y})\,\frac{1}{\sqrt{y}}.$$

Since the values of the normal $\text{pdf}$ are symmetrical:

$$ f_X(y)= F_X'(\sqrt{y})\,\frac{1}{\sqrt{y}}.$$

Equating this to the $\text{pdf}$ of the normal (now the $x$ in the $\text{pdf}$ will be $\sqrt{y}$ to be plugged into the $e^{-\frac{x^2}{2}}$ part of the normal $\text{pdf}$); and remembering to in include $\frac{1}{\sqrt{y}}$ at the end:

$$\begin{align} f_X(y) &= F_X'\left(\sqrt{y}\right)\,\frac{1}{\sqrt[]{y}}\\[2ex] &=\frac{1}{\sqrt{2\pi}}\,e^{-\frac{y}{2}}\, \frac{1}{\sqrt[]{y}}\\[2ex] &=\frac{1}{\sqrt{2\pi}}\,e^{-\frac{y}{2}}\, y^{\frac{1}{2}- 1} \end{align}$$

Comparing to the pdf of the chi square:

$$ f_X(x)= \frac{1}{2^{\nu/2}\Gamma(\frac{\nu}{2})}e^{\frac{-x}{2}}x^{\frac{\nu}{2}-1}$$

and, since $\Gamma(1/2)=\sqrt{\pi}$, for $\nu=1$ df, we have derived exactly the $\text{pdf}$ of the chi square.

In the case of the $t$-distribution the chi-square is suitable to model the sum of squared normals, i.e $\displaystyle \sum(X_i-\bar X)^2 $ in the set of Eq $(1),$ a well known property derived here typically with $n$ degrees of freedom, but

why is it $n\,-\,1$ here, i.e. $\color{blue}{\chi^2_{n-1}}$ in eq. $(1)$?

I don't know how to explain $\sigma^2$ in $\frac{\sum(X_i-\bar X)^2}{\sigma^2}$ in equation $(1)$ becoming "absorbed" into the $\chi_{n-1}^2$ part of the $\text{t-Student's pdf}.$

So it boils down to understanding why

$$\frac 1 {\sigma^2} \left((X_1-\bar X)^2 + \cdots + (X_n - \bar X)^2 \right) \sim \chi^2_{n-1}.$$

After that the derivation of the pdf is not that daunting.

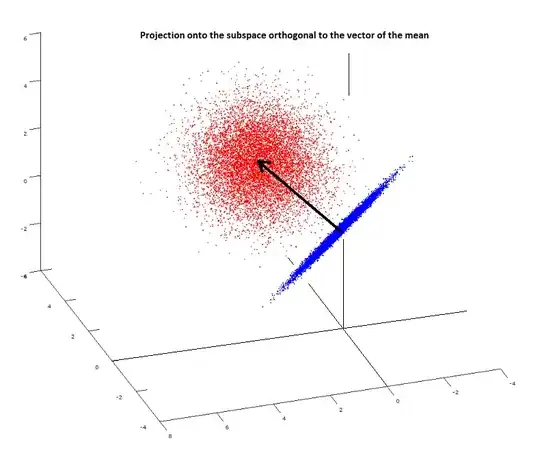

PS: The accepted answer below becomes clear after reading this Wikipedia entry. Also, a plot may be useful including the spherical cloud $(X_1,X_2,X_3)$ (in red) with the orthogonal projection, $(X_1-\bar X,\ldots,X_n-\bar X),$ on the $n-1$ subspace forming a plane (in blue) at the origin: