I finally figure this out! I post the answer and hope it might be helpful for someone else. Two other questions I asked related to this one are

- A question about co-variance of two linear combinations

- Why the principal components correspond to the eigenvalues?

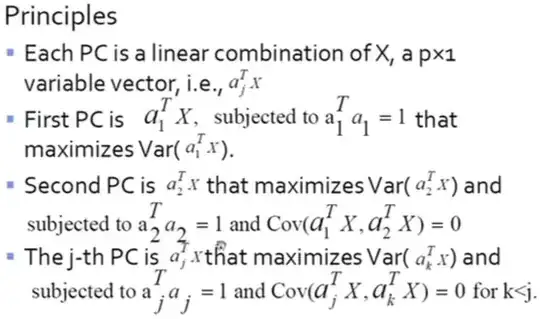

By the first question, I figured out that ${\mathop{\rm cov}} ({{\bf{a}}^{\rm{T}}}{\bf{X}},{{\bf{b}}^{\rm{T}}}{\bf{X}}) = {{\bf{a}}^{\rm{T}}}\rm{var}({\bf{X}}){\bf{b}}$.

By the second question, I figured out that the the loadings of each principal component are orthogonal eigenvectors of the co-variance matrix $\rm{var}({\bf{X}})$. Note that co-variance matrix is a real-valued symmetric matrix, which must have $n$ orthogonal eigenvectors.

As a result, suppose $\bf{a}_1^\rm{T}$ and $\bf{a}_2^\rm{T}$ are loadings of two principal components, then ${\mathop{\rm cov}} ({\bf{a}}_1^{\rm{T}}{\bf{X}},{\bf{a}}_2^{\rm{T}}{\bf{X}}) = {\bf{a}}_1^{\rm{T}}\rm{var}({\bf{X}}){{\bf{a}}_2}{\rm{ = }}{\bf{a}}_1^{\rm{T}}\lambda {{\bf{a}}_2}{\rm{ = }}\lambda {\bf{a}}_1^{\rm{T}}{{\bf{a}}_2}{\rm{ = }}0$, which completes the proof.