DISCLAIMER: These are only my opinions and intuitions about how I understand ReLU.

> ReLU has 1 point of "drastic" change, and is "otherwise linear".

Exactly, and that makes all the difference!

Let's say a neuron in the first layer gets a negative value for $z = w^T x + b$. Then, with the ReLU activation function $g(z) = \max(0, z)$, the neuron computes the line $g(z) = 0$.

Suppose the corresponding neuron in the next layer gets a positive value for $z$. Then the 2nd neuron computes the line $g(z) = z$.
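A minimal NumPy sketch of the two cases above. The input, weights and biases (`x`, `w_first`, `b_first`, `w_second`, `b_second`) are made up, chosen only so that one neuron gets a negative $z$ and the other a positive one; for simplicity both neurons read the same input here, rather than the second one reading the first layer's output.

```python
import numpy as np

def relu(z):
    """ReLU activation g(z) = max(0, z): 0 for negative z, z itself otherwise."""
    return np.maximum(0.0, z)

# Hypothetical input and parameters, for illustration only
x = np.array([1.0, 2.0])
w_first, b_first = np.array([-1.0, -0.5]), 0.5   # z = -1 - 1 + 0.5 = -1.5 (negative)
w_second, b_second = np.array([0.5, 1.0]), 0.0   # z = 0.5 + 2 = 2.5 (positive)

z_first = w_first @ x + b_first
z_second = w_second @ x + b_second

print(relu(z_first), relu(z_second))  # 0.0 2.5
```

The negative-$z$ neuron sits on the flat line $g(z) = 0$, while the positive-$z$ neuron passes $z$ through unchanged.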

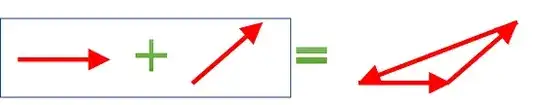

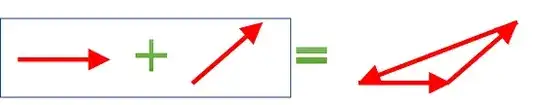

I hope these figures illustrate how ReLU becomes useful.

With the ReLU activation and a proper adjustment of weights, the 2nd layer can probably compute a new feature (a triangle). If we add a 3rd layer, the corresponding neuron in it can probably compute a feature that looks like a quadrilateral, and so on. (You can even imagine this as playing a game of building blocks with a child!)
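The triangle in the figures is a 2-D region. As a simpler 1-D analogue (my own illustration, not necessarily the construction behind the figures), three shifted ReLUs with hand-picked weights already add up to a triangular "building block":

```python
import numpy as np

def relu(z):
    return np.maximum(0.0, z)

def triangle(x):
    """1-D triangular bump built from three shifted ReLUs (hand-picked weights):
    0 for x <= 0, rises to 1 at x = 1, falls back to 0 at x = 2, stays 0 after."""
    return relu(x) - 2.0 * relu(x - 1.0) + relu(x - 2.0)

xs = np.array([-1.0, 0.0, 0.5, 1.0, 1.5, 2.0, 3.0])
print(triangle(xs))  # values: 0, 0, 0.5, 1, 0.5, 0, 0
```

Each ReLU contributes one kink (at 0, 1 and 2), and the weights decide whether the slope goes up or down there; adding more ReLUs adds more kinks, so more complex shapes can be stacked up piece by piece.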

This property of ReLU, that the outputs of each layer act as building blocks for the next layer, is what makes it so powerful across many kinds of datasets. ReLU is also simpler and cheaper to compute than activation functions like sigmoid or tanh, which need exponentials: its output is either 0 or the same thing, $z$. Hence ReLU is usually the best default choice for most types of data, unless the data inherently has some properties that can be better modelled by other activation functions like sigmoid or tanh.

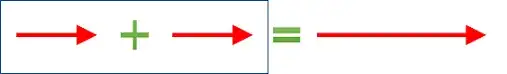

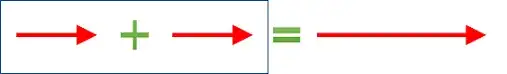

In contrast, a completely linear activation function, with no kink like ReLU's at 0, can only compute the same feature over and over: stacking linear layers just gives another linear function, so the extra layers only change the magnitude or scale.
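A quick numerical check of this collapse, as a sketch with a hypothetical tiny network (2 inputs, 4 hidden units, 1 output, random weights): with an identity activation, the two layers are exactly one affine map, $W_2(W_1 x + b_1) + b_2 = (W_2 W_1)x + (W_2 b_1 + b_2)$.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical tiny network: 2 inputs -> 4 hidden units -> 1 output (random weights)
W1, b1 = rng.normal(size=(4, 2)), rng.normal(size=4)
W2, b2 = rng.normal(size=(1, 4)), rng.normal(size=1)

def identity(z):
    return z  # a purely linear activation

def relu(z):
    return np.maximum(0.0, z)

def two_layer(x, g):
    """Forward pass, applying activation g to the hidden layer."""
    return W2 @ g(W1 @ x + b1) + b2

# With the identity activation, the two layers collapse into ONE affine map
W_single = W2 @ W1
b_single = W2 @ b1 + b2

x = rng.normal(size=2)
print(two_layer(x, identity), W_single @ x + b_single)  # equal up to rounding
print(two_layer(x, relu))  # generally different: ReLU's kink breaks the collapse
```

However many linear layers we stack, `W_single` and `b_single` can always be multiplied out like this, which is why the network never learns anything beyond a single linear feature.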

Regarding your 2nd question: no, I don't think there is any significance to where the "singularity" (the kink, where the slope changes) is, but there has to be at least one such point, at any location, so that neurons in different layers compute different functions (here, lines). Still, 0 is a "good" value: it easily divides the number line into negative and positive sides (easy to imagine), and it also helps make the computations faster.
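One way to see why the location of the kink doesn't matter much: a ReLU with its kink moved to some $z = c$ is the same as an ordinary ReLU with the bias shifted by $c$, and the bias is learned anyway. A small sketch, where `relu_kink_at`, `c`, `w`, `b` and `X` are hypothetical names and values for illustration:

```python
import numpy as np

def relu(z):
    return np.maximum(0.0, z)

def relu_kink_at(z, c):
    """Hypothetical ReLU variant whose kink sits at z = c instead of z = 0."""
    return np.maximum(0.0, z - c)

rng = np.random.default_rng(1)
X = rng.normal(size=(5, 3))       # 5 made-up examples with 3 features
w, b = rng.normal(size=3), 0.5    # made-up weights and bias
c = 2.0                           # arbitrary kink location

shifted = relu_kink_at(X @ w + b, c)
standard = relu(X @ w + (b - c))  # same outputs: the shift is absorbed into the bias
print(np.allclose(shifted, standard))  # True
```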

But with ReLU also comes the problem of dying ReLU: when $z$ for a neuron is negative for every input, the neuron always outputs 0 and its gradient is 0, so its weights stop updating and it effectively drops out of the model. (If $z$ is always positive instead, the neuron never uses its kink, and we are back to just a linear function.) To avoid dead neurons, we can use a variation of ReLU called the leaky ReLU, which computes a line with a small slope even when $z$ is negative.
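A minimal sketch of a "dead" neuron, assuming a made-up bias so negative that $z < 0$ for every example, and assuming an upstream gradient $\partial L / \partial a = 1$ for each example just to keep the arithmetic simple. `alpha` is my name for the leaky slope:

```python
import numpy as np

def relu_grad(z):
    """Derivative of ReLU w.r.t. z (taken as 0 at z = 0, a common convention)."""
    return (z > 0).astype(float)

def leaky_relu_grad(z, alpha=0.01):
    """Derivative of leaky ReLU: 1 for z > 0, alpha otherwise."""
    return np.where(z > 0, 1.0, alpha)

rng = np.random.default_rng(0)
X = rng.normal(size=(100, 3))                 # 100 made-up examples
w, b = np.array([0.1, -0.2, 0.1]), -10.0      # hypothetical weights; bias far below 0
z = X @ w + b                                 # negative for every example

upstream = np.ones_like(z)                    # assumed dL/da = 1, for illustration
grad_w_relu = X.T @ (upstream * relu_grad(z))         # all zeros: no update ever
grad_w_leaky = X.T @ (upstream * leaky_relu_grad(z))  # small but nonzero

print(grad_w_relu, grad_w_leaky)
```

With plain ReLU the weight gradient is exactly zero, so gradient descent can never pull this neuron back; the leaky version still passes a small gradient through.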

(The leaky ReLU function is given by $g(z) = \max(0.01z, z)$. Usually, we don't want our activations to grow large on the negative side, so we multiply $z$ by a very small factor, and 0.01 is a constant that does just that. Again, I don't think there is any significance to the value 0.01: it just has to be some small factor, and 0.01 is a common default, e.g. in PyTorch's `LeakyReLU`, although other libraries use different values.)
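The leaky ReLU formula $g(z) = \max(0.01z, z)$ translates directly into NumPy. A sketch, with `alpha` as my own name for the small factor; note that the `max` form only works for $0 < \alpha < 1$, because only then is $\alpha z > z$ exactly when $z < 0$:

```python
import numpy as np

def leaky_relu(z, alpha=0.01):
    """Leaky ReLU g(z) = max(alpha * z, z); valid as written for 0 < alpha < 1."""
    return np.maximum(alpha * z, z)

z = np.array([-100.0, -1.0, 0.0, 3.0])
print(leaky_relu(z))  # approximately [-1, -0.01, 0, 3]

# Equivalent piecewise form: z for positive inputs, alpha * z otherwise
piecewise = np.where(z > 0, z, 0.01 * z)
```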