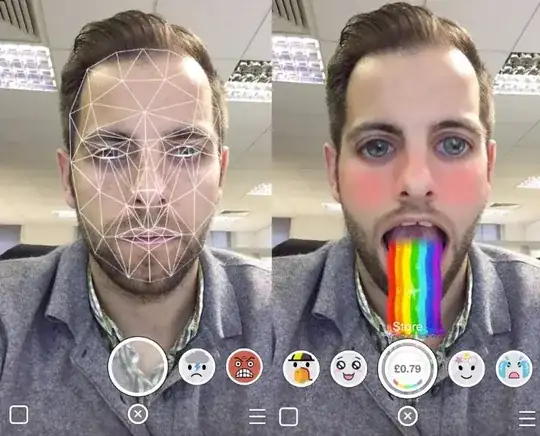

The answer is surprisingly simple: You move the vertices of the face-geometry.

To elaborate, the white wireframe mesh on the left of your image is placed so the geometry aligns with the photo. It is then texture-mapped with the photo, meaning that for each vertex in the mesh, a texture-coordinate is assigned (the point on the photo that should map to the vertex).
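As a rough illustration of the texture-mapping step, here is a minimal sketch that assumes the mesh has already been aligned and that each vertex has a known 2D pixel position on the photo. The function name `assign_texture_coords` and the sample vertices are made up for this example. The convention of `(0, 0)` at the top-left corner is also an assumption, since some graphics APIs put the origin at the bottom-left.

```python
def assign_texture_coords(vertices_px, image_width, image_height):
    """Return one (u, v) pair in [0, 1] per vertex.

    vertices_px: list of (x, y) pixel positions of the mesh vertices
    after the mesh has been aligned with the photo (hypothetical input).
    """
    return [(x / image_width, y / image_height) for x, y in vertices_px]


# Three hypothetical vertices aligned on a 200x100 photo.
aligned = [(0.0, 0.0), (100.0, 50.0), (200.0, 100.0)]
uvs = assign_texture_coords(aligned, 200, 100)
print(uvs)  # [(0.0, 0.0), (0.5, 0.5), (1.0, 1.0)]
```

These coordinates are computed once and then stay fixed; only the vertex positions change afterwards.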

With every vertex assigned a texture-coordinate, you can deform the mesh and do ordinary texture-sampling at the interpolated texture-coordinates. The image then stretches with the mesh, as if it were painted onto the head.
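To make the deformation step concrete, here is a small CPU sketch for a single triangle. It interpolates the texture-coordinates with barycentric weights and uses nearest-neighbour sampling. All names (`barycentric`, `draw_textured_triangle`) and the tiny 2x2 "photo" are illustrative assumptions. A GPU does the equivalent in hardware, usually with perspective correction and filtering, which this sketch leaves out. It also assumes the triangle is not degenerate (zero area).

```python
def barycentric(p, a, b, c):
    """Barycentric weights of point p with respect to triangle a, b, c."""
    det = (b[1] - c[1]) * (a[0] - c[0]) + (c[0] - b[0]) * (a[1] - c[1])
    w_a = ((b[1] - c[1]) * (p[0] - c[0]) + (c[0] - b[0]) * (p[1] - c[1])) / det
    w_b = ((c[1] - a[1]) * (p[0] - c[0]) + (a[0] - c[0]) * (p[1] - c[1])) / det
    return w_a, w_b, 1.0 - w_a - w_b


def draw_textured_triangle(texture, uvs, deformed, out):
    """Rasterize one deformed triangle into out, sampling from texture.

    texture, out: 2D lists indexed [row][column].
    uvs: three (u, v) texture-coordinates in [0, 1], fixed at alignment time.
    deformed: three (x, y) pixel positions of the moved vertices.
    """
    tex_h, tex_w = len(texture), len(texture[0])
    a, b, c = deformed
    for y in range(len(out)):
        for x in range(len(out[0])):
            w = barycentric((x + 0.5, y + 0.5), a, b, c)
            if min(w) < 0:
                continue  # pixel centre lies outside the triangle
            # Interpolate the texture-coordinate, then nearest-neighbour sample.
            u = sum(wi * uv[0] for wi, uv in zip(w, uvs))
            v = sum(wi * uv[1] for wi, uv in zip(w, uvs))
            tx = min(int(u * tex_w), tex_w - 1)
            ty = min(int(v * tex_h), tex_h - 1)
            out[y][x] = texture[ty][tx]


# A 2x2 "photo" stretched over a triangle covering half of a 4x4 output.
texture = [[1, 2],
           [3, 4]]
uvs = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0)]
deformed = [(0.0, 0.0), (4.0, 0.0), (0.0, 4.0)]
out = [[0] * 4 for _ in range(4)]
draw_textured_triangle(texture, uvs, deformed, out)
```

Moving the entries of `deformed` while leaving `uvs` untouched is what makes the image stretch. A full mesh repeats this for every triangle.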

If you need to do this in real time, you can do it on your graphics card; texture mapping is one of the things GPUs are really good at.

Now, if you want to do this on a video, you need to track the facial features in every frame to keep the geometry aligned with the video. This is not an easy task, but there are libraries for facial-feature tracking that can help you out.

I am a bit uncertain which of the above would be difficult, so please feel free to ask any questions.