You ask why code that is specific to a CPU must also be specific to an OS. This is actually a more interesting question than many of the answers here have assumed.

CPU Security Model

The first program run on most CPU architectures runs inside what is called the inner ring, or ring 0. How a specific CPU architecture implements rings varies, but nearly every modern CPU has at least two modes of operation: a privileged mode, which runs 'bare metal' code that can perform any legal operation the CPU supports, and an untrusted mode, which runs protected code that can only perform a defined, safe set of operations. Some CPUs have far finer granularity, and running VMs securely needs at least one or two extra rings (often labelled with negative numbers), but that is beyond the scope of this answer.

Where the OS comes in

Early single tasking OSes

In very early DOS and other early single-tasking systems, all code ran in the inner ring. Every program you ran had full power over the whole computer and, if it misbehaved, could do literally anything, including erasing all your data or, in a few extreme cases, damaging hardware (for example by setting invalid display modes on very old display screens). Worse, this could be caused by simply buggy code with no malice whatsoever.

This code was in fact largely OS agnostic. As long as you had a loader capable of loading the program into memory (pretty simple for early binary formats), and the code did not rely on any drivers but implemented all hardware access itself, it should run under any OS that runs it in ring 0. Note that a very simple OS like this is usually called a monitor if it is used only to run other programs and offers no additional functionality.

Modern multi tasking OSes

More modern operating systems, including UNIX, versions of Windows starting with NT, and various other now-obscure OSes, set out to improve on this situation. Users wanted additional features: multitasking, so they could run more than one application at once, and protection, so that a bug (or malicious code) in an application could no longer cause unlimited damage to the machine and its data.

This was done using the rings mentioned above. The OS would be the only code running in ring 0, and applications would run in the outer, untrusted rings, able to perform only the restricted set of operations the OS allowed.

However, this increased utility and protection came at a cost: programs now had to work with the OS to perform tasks they were not allowed to do themselves. They could no longer, for example, take direct control of the hard disk by accessing its memory and change arbitrary data. Instead they had to ask the OS to perform these tasks for them, so that it could check that they were allowed to perform the operation (not changing files that did not belong to them, for instance) and that the operation was valid and would not leave the hardware in an undefined state.

Each OS decided on a different implementation for these protections, partly based on the architecture the OS was designed for and partly based on the design and principles of the OS in question. UNIX, for example, focused on machines being good for multi-user use and shaped its features around that, while Windows was designed to be simpler and to run on slower hardware with a single user. The way user-space programs talk to the OS is also completely different on x86 than it is on ARM or MIPS, for example, forcing a multi-platform OS to make decisions based on the hardware it targets.

These OS-specific interactions are usually called "system calls". They cover every way a user-space program interacts with the hardware through the OS. Because they differ fundamentally with the design of the OS, a program that does its work through system calls has to be OS specific.
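To make the difference concrete, here is a rough sketch of how the same request ("write some bytes") is expressed on a few platforms. The table is illustrative only: the numbers and trap instructions are quoted from each platform's public ABI as I remember them, so treat them as assumptions and check the platform headers before relying on them. The names `WRITE_SYSCALL` and `describe_write` are made up for this example.

```python
# Illustrative table: how a user-space program asks the kernel to "write".
# Values are assumptions taken from the public ABIs; verify against headers.
WRITE_SYSCALL = {
    # (OS, architecture): (syscall number, instruction that enters the kernel)
    ("linux", "x86"): (4, "int 0x80"),
    ("linux", "x86_64"): (1, "syscall"),
    ("linux", "aarch64"): (64, "svc #0"),
    ("freebsd", "x86_64"): (4, "syscall"),
}


def describe_write(os_name, arch):
    """Return a one-line description of the write system call on a platform."""
    try:
        number, instruction = WRITE_SYSCALL[(os_name, arch)]
    except KeyError:
        return f"{os_name}/{arch}: not in this table"
    return f"{os_name}/{arch}: syscall number {number}, entered with `{instruction}`"


if __name__ == "__main__":
    for os_name, arch in WRITE_SYSCALL:
        print(describe_write(os_name, arch))
```

Even on the same CPU (x86_64 in this table) the number differs between Linux and FreeBSD, and on the same OS it differs between architectures, which is why a binary that issues system calls directly is tied to both.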

The Program Loader

In addition to system calls, each OS provides a different method of loading a program from secondary storage into memory. To be loadable by a specific OS, the program must contain a special header that describes to the OS how it may be loaded and run.

This header used to be simple enough that writing a loader for a different format was almost trivial. However, modern formats such as ELF support advanced features such as dynamic linking and weak declarations, and it is now near impossible for an OS to load binaries that were not designed for it. This means that even without the system call incompatibilities, it is immensely difficult even to place a program in RAM in a way in which it can be run.
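As a small illustration of how different these headers are, the very first bytes of an executable already tell you which family of OS it was built for. The sketch below only looks at those leading "magic" bytes; it is not a loader, the list is not exhaustive, and the names `MAGIC_NUMBERS` and `identify_executable` are invented for this example.

```python
# Leading "magic" bytes of a few common executable formats.
# The Mach-O entries are the little-endian byte order seen on disk.
MAGIC_NUMBERS = [
    (b"\x7fELF", "ELF (Linux, the BSDs and most modern UNIXes)"),
    (b"MZ", "MZ/PE (DOS and Windows)"),
    (b"\xcf\xfa\xed\xfe", "Mach-O 64-bit (macOS)"),
    (b"\xce\xfa\xed\xfe", "Mach-O 32-bit (macOS)"),
]


def identify_executable(header: bytes) -> str:
    """Guess the executable format from the first bytes of a file."""
    for magic, name in MAGIC_NUMBERS:
        if header.startswith(magic):
            return name
    return "unknown"


if __name__ == "__main__":
    import sys

    # Usage: python identify.py /path/to/some/binary
    for path in sys.argv[1:]:
        with open(path, "rb") as f:
            print(path, "->", identify_executable(f.read(4)))
```

Recognising the format is the easy part. Everything after the magic bytes (segment tables, relocation records, the list of shared libraries to resolve) is laid out differently in each format, and that is where the real work of a loader lies.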

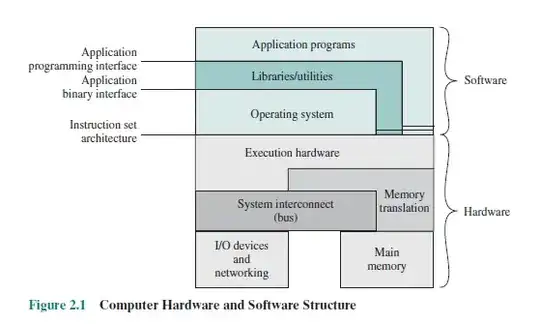

Libraries

Programs rarely use system calls directly, however. They almost exclusively gain their functionality through libraries that wrap the system calls in a friendlier form for the programming language. C, for example, has the C Standard Library, provided by glibc on Linux and similar systems, and the win32 libraries on Windows NT and above. Most other programming languages have similar libraries that wrap system functionality in an appropriate way.

These libraries can, to some degree, even overcome the cross-platform issues described above. There is a range of libraries, such as SDL, designed to provide a uniform platform to applications while internally managing calls to a wide range of OSes. So although programs cannot be binary compatible, programs that use these libraries can share common source between platforms, making porting as simple as recompiling.
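A toy version of what such a library does internally might look like the sketch below. The function `null_device` is hypothetical and written only for illustration; it hides one small OS difference (the name of the "discard everything" device) behind a single call, in the same spirit as Python's own `os.devnull`.

```python
import os


def null_device(os_name: str = os.name) -> str:
    """Return the path of the null device for the given OS family.

    os_name uses Python's os.name convention: "nt" for Windows,
    "posix" for Linux, the BSDs, macOS and other UNIX-like systems.
    """
    if os_name == "nt":
        return "nul"
    return "/dev/null"


if __name__ == "__main__":
    # The caller writes the same source on every platform; only the
    # library knows which OS-specific name is being used underneath.
    with open(null_device(), "w") as sink:
        sink.write("this text is discarded on any supported OS\n")
```

The application code is identical everywhere; the per-OS knowledge lives in one place inside the library, which is what lets the same source be recompiled for each platform.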

Exceptions to the Above

Despite all I have said here, there have been attempts to overcome the limitation of not being able to run programs on more than one operating system. A good example is the Wine project, which has successfully reimplemented the win32 program loader, binary format and system libraries, allowing Windows programs to run on various UNIXes. There is also a compatibility layer that allows several BSD UNIX operating systems to run Linux software, and of course Apple's own shim for running old MacOS software under MacOS X.

However, these projects depend on enormous amounts of manual development effort. Depending on how different the two OSes are, the difficulty ranges from a fairly small shim to near-complete emulation of the other OS, which is often more complex than writing an entire operating system. This is the exception, not the rule.
