It's my first post here, so I apologize if I've broken a rule!

I'm reading *Introduction to Machine Learning* and got stuck on the VC dimension. Here's a quote from the book:

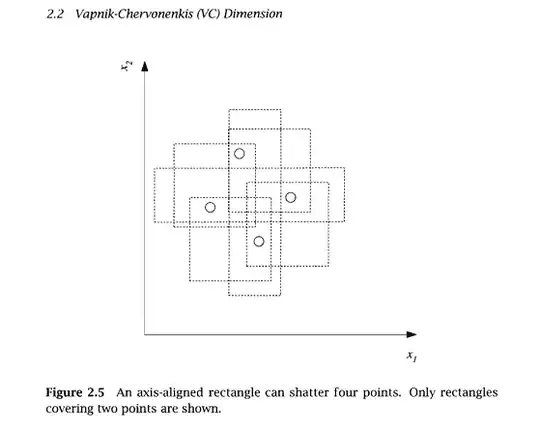

"...we see that an axis-aligned rectangle can shatter four points in two dimensions. Then VC(H), when H is the hypothesis class of axis-aligned rectangles in two dimensions, is four. In calculating the VC dimension, it is enough that we find four points that can be shattered; it is not necessary that we be able to shatter any four points..."

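To make the quote concrete, here is the standard definition written out with explicit quantifiers (the symbols $S$, $N$, $x_t$, $y_t$ are my own choice, not the book's):

$$
H \text{ shatters } S = \{x_1, \dots, x_N\} \iff \forall\, (y_1, \dots, y_N) \in \{0, 1\}^N \ \exists\, h \in H \ \text{such that}\ h(x_t) = y_t \text{ for } t = 1, \dots, N
$$

$$
VC(H) = \max \{\, N : \exists\, S \text{ with } |S| = N \text{ such that } H \text{ shatters } S \,\}
$$
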
I don't understand this. If it's enough to find some configuration of points that can be shattered, why can't we just take the rectangle with the positive examples from the image above, put $n$ more positive points inside it, and then say that $VC(H)$ has increased by $n$? And if every configuration must be shatterable, why don't we consider four points placed on a line, which in general cannot be shattered by a rectangle?
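
To test configurations like these, here is a small brute-force sketch. It assumes closed axis-aligned rectangles (points on the border count as inside) and labels written as 1 = positive, 0 = negative; the function names are my own. For each of the $2^N$ labelings it checks only the bounding box of the positive points: every rectangle that contains all the positives also contains that box, so if a negative point falls inside the box, no rectangle can produce that labeling.

```python
from itertools import product


def rectangle_can_realize(points, labels):
    """Return True if some closed axis-aligned rectangle contains exactly the positive points."""
    positives = [p for p, label in zip(points, labels) if label]
    if not positives:
        # A rectangle placed away from every point labels them all negative.
        return True
    # Any rectangle containing all positives also contains their bounding box,
    # so testing the bounding box alone is enough.
    xmin = min(x for x, _ in positives)
    xmax = max(x for x, _ in positives)
    ymin = min(y for _, y in positives)
    ymax = max(y for _, y in positives)
    for (x, y), label in zip(points, labels):
        if not label and xmin <= x <= xmax and ymin <= y <= ymax:
            return False  # a negative point is trapped inside the box
    return True


def failing_labelings(points):
    """Every labeling (1 = positive, 0 = negative) that no rectangle can produce."""
    return [labels for labels in product((0, 1), repeat=len(points))
            if not rectangle_can_realize(points, labels)]


def is_shattered(points):
    """True if every one of the 2^N labelings can be produced by some rectangle."""
    return not failing_labelings(points)


diamond = [(0, 1), (1, 0), (0, -1), (-1, 0)]
print(is_shattered(diamond))                                     # True
print(is_shattered(diamond + [(0, 0)]))                          # False
print((1, 1, 1, 1, 0) in failing_labelings(diamond + [(0, 0)]))  # True
print(is_shattered([(0, 0), (1, 0), (2, 0), (3, 0)]))            # False
```

The labeling `(1, 1, 1, 1, 0)` is the four outer points positive with an extra point in the middle negative, and `(1, 0, 1, 0)` is the alternating labeling of four collinear points.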

The same goes for the linear classifier example in the Wikipedia article on VC dimension: in their image, four points are impossible to shatter, but we can come up with a layout where it is possible. Conversely, we can put three points on a line (as "+", "-", "+"), and then it won't be possible to separate the positives from the negatives with a linear classifier.
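
For the linear classifier case, here is a similar exact check in 2D. It is a sketch that assumes the classifier has a bias term (it labels points by which side of a line they fall on) and that no point may lie on the line itself; the function names are my own. Rather than training anything, it tries a finite set of candidate lines: the perpendicular bisector of each positive/negative pair and, for each pair of same-class points, the line parallel to their segment and halfway to a point of the other class. As far as I can tell this set is sufficient in 2D, because a maximum-margin separating line, when one exists, always has one of these two forms. Either way, a `True` result is always backed by a line that was actually verified.

```python
from itertools import combinations, product


def _dot(u, v):
    return u[0] * v[0] + u[1] * v[1]


def _candidate_lines(pos, neg):
    """Yield (w, c) describing lines {x : 2 * w.x = c} that may be max-margin separators."""
    # Vertex-vertex case: perpendicular bisector of a positive and a negative point.
    for p in pos:
        for n in neg:
            w = (p[0] - n[0], p[1] - n[1])
            yield w, _dot(w, p) + _dot(w, n)
    # Vertex-edge case: line parallel to segment ab (same class), halfway to point c (other class).
    for same, other in ((pos, neg), (neg, pos)):
        for a, b in combinations(same, 2):
            w = (a[1] - b[1], b[0] - a[0])  # normal to the direction b - a
            for c in other:
                yield w, _dot(w, a) + _dot(w, c)


def halfplane_can_realize(points, labels):
    """True if some line puts all positives strictly on one side and all negatives on the other."""
    pos = [p for p, label in zip(points, labels) if label]
    neg = [p for p, label in zip(points, labels) if not label]
    if not pos or not neg:
        return True  # a line far away from all points gives a constant labeling
    for w, c in _candidate_lines(pos, neg):
        for sign in (1, -1):  # try both orientations of the candidate line
            if (all(sign * (2 * _dot(w, p) - c) > 0 for p in pos)
                    and all(sign * (2 * _dot(w, n) - c) < 0 for n in neg)):
                return True
    return False


def is_shattered_by_halfplanes(points):
    return all(halfplane_can_realize(points, labels)
               for labels in product((0, 1), repeat=len(points)))


print(is_shattered_by_halfplanes([(0, 0), (1, 0), (0, 1)]))          # True
print(halfplane_can_realize([(0, 0), (1, 0), (2, 0)], (1, 0, 1)))    # False
print(is_shattered_by_halfplanes([(0, 0), (1, 0), (0, 1), (1, 1)]))  # False
```

Integer coordinates keep every comparison exact, since the candidate lines are scaled by 2 to avoid dividing by 2 at the midpoints.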

Can anyone explain where my mistake is?