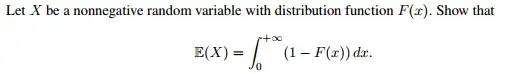

Can someone give me some pointers on how to prove this relation?

Here's a discrete version (also a hint, I guess). Take a non-negative integer-valued $X$ with $p_k = P(X = k)$, so that $EX = \sum_{k=1}^{\infty} k p_k$. Each $k p_k = p_k + p_k + \ldots + p_k$ ($k$ times), and since all terms are non-negative you can regroup the sum as $(p_1+ p_2+ \ldots) + (p_2 + p_3 + \ldots) + \ldots = P(X \geq 1) + P(X \geq 2) + \ldots = \sum_{k=1}^{\infty}P(X \geq k)$. Because $P(X \geq k) = P(X > k-1) = 1 - F_X(k-1)$, this equals $\sum_{k=0}^{\infty} (1-F_X(k))$.
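If it helps to see the regrouping on actual numbers, here is a small sanity check. The pmf below is made up purely for illustration, and the function names are mine; it only shows that the direct sum and the tail sum agree on one example, it is not a proof.

```python
from fractions import Fraction

def expectation_direct(pmf):
    """E[X] computed as sum over k of k * P(X = k)."""
    return sum(k * p for k, p in pmf.items())

def expectation_tail_sum(pmf):
    """E[X] computed as sum over k >= 1 of P(X >= k)."""
    max_k = max(pmf)
    return sum(
        sum(p for j, p in pmf.items() if j >= k)  # P(X >= k)
        for k in range(1, max_k + 1)
    )

# Hypothetical pmf on {0, 1, 2, 3}, chosen only for illustration.
pmf = {
    0: Fraction(1, 10),
    1: Fraction(2, 10),
    2: Fraction(3, 10),
    3: Fraction(4, 10),
}

direct = expectation_direct(pmf)
tail = expectation_tail_sum(pmf)
print(direct, tail)  # prints "2 2"
```

Exact fractions are used so the two sums can be compared with `==` rather than up to rounding.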

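Let $p$ be the probability measure and assume $X \geq 0$. We have that $\int_{0}^{\infty}\left[1-F\left(x\right)\right]dx=\int_{0}^{\infty}\Pr\left[X>x\right]dx=\int_{0}^{\infty}\left[\int1_{X>x}dp\right]dx $. The integrand is non-negative, so Fubini's theorem (in Tonelli's form) lets us swap the order of integration: $\int_{0}^{\infty}\left[\int1_{X>x}dp\right]dx=\int\left[\int_{0}^{\infty}1_{X>x}dx\right]dp=\int Xdp=E\left[X\right] $. The inner integral equals $X$ because, for fixed $X \geq 0$, the indicator $1_{X>x}$ is $1$ exactly for $0 \leq x < X$, so $\int_{0}^{\infty}1_{X>x}dx=\int_{0}^{X}dx=X$.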
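For a quick numerical check of the continuous identity, one can take an exponential variable, where $1-F(x)=e^{-\lambda x}$ and $E[X]=1/\lambda$ are both known in closed form. This is only a sketch: the rate, the truncation point and the step count are arbitrary choices of mine, and the integral is approximated with a plain trapezoid rule.

```python
import math

def survival_exponential(x, rate):
    """1 - F(x) for an exponential distribution with the given rate."""
    return math.exp(-rate * x)

def trapezoid(f, a, b, n):
    """Composite trapezoid rule for the integral of f over [a, b] with n steps."""
    h = (b - a) / n
    total = 0.5 * (f(a) + f(b))
    for i in range(1, n):
        total += f(a + i * h)
    return total * h

rate = 2.0            # assumed rate, so E[X] = 1 / rate = 0.5
upper = 50.0 / rate   # truncation point; the tail beyond it is about e^{-50}
steps = 200_000

integral = trapezoid(lambda x: survival_exponential(x, rate), 0.0, upper, steps)
expected = 1.0 / rate
print(integral, expected)
```

The two printed values should agree to several decimal places; the small gap comes from the trapezoid rule and from cutting the integral off at a finite upper limit.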