Each event has two possible outcomes: a and b.

Each event is independent.

Outcome a has an $X\%$ chance of happening.

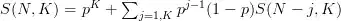

If we run $Y$ such events, what is the probability of getting $K$ a outcomes in a row at least once?
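One way to set up the general computation, offered as a sketch that assumes independence: write $p = X/100$ and let $q_n$ be the probability that the first $n$ events contain no run of $K$ consecutive a outcomes. For $n > K$, the first such run ends exactly at event $n$ only if event $n-K$ is b, events $n-K+1, \dots, n$ are all a, and events $1, \dots, n-K-1$ contain no run. Subtracting that probability from $q_{n-1}$ gives:

$$
q_n =
\begin{cases}
1, & 0 \le n < K,\\
1 - p^K, & n = K,\\
q_{n-1} - (1-p)\,p^K\,q_{n-K-1}, & n > K,
\end{cases}
\qquad
P(\text{at least one run of } K \text{ a's in } Y \text{ events}) = 1 - q_Y.
$$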

To put it in practical terms, imagine a series of coin tosses. Each toss has two possible outcomes: a = heads and b = tails. Outcome a = heads has a $50\%$ chance. If we run $Y = 100$ coin tosses, what is the probability of getting $K = 10$ heads in a row at least once?
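A minimal sketch of how the coin example could be computed for any $X$, $Y$, $K$, assuming independent events. It uses a recurrence on $q_n$, the probability that the first $n$ events contain no run of $K$ a's: $q_n = 1$ for $n < K$, $q_K = 1 - p^K$, and $q_n = q_{n-1} - (1-p)p^K q_{n-K-1}$ for $n > K$, with $p = X/100$. The function name `prob_run_at_least_once` is hypothetical.

```python
def prob_run_at_least_once(p: float, y: int, k: int) -> float:
    """Probability of at least one run of k consecutive 'a' outcomes in y
    independent events, where each event is 'a' with probability p.

    q[n] is the probability that the first n events contain no such run.
    """
    if k < 1:
        raise ValueError("k must be at least 1")
    q = [1.0] * (y + 1)  # q[n] = 1 for n < k: too few events for a run
    for n in range(k, y + 1):
        if n == k:
            q[n] = 1.0 - p ** k
        else:
            # The first run ends exactly at event n: event n-k is 'b',
            # events n-k+1..n are 'a', and events 1..n-k-1 contain no run.
            q[n] = q[n - 1] - (1.0 - p) * p ** k * q[n - k - 1]
    return 1.0 - q[y]


# Coin example from the question: X = 50 (p = 0.5), Y = 100, K = 10.
print(prob_run_at_least_once(0.5, 100, 10))
```

For small $Y$ the result can be checked against brute-force enumeration of all $2^Y$ sequences, which is a cheap way to gain confidence in the recurrence before using it for large $Y$.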

I'm using the practical example only to express the idea more clearly. I'm interested in the general formulas and computations, so that I can run the calculations for any $X$, $Y$, or $K$.

I would also be very interested in knowing how the probabilities would change if the events were not independent. What if the more a outcomes we have in a row, the bigger the chance of getting an a in the next event? What if it's the other way around, and the more a outcomes we have in a row, the lower the chance of getting another a?
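One possible model for the dependent case, and it is an assumption since the question does not fix how the dependence works: the chance of a on the next event depends only on the current streak length $s$ (the number of a's in a row just before that event). The streak length then behaves like a Markov chain with states $0, \dots, K-1$ plus an absorbing "run reached" state, and the probability can be computed by pushing probability mass through those states for $Y$ steps. `hot_hand` and `cold_hand` are hypothetical examples of the two scenarios; the 5-point step and the 95%/5% limits are arbitrary.

```python
from typing import Callable


def prob_run_streak_dependent(p_of_streak: Callable[[int], float], y: int, k: int) -> float:
    """Probability of at least one run of k consecutive 'a' outcomes in y events,
    where the next event is 'a' with probability p_of_streak(s) and s is the
    current number of 'a' outcomes in a row (assumed model). Requires k >= 1.
    """
    state = [0.0] * k  # state[s]: no run of k yet and current streak is s
    state[0] = 1.0
    hit = 0.0          # probability mass that has already reached a run of k
    for _ in range(y):
        new_state = [0.0] * k
        for s, mass in enumerate(state):
            p = p_of_streak(s)
            new_state[0] += mass * (1.0 - p)  # 'b' resets the streak
            if s + 1 == k:
                hit += mass * p               # 'a' completes the run
            else:
                new_state[s + 1] += mass * p  # 'a' extends the streak
        state = new_state
    return hit


def hot_hand(s: int) -> float:
    # Hypothetical: each 'a' in the current streak adds 5 points, capped at 95%.
    return min(0.5 + 0.05 * s, 0.95)


def cold_hand(s: int) -> float:
    # Hypothetical: each 'a' in the current streak removes 5 points, floored at 5%.
    return max(0.5 - 0.05 * s, 0.05)


for name, f in [("independent", lambda s: 0.5), ("hot hand", hot_hand), ("cold hand", cold_hand)]:
    print(name, prob_run_streak_dependent(f, 100, 10))
```

With a constant `p_of_streak` this reduces to the independent case. If the dependence were on something other than the current streak, such as the total number of a outcomes so far, the state would have to track that quantity as well.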