This takes some inspiration from Carlo Morpurgo's answer here, though I couldn't replicate his method exactly. My results are more general and allow for construction of solutions. As a summary of results: For $c\in(-1,1)$ there exists a unique function $f(x)$ such that $f'(x) = f(f(x))$ and $f(c)=c$. This function is analytic on an interval around $c$. For $c\ge 1$, there is no analytic solution. If $c\in(0,1)$, then the interval of analyticity $(c-R,c+R)$ satisfies $c+R>1$, $f$ has no fixed point in $(c,c+R)$, and $\lim\limits_{x\rightarrow (c+R)^-} f(x) = c+R$.

Theorem 1: For any $c\in(-1,1)$ there exists a unique real solution to $f'(x) = f(f(x))$ in a neighborhood of $c$ such that $f(c) = c$.

Proof: First we show existence. Pick $c\in(-1,1)$. Then define the operator $$

Tf(x) = c+\int_c^x f(f(t)) dt

$$

Pick $0<\epsilon < \min(1-|c|,\frac12)$ and let $B$ be the space of continuous functions from $[c-\epsilon,c+\epsilon]$ to itself. We first claim that $T$ maps $B$ into itself, so that $T$ is a well-defined operator on $B$. Observe that $Tf$ is always differentiable, and furthermore:\begin{eqnarray}

||(Tf)'||_\infty &\le& ||f(f(x))||_\infty\le |c|+\epsilon

\end{eqnarray}

Since its derivative is bounded by $|c|+\epsilon < 1$, and $Tf(c) = c$, we conclude that $Tf$ does in fact map this interval into itself, hence $B$ is closed under $T$.

We now show that $T$ is a contraction under the sup norm on $T(B)$: Observe that if $f\in T(B)$, then $||f'||_\infty \le |c|+\epsilon$. Then we have \begin{eqnarray}

||Tf - Tg||_\infty &=& \left|\left|\int_c^xf(f(t))-g(g(t)) dt\right|\right|_\infty\le \epsilon||f(f(t)) - g(g(t))||_\infty \\

&=&\epsilon||f(g(t)) - g(g(t)) + f(f(t))-f(g(t))||_\infty \\

&\le& \epsilon||f(g(t))-g(g(t))||_\infty + \epsilon||f(f(t)) - f(g(t))||_\infty\\

&\le& \epsilon ||f-g||_\infty + \epsilon||f'||_\infty ||f-g||_\infty\\

&\le& \epsilon (1+|c|+\epsilon) ||f-g||_\infty\\

\end{eqnarray}

Observe that $\epsilon(1+|c|+\epsilon) < \frac12(1+|c|+1-|c|) = 1$, hence $T$ is a contraction under the sup norm. Thus Banach's fixed point theorem implies existence of a unique solution to $Tf = f$ in $B$. ◼
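The iteration $f_{k+1} = Tf_k$ behind this proof can be tried numerically. The following is only a rough sketch: the uniform grid, the piecewise-linear interpolation, the trapezoid rule, and the names `picard_solve`, `n_grid`, `n_iter` are all my own illustrative choices, not part of the argument above.

```python
def picard_solve(c, eps, n_grid=401, n_iter=60):
    """Iterate Tf(x) = c + integral_c^x f(f(t)) dt on [c - eps, c + eps].

    Assumes n_grid is odd so that x = c is a grid point.
    Returns the grid and the approximate fixed point of T on that grid.
    """
    a, b = c - eps, c + eps
    h = (b - a) / (n_grid - 1)
    xs = [a + i * h for i in range(n_grid)]
    mid = (n_grid - 1) // 2              # index of the grid point x = c

    def interp(vals, x):
        # Piecewise-linear interpolation of grid values, clamped to [a, b].
        x = min(max(x, a), b)
        i = min(int((x - a) / h), n_grid - 2)
        t = (x - xs[i]) / h
        return (1 - t) * vals[i] + t * vals[i + 1]

    f = [c] * n_grid                     # start from the constant function c
    for _ in range(n_iter):
        g = [interp(f, v) for v in f]    # g = f(f(x)) on the grid
        new = [0.0] * n_grid
        new[mid] = c                     # Tf(c) = c
        for i in range(mid + 1, n_grid): # trapezoid rule to the right of c
            new[i] = new[i - 1] + 0.5 * h * (g[i - 1] + g[i])
        for i in range(mid - 1, -1, -1): # trapezoid rule to the left of c
            new[i] = new[i + 1] - 0.5 * h * (g[i + 1] + g[i])
        f = new
    return xs, f


xs, f = picard_solve(0.5, 0.4)           # eps = 0.4 < min(1 - |c|, 1/2)
mid = len(xs) // 2
print(f[mid], (f[mid + 1] - f[mid - 1]) / (xs[mid + 1] - xs[mid - 1]))
```

With $c=\frac12$ and $\epsilon = 0.4$ the contraction constant $\epsilon(1+|c|+\epsilon)$ is $0.76$, so a few dozen iterations should be plenty; the printed slope at $c$ should be close to $f(f(c)) = c$.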

Theorem 2: The solutions to $f'(x) = f(f(x))$ given by Theorem 1 are analytic, and the Taylor coefficients at $x=c$ satisfy the recurrence relation $$

f^{(n+1)}(c) = \sum_{k=1}^n f^{(k)}(c) B_{n,k}(f'(c),\dots,f^{(n-k+1)}(c))

$$

where $B_{n,k}$ are the incomplete Bell polynomials.

Proof: The delay differential equation clearly implies that $f$ is infinitely differentiable at $c$. Faà di Bruno's formula implies the claimed recurrence relation. Now, let us suppose $c>0$. One can clearly see that on $[c-\epsilon,c+\epsilon]$, $f^{(k)}(x) > 0$ for all $k\in\mathbb{N}$. Picking $x_0\in (c-\epsilon,c+\epsilon)$, we can use Taylor's theorem to obtain for any $x\in (x_0,c+\epsilon)$

$$

f(x) = \sum_{k=0}^N \frac{f^{(k)}(x_0) (x - x_0)^k}{k!} + \int_{x_0}^x \frac{f^{(N+1)}(t)}{N!} (x-t)^N dt

$$

(see the integral form of the remainder on Wikipedia). The remainder term is clearly positive for all $N$. Since $f(x)$ is finite, this implies that the Taylor series converges at $x$, since the partial sums are all bounded above by $f(x)$. Therefore $f$ has convergent Taylor series around any $x_0$ on $(c-\epsilon,c+\epsilon)$, and in fact, the series around $c$ must have radius of convergence at least $\epsilon$. Since this series is convergent and formally solves the equation, it must equal $f$ by uniqueness of the solution.

Now suppose $c< 0$. Let $g(x)$ be the solution with $g(|c|) = |c|$. Observe that we still have the recurrence$$

f^{(n+1)}(c) = \sum_{k=1}^n f^{(k)}(c) B_{n,k}(f'(c),\dots,f^{(n-k+1)}(c))

$$

Because all the coefficients of the Bell polynomials are non-negative, we can prove inductively using the recurrence that $|f^{(n)}(c)|\le g^{(n)}(|c|)$. As we have seen, $g$ is analytic about $|c|$ and its series about $|c|$ has radius of convergence at least $\epsilon$, which implies that $f$'s series must have radius of convergence at least $\epsilon$ around $c$, whence we can again use uniqueness to argue that this series converges to $f$. ◼
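The recurrence of Theorem 2 can be evaluated exactly with rational arithmetic. The sketch below is one possible way to do it, assuming the standard recursion $B_{m,k} = \sum_{i=1}^{m-k+1}\binom{m-1}{i-1}x_i B_{m-i,k-1}$ for the incomplete Bell polynomials; the function names `bell_table` and `derivatives_at_fixed_point` are hypothetical helpers introduced here for illustration.

```python
from fractions import Fraction
from math import comb


def bell_table(x, n):
    """Incomplete Bell polynomials B[m][k](x_1, ..., x_{m-k+1}) for 0 <= k <= m <= n.

    x is a list with x[i - 1] = x_i.
    """
    B = [[Fraction(0)] * (n + 1) for _ in range(n + 1)]
    B[0][0] = Fraction(1)
    for m in range(1, n + 1):
        for k in range(1, m + 1):
            B[m][k] = sum(
                comb(m - 1, i - 1) * x[i - 1] * B[m - i][k - 1]
                for i in range(1, m - k + 2)
            )
    return B


def derivatives_at_fixed_point(c, order):
    """Return [f(c), f'(c), ..., f^(order)(c)] for f' = f(f), f(c) = c."""
    c = Fraction(c)
    d = [c, c]                            # f(c) = c and f'(c) = f(f(c)) = c
    for n in range(1, order):
        B = bell_table(d[1:], n)          # uses x_1..x_n = f'(c)..f^(n)(c)
        # f^(n+1)(c) = sum_k f^(k)(c) * B_{n,k}(f'(c), ..., f^(n-k+1)(c))
        d.append(sum(d[k] * B[n][k] for k in range(1, n + 1)))
    return d[: order + 1]


for c in (Fraction(1, 2), Fraction(-1, 2)):
    print([float(v) for v in derivatives_at_fixed_point(c, 5)])
```

Rebuilding the Bell table at every step is wasteful but keeps the sketch short; for a few dozen derivatives it makes no practical difference.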

The positivity of the coefficients when $c>0$ can actually give us a lot more information about each $f$:

Theorem 3: Let $c\in(0,1)$ and let $f(x)$ be the function such that $f'(x) = f(f(x))$ and $f(c) = c$. Let $R$ be the radius of convergence of $f$'s power series at $c$. Then $R\in(1-c,\infty)$ and furthermore $f$ has no fixed points in $(0,c+R)$ other than $c$ and

$$\lim_{x\rightarrow (c+R)^-}f(x) = c+R$$

Proof: Because the coefficients of $f$ at $c$ are all positive, when the series fails to be analytic, it must fail to be analytic at the point $c+R$, and furthermore $f$ is strictly increasing on $(c,c+R)$. In particular, $f$ is invertible on the largest possible interval that we can extend it to.

First, we show that $f$ cannot have another fixed point in the interval $(0,1)$, since if it did, at least one of these other fixed points would have to have derivative $>1$, which is not possible as $f'(x) = f(f(x))$. Furthermore, $f'(c) = c<1$ implies $f(x) < x$ for $x\in(c,1)$. To see that $f$ cannot have any fixed points in $[1,c+R)$, we note that $f(x) -x$ is a convex function (as $f''>0$), hence it can have at most two zeros in $[c,c+R]$, which we see are the end points of this interval.

Next, we show that $\lim_{x\rightarrow (c+R)^-} f(x) < c+R$ is impossible: Suppose we can analytically extend $f$ to $(c,b)$ and $f$ continuously extends to $b$, with $f(b)<b$. Let $G(z) = c + \int_c^z \frac1{f(t)} dt$. Then we can define $G$ analytically on $(c,b)$ as well. However observe that $G'(z) = \frac1{f(z)} = \frac1{f'(f^{-1}(z))} = \frac{d}{dz} f^{-1}(z)$, and hence $G(z) = f^{-1}(z)$ on $(c,f(b))$. Furthermore, $G$ is increasing on $(c,b)$, which implies that we can extend $f$ further by defining $f(z) = G^{-1}(z)$ for $z\in[ b, G(b))$. Thus, we must have $f(c+R) \ge c+R$, otherwise we would be able to analytically extend $f$ beyond $(c,c+R)$.

Similarly, we show that $\lim_{x\rightarrow (c+R)^-} f(x) > c+R$ is impossible: Suppose that $f$ analytically extends up to $(c,b)$ and $f(b) > b$ ($f(b)$ is potentially infinite here). Then we can define $f^{-1}(z)$ analytically up to $(c,f(b))$, and hence $H(z) = \frac1{{f^{-1}}'(z)}$ is analytic on $(c,f(b))$. However, observe that for $x\in(c,b)$, we have $H(x) = f(x)$ by use of the functional equation for $f$, hence we can extend $f$ up to the interval $(c,f(b))$ by defining $f(z) = \frac1{{f^{-1}}'(z)}$ for $z\in[b,f(b))$. Thus, we cannot have $\lim_{x\rightarrow (c+R)^-} f(x)> c+R$.

To complete the proof, we must show the claim that $R\in (1-c,\infty)$:

If $R=\infty$, then $f$ would be entire. Because its power series at $c$ has all positive coefficients, $f$ must grow faster than any polynomial, and in particular $f$ must have another fixed point in the interval $(c,\infty)$. Let $c'$ be this fixed point. As we have seen, necessarily $c'\ge1$. Using the same argument applied above to show that the power series converges for negative $c$, we can see that for any $c''\in\mathbb{C}$ such that $|c''|\le c'$, the formal power series corresponding to $f'(z) = f(f(z))$ and $f(c'') = c''$ in fact defines an entire function. Furthermore, the formal power series must define the unique solution that is analytic in a neighborhood of $c''$. However, JJacquelin's answer provides two examples of solutions to the equation of the form $\alpha z^\beta$ that are not entire (they aren't analytic at $0$) but have fixed points with absolute value $1$ (of the form $\alpha^{1/\beta}$), and these functions are analytic in a neighborhood of those points, hence we cannot have an entire function with $f'(z) = f(f(z))$ and $f(c') = c'$ for some $c'\ge 1$.
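A quick numerical check that power-law functions $\alpha z^\beta$ can satisfy the equation and have a fixed point of modulus $1$. The specific values used here are my own derivation rather than a quote from JJacquelin's answer: substituting $\alpha z^\beta$ into $f' = f\circ f$ and matching exponents and coefficients suggests $\beta^2-\beta+1=0$ and $\alpha^\beta=\beta$, so the sketch takes $\beta = e^{i\pi/3}$ and $\alpha = \beta^{1/\beta}$ on the principal branch (the complex conjugate gives the second example).

```python
import cmath

# Assumed parameters (derived above, principal branch throughout):
beta = cmath.exp(1j * cmath.pi / 3)        # root of beta^2 - beta + 1 = 0
alpha = cmath.exp(cmath.log(beta) / beta)  # alpha = beta**(1/beta)


def f(z):
    """Power-law candidate alpha * z**beta on the principal branch."""
    return alpha * cmath.exp(beta * cmath.log(z))


def residual(z, h=1e-6):
    """|f'(z) - f(f(z))|, with f' approximated by a central difference."""
    deriv = (f(z + h) - f(z - h)) / (2 * h)
    return abs(deriv - f(f(z)))


z0 = beta                       # candidate fixed point with |z0| = 1
print(abs(f(z0) - z0))          # fixed-point defect
print(residual(z0))             # equation residual at the fixed point
print(residual(z0 + 0.05))      # and at a nearby point
```

All three printed numbers should be at the level of rounding and finite-difference error, which is consistent with a solution that is analytic near a fixed point of modulus $1$ but has a branch point at $0$.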

If $R < 1-c$, we have $c+R<1$, but in order for $f(c+R)$ to equal $c+R$, we would have to have $f'(c+R)\ge 1$ (since it is approaching this point from below the line $y=x$), which is a contradiction.

Finally, we must show that $R\ne 1-c$. Let $R(c)$ be $R$ seen as a function of $c$, and $f_c(x)$ be the solution corresponding to $c$. We can see immediately that $R(c)$ must be a nonincreasing function of $c$, as $f_c^{(n)}(c)$ is always an increasing function of $c$ for each $n$. In fact, we can do much better, because $f_c(x)$ increases with $c$ for fixed $x$, hence if $f_c(x_0) = x_0$, we would have $f_{c'}(x_0) > x_0$ for $c'>c$, which would imply that $x_0$ must be outside of $f_{c'}$'s region of analyticity (since $f_c(x) < x$ for any $x\in(c,c+R(c))$), hence $x_0 = c + R(c) > c' + R(c')$ which implies $R(c) + c$ is a decreasing function of $c$. Thus, if $R(c) = 1-c$ for some $c$, we would have $R(c') + c' < R(c) + c = 1$ for all $c'>c$, but this would imply $R(c') < 1-c'$, which we have already proven is impossible. ◼

Theorem 4: For $c\ge 1$, there is no function satisfying $f'(x) = f(f(x))$, $f(c) = c$ and $f$ is analytic in a neighborhood of $c$.

Proof: Suppose $c=1$. We can use the recurrence relation from Theorem 2 to formally find $f^{(n)}(1)$. We have already seen that $f$ cannot be entire. One can show inductively that $f^{(n)}(1) \ge (n-1)!$, which implies that $f$ must diverge to infinity at the right endpoint of its interval of convergence, since the $n$th coefficient of its Taylor series at $z=1$ is bounded below by $\frac1n$. Now suppose $f(x)$ is analytic on some $(1,b)$, with $f(b) = \infty$. Note that $f'(x) > 1$ for $x$ in $(1,b)$, but as we have seen we also have $f(x) = \frac1{{f^{-1}}'(x)}$, which is finite-valued at $b$, since $f'(f^{-1}(b)) \ne 0$. This is a contradiction, hence $f$ cannot be analytic at $1$.

Again using the fact that the formal terms $f_c^{(n)}(c)$ are increasing functions of $c$, we can see that if $f_1(x)$ has a power series with radius of convergence $0$ at $1$, then $f_c(x)$ must have radius of convergence $0$ at $c$ for any $c>1$ as well.
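As a sanity check of the bound $f^{(n)}(1)\ge (n-1)!$, here are the first few formal derivatives at $c=1$, worked out by hand from the recurrence in Theorem 2 (using $B_{4,1}=x_4$, $B_{4,2}=4x_1x_3+3x_2^2$, $B_{4,3}=6x_1^2x_2$, $B_{4,4}=x_1^4$ for the last one):

$$
f'(1)=1,\quad f''(1)=1,\quad f^{(3)}(1)=2,\quad f^{(4)}(1)=7,\quad f^{(5)}(1)=7+11+12+7=37
$$

to be compared with $(n-1)! = 1,\,1,\,2,\,6,\,24$ for $n=1,\dots,5$. This only confirms the first five cases and is not a substitute for the induction.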

The recurrence from Theorem 2 gives us an effective way to compute the Taylor series of $f$. Furthermore, the same recurrence applies to the derivatives at $c+R$; if we define $f^{(n)}(c+R) = \lim\limits_{x\rightarrow (c+R)^-} f^{(n)}(x)$, we'll have $$

f^{(n+1)}(c+R) = \sum_{k=1}^n f^{(k)}(c+R) B_{n,k}(f'(c+R),\dots,f^{(n-k+1)}(c+R))

$$

which gives a formal power series of $f$ around $c+R$, but by Theorem 4, this series has radius of convergence $0$.
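To actually construct a solution from its Taylor series, one can also skip the Bell polynomials and match coefficients in $f'(c+h) = f(f(c+h))$ directly with truncated power series arithmetic. This is a swapped-in technique that should produce the same numbers as the recurrence; the helper names (`taylor_coeffs`, `poly`, `poly_deriv`, `residual`) and the truncation order are assumptions of this sketch.

```python
def taylor_coeffs(c, order):
    """Return a[0..order] with a[n] = f^(n)(c)/n! for f' = f(f), f(c) = c."""
    a = [float(c)] + [0.0] * order
    for n in range(order):
        # u(h) = f(c + h) - c, truncated to degree n (a[1..n] are known so far)
        u = [0.0] + a[1:n + 1]
        comp = [a[0]] + [0.0] * n          # coefficients of f(f(c + h)) up to h^n
        power = [1.0] + [0.0] * n          # u**0
        for k in range(1, n + 1):
            # power <- power * u, truncated to degree n
            power = [sum(power[i] * u[j - i] for i in range(j + 1))
                     for j in range(n + 1)]
            for j in range(n + 1):
                comp[j] += a[k] * power[j]
        # match h^n on both sides: (n + 1) * a[n + 1] = [h^n] f(f(c + h))
        a[n + 1] = comp[n] / (n + 1)
    return a


def poly(a, c, x):
    """Evaluate the Taylor polynomial sum_n a[n] (x - c)^n."""
    h = x - c
    return sum(coef * h**n for n, coef in enumerate(a))


def poly_deriv(a, c, x):
    """Evaluate the derivative of the Taylor polynomial at x."""
    h = x - c
    return sum(n * coef * h**(n - 1) for n, coef in enumerate(a) if n > 0)


def residual(a, c, x):
    """|p'(x) - p(p(x))| for the Taylor polynomial p."""
    return abs(poly_deriv(a, c, x) - poly(a, c, poly(a, c, x)))


a = taylor_coeffs(0.5, 20)
print(2 * a[2], 6 * a[3], 24 * a[4])       # f''(c), f'''(c), f''''(c)
print(residual(a, 0.5, 0.6), residual(a, 0.5, 0.45))
```

The residual only measures how well the truncated polynomial satisfies the equation near $c$; it says nothing about behaviour close to $c+R$, where the series converges slowly.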

As an example of some solutions: By my calculations, for $c = \frac12$, we would get \begin{eqnarray}

f(1/2) &=& 0.5\\

f'(1/2) &=& 0.5\\

f''(1/2) &=& 0.25\\

f^{(3)}(1/2) &=& 0.1875\\

f^{(4)}(1/2) &=& 0.2109375\\

f^{(5)}(1/2) &=& 0.32958984...\\

f^{(6)}(1/2) &=& 0.66581726...\\

f^{(7)}(1/2) &=& 1.65652156...\\

f^{(8)}(1/2) &=& 4.90900657...\\

&\vdots&

\end{eqnarray}

and for $c= -\frac12$, we get \begin{eqnarray}

f(-1/2) &=& -0.5\\

f'(-1/2) &=& -0.5\\

f''(-1/2) &=& 0.25\\

f^{(3)}(-1/2) &=& -0.0625\\

f^{(4)}(-1/2) &=& -0.0546875\\

f^{(5)}(-1/2) &=& 0.07861328...\\

f^{(6)}(-1/2) &=& 0.00950626...\\

f^{(7)}(-1/2) &=& -0.16376846...\\

f^{(8)}(-1/2) &=& 0.17180684...\\

&\vdots&

\end{eqnarray}
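For reference, the first few entries of both tables come from the same closed forms, obtained by unrolling the recurrence of Theorem 2 by hand (with $f(c)=f'(c)=c$):

$$
f''(c) = c^2,\qquad f^{(3)}(c) = c^3+c^4,\qquad f^{(4)}(c) = c^4+4c^5+c^6+c^7
$$

Substituting $c=\frac12$ gives $0.25$, $0.1875$, $0.2109375$, and $c=-\frac12$ gives $0.25$, $-0.0625$, $-0.0546875$. These are polynomials in $c$ with non-negative coefficients, which is the reason the formal derivatives $f_c^{(n)}(c)$ increase with $c$ for $c>0$.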

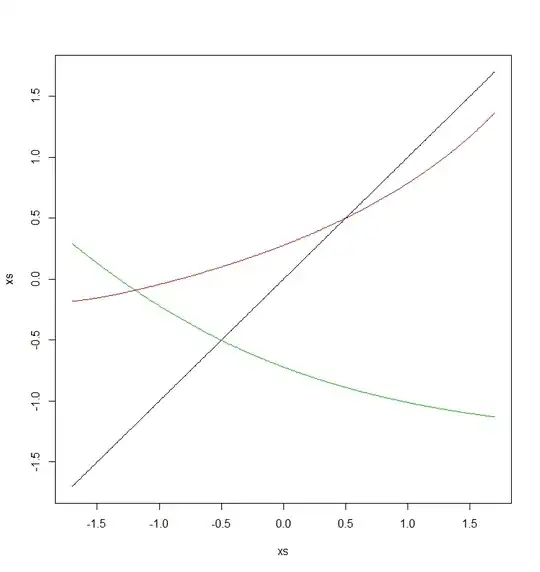

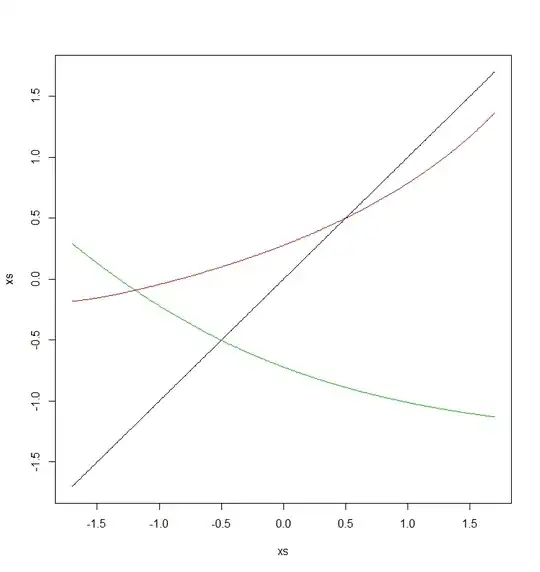

Here's a graph of $f_{1/2}(x)$ in red and $f_{-1/2}(x)$ in green, the line $y=x$ in black: