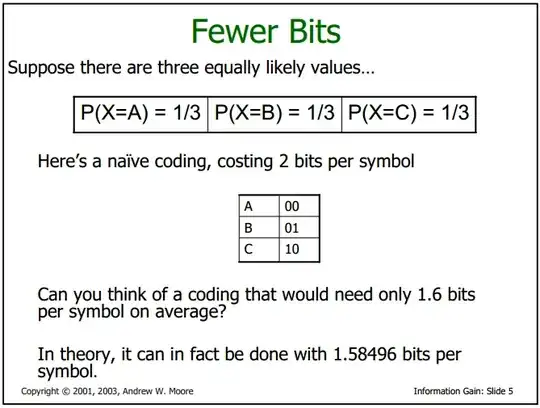

The optimal length is given by the Huffman code. In this case, that corresponds to your proposed coding, with average code length 1.6667. So, this is optimal... if you restrict to a single symbol=>single codeword coding. But you can do better if, instead of coding each symbol alone, you code the pairs, or triplets, or... That is, if you encode the "extended source".

For example, taking the extension of order $2$, you'd have $3\times 3=9$ symbols, each with probability $1/9$, and you could code them thus:

AA 000

AB 001

AC 010

BA 011

BB 100

BC 101

CA 110

CB 1110

CC 1111

This gives an average length of $(3 \times 7/9 + 4 \times 2/9)/2=1.6111\cdots$. This is better, but still does not reach the Shannon limit.

For extension three, we'd have 27 symbols and the Huffman code would be

n L

00** (4) 4

0100 (1) 4

0101* (2) 5

011** (4) 5

1**** (16) 5

with average length $[ 4 \times (4+1)/27 + 5 \times (2+4+16)/27]/3=1.605...$

You can do still better -and approximate asymptotically the theoretical value- by taking higher and higher order extensions.

Or you can use arithmetic coding, which, essentially, does precisely that.

A simple coding -suboptimal but simple, and that achieves the goal in the original exercise - would be the extension of order 5, which gives $3^5=243$ symbols. This can be trivially coded by 8 bits each ($2^8=256 < 243$), what gives $8$ bits/$5$ symbols = 1.6 bits per symbol.