You used an upper bound on the absolute value of the derivative to find an upper bound on the number of iterations required for the desired precision.

The Mean Value Theorem says that if $x_n$ is the $n$-th estimate and $r$ is the root, then $|x_{n+1}-r| = |g'(\xi_n)|\,|x_n-r|$ for some $\xi_n$ between $x_n$ and $r$. In words: the new error equals the old error times the absolute value of $g'$ at some point between $x_n$ and $r$.

It is true that the derivative has absolute value $\lt \frac{2}{(1.3)^3}$. But that only gives us an upper bound on the "next" error in terms of the previous error.
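To spell out what that bound does and does not give, write $k=\frac{2}{(1.3)^3}\approx 0.91$, and let $\varepsilon$ be the desired precision (a symbol introduced here for illustration). Assuming every iterate stays in the interval where the bound on the derivative holds, applying the one-step inequality repeatedly gives

$$|x_n-r|\le k\,|x_{n-1}-r|\le\cdots\le k^n\,|x_0-r|,$$

so $|x_n-r|\le\varepsilon$ is guaranteed once

$$n\ge\frac{\ln\left(\varepsilon/|x_0-r|\right)}{\ln k}.$$

This is a sufficient number of iterations, not a prediction of how many are actually needed.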

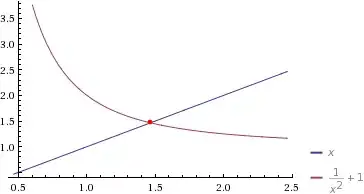

Furthermore, as $x_n$ gets close to $r$, say around $1.4$ or closer, the relevant derivative has significantly smaller absolute value than your $k$.

A further huge factor in this case is that the derivative is negative. That means that estimates alternate between being too small and being too big. When $x_n\gt r$, the derivative has significantly smaller absolute value than your estimate $k$.

Even at the beginning, the convergence is faster than the pessimistic estimate of the derivative predicts, particularly since half the time $x_n\gt r$. As the iterates close in on $r$, the gap between the actual contraction factor and $k$ widens for the steps with $x_n\lt r$ as well.

Remark: You know the root $r$ to high precision. It might be informative to modify the program so that at each stage it prints out $\frac{x_{n+1}-r}{x_n-r}$. That way, you can compare the upper bound $\frac{2}{(1.3)^3}$ on the absolute value of the ratio with the actual ratio. Even modest differences, compounded over many iterations, can result in much quicker convergence than the upper bound predicts.
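A minimal sketch of that experiment, in Python. Your $g$ is not reproduced here, so this assumes $g(x)=1+\frac{1}{x^2}$, a hypothetical choice that is consistent with the bound because $|g'(x)|=\frac{2}{x^3}$; swap in your own function. The helper names `find_root` and `error_ratios` and the starting value $1.3$ are likewise only illustrative.

```python
def g(x):
    # Hypothetical iteration function (an assumption, not taken from the question):
    # g'(x) = -2/x**3, so |g'(x)| <= 2/1.3**3 for x >= 1.3.
    return 1.0 + 1.0 / x**2


def find_root(x0, steps=200):
    # Iterate far past the point of convergence to get r to machine precision.
    x = x0
    for _ in range(steps):
        x = g(x)
    return x


def error_ratios(x0, r, n):
    # Return the first n values of (x_{k+1} - r) / (x_k - r).
    ratios = []
    x = x0
    for _ in range(n):
        x_next = g(x)
        ratios.append((x_next - r) / (x - r))
        x = x_next
    return ratios


k = 2 / 1.3**3                      # the pessimistic bound on |g'|
r = find_root(1.3)
ratios = error_ratios(1.3, r, 10)   # stop well before rounding error dominates

for n, q in enumerate(ratios):
    print(f"n={n:2d}  ratio={q:+.4f}  bound k={k:.4f}")
```

Under this assumed $g$, every printed ratio is negative (the estimates alternate sides of $r$) and its absolute value is below $k$. Only a handful of ratios are printed because once $x_n-r$ reaches rounding-error size the quotient becomes meaningless.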


– clarkson Nov 15 '13 at 18:41