This is really just another way of stating nullUser's intuitive explanation, particularly focusing on the second half (Tonelli/Fubini).

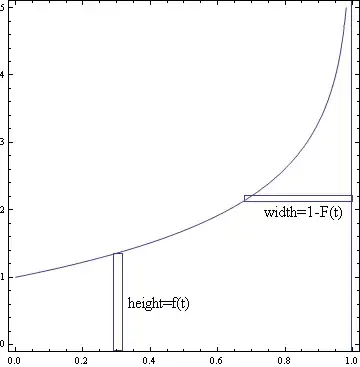

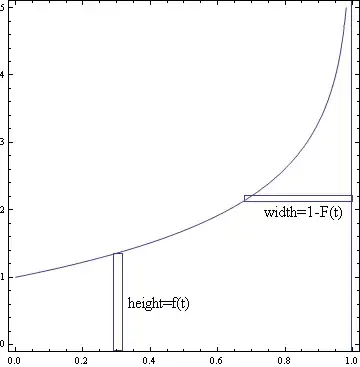

Suppose for now that our variable has the specific form $X=f(t)$, where $t$ is chosen uniformly between $0$ and $1$ and $f$ is a nonnegative, increasing function of $t$. In that case $E(X)$ has a natural interpretation: it is the average value of $f$ over $[0,1]$, which is just the area under the graph of $f$.
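Here is a quick numerical sanity check, as a minimal sketch. It assumes the hypothetical example $f(t)=t^2$, which is nonnegative and increasing on $[0,1]$. The area under $f$, approximated with a midpoint rule, should match a Monte Carlo average of $f$ at uniformly random points. Both should come out near $1/3$.

```python
import random


def f(t: float) -> float:
    """Hypothetical increasing, nonnegative example: f(t) = t**2."""
    return t * t


def area_under(g, n: int = 100_000) -> float:
    """Midpoint-rule approximation of the integral of g over [0, 1]."""
    h = 1.0 / n
    return sum(g((i + 0.5) * h) for i in range(n)) * h


def sample_mean(g, n: int = 200_000, seed: int = 0) -> float:
    """Monte Carlo estimate of E[g(U)] with U uniform on [0, 1)."""
    rng = random.Random(seed)
    return sum(g(rng.random()) for _ in range(n)) / n


if __name__ == "__main__":
    print(area_under(f))   # "vertical" area, close to 1/3
    print(sample_mean(f))  # average value of X = f(U), also close to 1/3 up to sampling noise
```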

There are two ways of thinking about this area. One is "vertically", as $\int_0^1 f(t)\,dt$. The other is "horizontally": for each height $x$, take the width of the horizontal strip at that height instead of the height of a vertical one, and integrate over $x$. The width of the strip at height $x$ is the length of the set of $t$ for which $f(t) \ge x$, i.e. the probability that $X$ is at least $x$. So the same area is also $\int_0^\infty P(X \ge x)\,dx$.
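Written out, the switch from "vertical" to "horizontal" is exactly the Tonelli/Fubini step. This assumes $f \ge 0$, which is what lets us swap the order of integration. Write each height $f(t)$ as the length of the interval $[0, f(t)]$, with $x$ denoting the height variable:

```latex
\begin{aligned}
E(X) = \int_0^1 f(t)\,dt
  &= \int_0^1 \int_0^\infty \mathbf{1}[\,x \le f(t)\,]\,dx\,dt \\
  &= \int_0^\infty \int_0^1 \mathbf{1}[\,f(t) \ge x\,]\,dt\,dx \qquad \text{(Tonelli: the integrand is nonnegative)} \\
  &= \int_0^\infty P(X \ge x)\,dx .
\end{aligned}
```

The inner integral $\int_0^1 \mathbf{1}[f(t) \ge x]\,dt$ in the second line is precisely the width of the horizontal strip at height $x$.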

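This picture was for the case where $X$ has this particular form $f(t)$. For the general case, think of $t$ as a percentile for $X$. For example, $t=0.5$ corresponds to the median of $X$, and $t=0.9$ to a value that $X$ exceeds only $10\%$ of the time. This amounts to the change of variables $X=\Phi^{-1}(t)$, where $\Phi$ is the CDF of $X$ and $\Phi^{-1}$ is its (generalized) inverse, the quantile function. With $t$ uniform on $[0,1]$, $\Phi^{-1}(t)$ has the same distribution as $X$, so the increasing function $\Phi^{-1}$ plays the role of $f$.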
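To see the general case numerically, here is a minimal sketch. It assumes, purely as an example, an exponential distribution with a hypothetical rate `LAM = 2`. For this distribution the quantile function (inverse CDF) is $-\ln(1-t)/\lambda$, and $P(X \ge x) = e^{-\lambda x}$. The sketch computes three things: the "vertical" area under the quantile function, the "horizontal" tail integral, and a sample mean obtained by plugging uniform draws into the quantile function (inverse-transform sampling). All three should be close to $1/\lambda = 0.5$.

```python
import math
import random

LAM = 2.0  # hypothetical rate; the exponential distribution is only an example


def quantile(t: float) -> float:
    """Inverse CDF of Exponential(LAM): plays the role of the increasing f(t)."""
    return -math.log(1.0 - t) / LAM


def tail(x: float) -> float:
    """P(X >= x) for Exponential(LAM)."""
    return math.exp(-LAM * x)


def midpoint(g, a: float, b: float, n: int) -> float:
    """Midpoint-rule approximation of the integral of g over [a, b]."""
    h = (b - a) / n
    return h * sum(g(a + (i + 0.5) * h) for i in range(n))


# "Vertical": area under the quantile function on [0, 1] (midpoints never hit t = 1).
vertical = midpoint(quantile, 0.0, 1.0, 100_000)
# "Horizontal": tail integral, truncated at x = 20 where exp(-40) is negligible.
horizontal = midpoint(tail, 0.0, 20.0, 200_000)
# Inverse-transform sampling: quantile(U) with U uniform has the distribution of X.
rng = random.Random(0)
sampled = sum(quantile(rng.random()) for _ in range(200_000)) / 200_000

print(vertical, horizontal, sampled)  # all three should be close to 1 / LAM = 0.5
```

The truncation point and grid sizes are arbitrary choices for the illustration. Any distribution with a known quantile function and tail probability could be swapped in.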