Here I will try to expand on a justification for the symmetry of the $y$ variables:

$y_1,y_2,\dots,y_{n+1}$ can be considered as independent variables, except for the fact that they sum to $1$. Unlike the $s$ variables, there is no ordering relation between them.

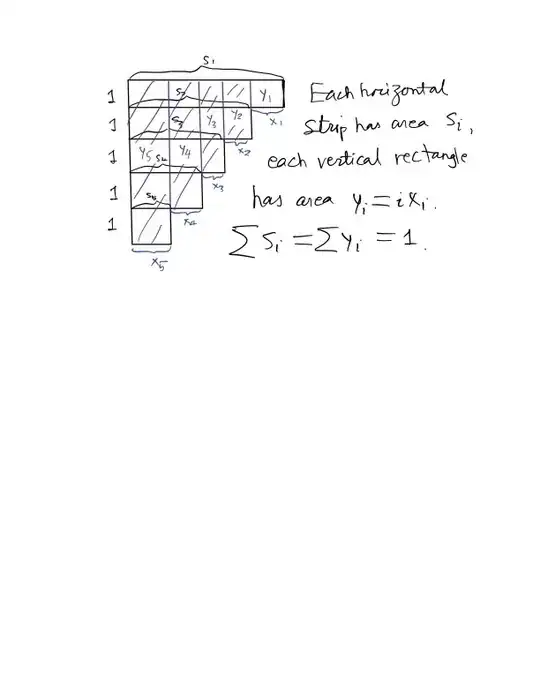

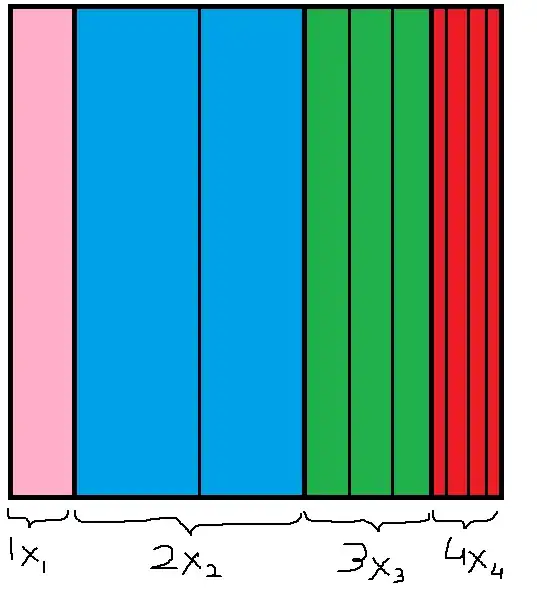

Apart from that, they can be given any set of $n+1$ values in the $[0,1]$ interval, and any such assignment corresponds uniquely and linearly to a valid assignment of the $s_1,s_2,\dots,s_{n+1}$ variables. Because the transformation between $s$ and $y$ is linear, its Jacobian is constant, so "probability volume" is preserved up to a constant factor in the change of variables: a uniform density on the $s$ variables maps to a uniform density on the $y$ variables. Given this correspondence, and the uniform distribution of the initial variables, the values assigned to the $y$ variables can be permuted, and each permutation leads to an equiprobable event in the $s$ variables. Therefore $E(y_r) = E(y_p)$ for all $r \neq p$. Since the $y$ variables sum to $1$, we get $E(y_r) = \frac{1}{n+1}$.
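
As a rough numerical sanity check of $E(y_r) = \frac{1}{n+1}$, here is a small Monte Carlo sketch. It rests on assumptions that are not spelled out above: that the $s$ variables are the pieces of a unit stick broken at $n$ uniform points and sorted in decreasing order, and that the general maps are $x_i = s_i - s_{i+1}$ (with $s_{n+2} = 0$) and $y_i = i\,x_i$, extrapolated from the $n=1$ example. The function names are my own.

```python
import random

def sample_sorted_pieces(n, rng):
    """Assumed model: break a unit stick at n uniform points and
    return the n+1 piece lengths in decreasing order (the s variables)."""
    cuts = sorted(rng.random() for _ in range(n))
    edges = [0.0] + cuts + [1.0]
    pieces = [b - a for a, b in zip(edges, edges[1:])]
    return sorted(pieces, reverse=True)

def s_to_y(s):
    """Assumed general map: x_i = s_i - s_{i+1} (last x is s_{n+1}), y_i = i * x_i."""
    padded = list(s) + [0.0]
    return [(i + 1) * (padded[i] - padded[i + 1]) for i in range(len(s))]

def mean_y(n, trials=20000, seed=0):
    """Estimate E(y_1), ..., E(y_{n+1}) by simulation."""
    rng = random.Random(seed)
    totals = [0.0] * (n + 1)
    for _ in range(trials):
        for i, y in enumerate(s_to_y(sample_sorted_pieces(n, rng))):
            totals[i] += y
    return [t / trials for t in totals]

if __name__ == "__main__":
    # Each estimate should be close to 1/(n+1) = 0.25 for n = 3.
    print(mean_y(3))
```

Under these assumptions all $n+1$ estimates should come out close to each other, which is what the symmetry argument predicts.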

To get more intuition on this, I will work out an example for $n=1$.

Consider an event which gives the values $(v_1,v_2)$ to our variables $(s_1,s_2)$.

We get values

$(v_1-v_2,v_2)$ for the variables $(x_1,x_2)$ and

$(v_1-v_2,2 v_2)$ for $(y_1,y_2)$.

Given that the initial distributions were uniform, the transformations are linear, and the $y$s have no constraints apart from being in the interval $[0,1]$ and summing to $1$, we can say that for the variables $(y_1,y_2)$ the values $(v_1-v_2,2v_2)$ are as probable as the values $(2v_2,v_1-v_2)$ (permutation is a volume-preserving transformation). This means (working backwards) that we can equiprobably assign values

$(2v_2,(v_1-v_2)/2)$ to $(x_1,x_2)$ and

$(2v_2 + (v_1-v_2)/2,(v_1-v_2)/2)$ to $(s_1,s_2)$.

Knowing that $v_1 + v_2$ is constrained to be $1$, we can rewrite that as $(\frac{3}{2}-v_1,\frac{1}{2}-v_2)$. I.e., for $(s_1,s_2)$, the event $(v_1,v_2)$ is as probable as the event $(\frac{3}{2}-v_1,\frac{1}{2}-v_2)$. Knowing that these events are equiprobable, we can compute the expected value of a variable as the average of its assigned values over the equiprobable events. I.e., $E(s_1) = (E(v_1) + E(\frac{3}{2}-v_1))/2 = \frac{3}{4}$. For $y_1$ we get $E(y_1) = ((v_1-v_2) + 2v_2)/2 = (v_1+v_2)/2 = \frac{1}{2}$.
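
The $n=1$ pairing can be checked with exact arithmetic. The sketch below uses only the maps from the worked example, $x = (s_1 - s_2, s_2)$ and $y = (x_1, 2x_2)$; the function names and the sample event $(\frac{7}{10}, \frac{3}{10})$ are illustrative choices of mine.

```python
from fractions import Fraction

def s_to_y(s1, s2):
    """Forward maps for n = 1: x = (s1 - s2, s2), then y = (x1, 2 * x2)."""
    x1, x2 = s1 - s2, s2
    return x1, 2 * x2

def y_to_s(y1, y2):
    """Inverse maps for n = 1: x = (y1, y2 / 2), then s = (x1 + x2, x2)."""
    x1, x2 = y1, y2 / 2
    return x1 + x2, x2

def paired_event(v1, v2):
    """Swap the two y values and work backwards to the s variables."""
    y1, y2 = s_to_y(v1, v2)
    return y_to_s(y2, y1)

if __name__ == "__main__":
    v1 = Fraction(7, 10)      # illustrative event, with v1 + v2 = 1
    v2 = 1 - v1
    w1, w2 = paired_event(v1, v2)
    print(w1, w2)             # the paired event for (s1, s2)
    print((v1 + w1) / 2)      # average of s1 over the two paired events
```

For this sample event the paired event is $(\frac{4}{5}, \frac{1}{5})$, which agrees with $(\frac{3}{2}-v_1, \frac{1}{2}-v_2)$, and the average of $s_1$ over the pair is $\frac{3}{4}$.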

The same trick can be applied to $n=2$ or higher:

Assign an event to $(s_1,s_2,s_3)$, compute the corresponding values of $(y_1,y_2,y_3)$, cycle their values, then work backwards to get equiprobable events for $(s_1,s_2,s_3)$ (for $n=2$, we need $2$ more events, corresponding to the cycled values of $(y_1,y_2,y_3)$). Then we may compute the expected values as the average over each such group of equiprobable events.
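
The cycling procedure can be sketched for $n=2$ as follows. This assumes the general maps $x_i = s_i - s_{i+1}$ (with $s_{n+2} = 0$) and $y_i = i\,x_i$, which I am extrapolating from the $n=1$ example; the function names and the starting event are hypothetical.

```python
from fractions import Fraction

def s_to_y(s):
    """Assumed general map: x_i = s_i - s_{i+1} (last x is s_{n+1}), y_i = i * x_i."""
    padded = list(s) + [0]
    return [(i + 1) * (padded[i] - padded[i + 1]) for i in range(len(s))]

def y_to_s(y):
    """Inverse map: x_i = y_i / i, then s_i = x_i + x_{i+1} + ... + x_{n+1}."""
    x = [Fraction(v) / (i + 1) for i, v in enumerate(y)]
    return [sum(x[i:]) for i in range(len(x))]

def cycled_events(s):
    """All cyclic shifts of the y values, mapped back to events for s."""
    y = s_to_y(s)
    return [y_to_s(y[k:] + y[:k]) for k in range(len(y))]

def group_average(s):
    """Average each s_i over the group of cycled events."""
    events = cycled_events(s)
    return [sum(col) / len(events) for col in zip(*events)]

if __name__ == "__main__":
    # Illustrative event with s1 >= s2 >= s3 and s1 + s2 + s3 = 1.
    start = [Fraction(6, 10), Fraction(3, 10), Fraction(1, 10)]
    for event in cycled_events(start):
        print(event)
    print(group_average(start))
```

With these maps the group average does not depend on the starting event: since the $y$ values sum to $1$, it works out to $(\frac{11}{18}, \frac{5}{18}, \frac{1}{9})$ for $(s_1, s_2, s_3)$, which then serves as the expected value.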