Given integers $N \geq M > 0$ and $0<p<1$, suppose I keep tossing a coin with head probability $p$ until I get $M$ heads among the most recent $N$ tosses. What is the expected number of tosses?

The game is stopped immediately if $M$ heads are reached before $N$ tosses are attempted.
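To sanity-check the formulas below, the stopping rule can also be simulated directly. Here is a minimal Monte Carlo sketch in Python; the function names and the default trial count are my own choices, and it assumes tosses before the start count as tails, which is exactly what lets the game stop before $N$ tosses.

```python
import random
from collections import deque


def simulate_tosses(m, n, p, rng):
    """Toss until at least m of the most recent n tosses are heads.

    Returns the number of tosses. Tosses before the start are treated as
    tails, so the game may stop before n tosses have been made.
    """
    window = deque(maxlen=n)  # most recent outcomes, True = head
    heads = 0                 # number of heads currently in the window
    tosses = 0
    while heads < m:
        # When the window is full, appending evicts the oldest outcome.
        if len(window) == n and window[0]:
            heads -= 1
        outcome = rng.random() < p
        window.append(outcome)
        heads += outcome
        tosses += 1
    return tosses


def estimate_expectation(m, n, p, trials=100_000, seed=0):
    """Monte Carlo estimate of E(m, n) with a reproducible seed."""
    rng = random.Random(seed)
    total = sum(simulate_tosses(m, n, p, rng) for _ in range(trials))
    return total / trials


if __name__ == "__main__":
    print(estimate_expectation(2, 3, 2 / 3))
```

With a fixed seed the estimate is reproducible; for $M=2$, $N=3$, $p=2/3$ it should land near the exact value $51/16$ computed further down.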

The question is not from a class or an exercise; it just comes up on various occasions in my everyday life and piques my curiosity. One example is the "mastery" in Minesweeper online, which is defined as the number of wins in the most recent 100 consecutive games. In a global quest, players are asked to reach a certain mastery from scratch, e.g., to win 70 out of 100 consecutive intermediate games. A skilled player with a winning probability of 0.8 is likely to finish the quest in fewer than 100 games, while a newbie with a winning probability of 0.6 is likely to need hundreds or thousands of attempts. Some players would like to estimate the expected number of games they need to play, which leads to the question above.

Intuitively, when $M\ll pN$, the expected number of games should be very close to $M/p$. As $M$ approaches or exceeds $pN$, the expected number of games grows very quickly. So far I haven't found a way to solve the question for general $M$, but it does reduce to some well-known problems for specific values of $M$.

Let $E(M, N)$ denote the expectation:

First head ($M=1$): $$ E(1,N) = \frac{1}{p}$$

First run of $N$ consecutive heads ($M=N$): $$E(N, N) = \frac{p^{-N}-1}{1-p}$$
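Both closed forms are easy to evaluate exactly with Python's `fractions` module. A small sketch (the helper names are illustrative only):

```python
from fractions import Fraction


def e_first_head(p):
    """E(1, N) = 1/p; the window length N plays no role."""
    return 1 / p


def e_all_heads(n, p):
    """E(N, N) = (p^(-N) - 1) / (1 - p): wait for N consecutive heads."""
    return (p ** -n - 1) / (1 - p)


if __name__ == "__main__":
    p = Fraction(2, 3)
    print(e_first_head(p), e_all_heads(2, p), e_all_heads(3, p))
```

As a consistency check, $N=1$ gives $E(1,1)=1/p$, matching the first formula, and $N=2$ gives $(1+p)/p^2$.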

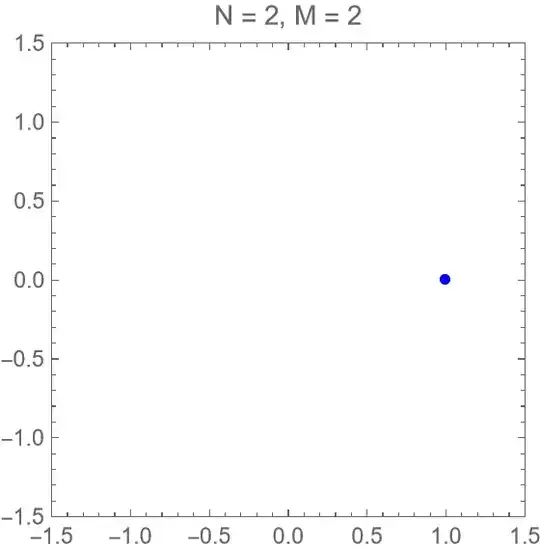

$M=2$ seems to be another case where a relation can be built without too much effort. Let $X_{M,N}$ denote the number of tosses until the game stops, and let $Y$ denote the number of tosses until the first head. Then

$$E(2,N) = \sum_{y} E(X_{2,N}|Y=y) P(Y=y)$$

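The conditional expectation can be calculated by considering the next $N-1$ tosses after the first head: either a head appears after $k$ tails ($0\le k\le N-2$) and the game stops, or all $N-1$ tosses are tails, the first head leaves the window, and the game starts over. Let $q=1-p$; then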

\begin{align} E(X_{2,N}|Y=y) &= p\sum_{k=0}^{N-2} q^k (y+k+1) + q^{N-1} [y+N-1+E(2,N)] \\ & = y + \frac{1-q^{N-1}}{p} + q^{N-1}E(2,N) \end{align}
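The simplification from the first line to the second relies on $p\sum_{k=0}^{N-2}q^k = 1-q^{N-1}$ and a finite arithmetico-geometric sum. A quick exact-arithmetic check of that step for a few values (the coefficient $q^{N-1}$ of $E(2,N)$ is the same on both lines, so it is left out; function names are hypothetical):

```python
from fractions import Fraction


def lhs_without_e(y, n, p):
    """First line minus its q^(N-1) E(2,N) term."""
    q = 1 - p
    # A head arrives after k tails (k = 0..N-2): the game stops at toss y+k+1.
    head_arrives = sum(p * q**k * (y + k + 1) for k in range(n - 1))
    # All N-1 tosses are tails: y+N-1 tosses so far, then the game restarts.
    all_tails = q ** (n - 1) * (y + n - 1)
    return head_arrives + all_tails


def rhs_without_e(y, n, p):
    """Second line minus its q^(N-1) E(2,N) term."""
    q = 1 - p
    return y + (1 - q ** (n - 1)) / p


if __name__ == "__main__":
    for p in (Fraction(1, 10), Fraction(1, 2), Fraction(2, 3)):
        for n in range(2, 8):
            for y in range(1, 5):
                assert lhs_without_e(y, n, p) == rhs_without_e(y, n, p)
    print("simplification checks out")
```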

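Taking the expectation over $Y$, with $E(Y)=1/p$, gives $E(2,N) = \frac{1}{p} + \frac{1-q^{N-1}}{p} + q^{N-1}E(2,N)$, which yields $$ E(2,N) = \frac{2-q^{N-1}}{p(1-q^{N-1})} $$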
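Evaluating the closed form exactly (the name `e_two` is hypothetical) gives a quick cross-check against the $M=N$ formula at $N=2$ and against the sympy value $E(2,3)=51/16$ for $p=2/3$ listed further down:

```python
from fractions import Fraction


def e_two(n, p):
    """E(2, N) = (2 - q^(N-1)) / (p (1 - q^(N-1))), for N >= 2."""
    q = 1 - p
    t = q ** (n - 1)  # probability that the N-1 tosses after a head are all tails
    return (2 - t) / (p * (1 - t))


if __name__ == "__main__":
    p = Fraction(2, 3)
    print([e_two(n, p) for n in range(2, 6)])
```

As $N\to\infty$, $q^{N-1}\to 0$ and $E(2,N)\to 2/p$, in line with the $M/p$ intuition for $M\ll pN$.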

But I don't think the above approach for $M=2$ can be generalized to $M\geq 3$.

$M=N-1$ is another case where the state only has $N$ possibilities instead of $2^N$. Let $Z_{r}^{N}$ denote the remaining number of tosses needed to finish the game with $M=N-1$, given that the most recent history is a tail, then $r$ consecutive heads, then a tail:

$$ T\underbrace{H...H}_{r}T | (Z_{r}^N ~ \text{tosses to finish}) $$

We have

$$ E(Z_{r}^{N}) = p^{N-r-1}(N-r-1) + q\sum_{k=0}^{N-r-2}p^k\left[k+1+E(Z_{k}^{N})\right] $$

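Note that $E(Z_{0}^{N})=E(N-1, N)$ is the quantity we are looking for, since a fresh game behaves like a history ending in two tails (tosses before the start count as tails). Unfortunately I haven't found an explicit expression, though it is just a system of $N-1$ linear equations: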

$$ (I - qA) \pmb{E} = \pmb{b} $$

where, for $i,j=0,\ldots,N-2$,

$$ A_{ij} = \left\{\begin{matrix} p^j & i+j\leq N-2 \\ 0 & i+j\gt N-2 \end{matrix} \right. $$

and $$b_i = \frac{1-p^{N-1-i}}{q} $$
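The closed form for $b_i$ comes from collecting the constant terms of the recursion for $E(Z_r^N)$, i.e. $p^{n}n + q\sum_{k=0}^{n-1}p^k(k+1)$ with $n=N-1-i$, which simplifies to $(1-p^n)/q$. A short exact check of that identity (function names are illustrative):

```python
from fractions import Fraction


def b_from_recursion(i, n, p):
    """Constant terms of the recursion for E(Z_i^N), with steps = N-1-i."""
    q = 1 - p
    steps = n - 1 - i  # heads needed after the latest tail to finish
    return p**steps * steps + q * sum(p**k * (k + 1) for k in range(steps))


def b_closed_form(i, n, p):
    """b_i = (1 - p^(N-1-i)) / q."""
    q = 1 - p
    return (1 - p ** (n - 1 - i)) / q


if __name__ == "__main__":
    p = Fraction(2, 3)
    print([b_closed_form(i, 5, p) for i in range(4)])
```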

With the help of sympy I got the following results for $p=2/3$: \begin{align} E(2,3) &= 51/16 & \approx 3.19 \\ E(3,4) &= 1551/296 & \approx 5.24\\ E(4,5) &= 156615/19936 & \approx 7.86\\ E(5,6) &= 38234649/3388448 & \approx 11.28 \\ E(6,7) &= 31121052081/1963106560 & \approx 15.85 \end{align}
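The sympy results can be reproduced without sympy by solving $(I-qA)\pmb{E}=\pmb{b}$ exactly over the rationals. A minimal sketch using plain Gauss-Jordan elimination on `Fraction`s (my own helper, not sympy's solver; names are hypothetical); it returns $E(Z_0^N)=E(N-1,N)$:

```python
from fractions import Fraction


def solve_exact(matrix, rhs):
    """Gauss-Jordan elimination over Fractions; assumes a nonsingular matrix."""
    size = len(rhs)
    aug = [[Fraction(x) for x in row] + [Fraction(rhs[r])] for r, row in enumerate(matrix)]
    for col in range(size):
        # Any nonzero pivot works, since arithmetic is exact.
        pivot = next(r for r in range(col, size) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        pivot_val = aug[col][col]
        aug[col] = [x / pivot_val for x in aug[col]]
        for r in range(size):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col]
                aug[r] = [a - factor * c for a, c in zip(aug[r], aug[col])]
    return [aug[r][size] for r in range(size)]


def e_n_minus_1(n, p):
    """E(N-1, N) = E(Z_0^N) from (I - qA) E = b with i, j = 0..N-2."""
    q = 1 - p
    size = n - 1
    matrix = [
        [(1 if i == j else 0) - (q * p**j if i + j <= n - 2 else 0) for j in range(size)]
        for i in range(size)
    ]
    rhs = [(1 - p ** (n - 1 - i)) / q for i in range(size)]
    return solve_exact(matrix, rhs)[0]


if __name__ == "__main__":
    p = Fraction(2, 3)
    for n in range(3, 8):
        print(f"E({n - 1},{n}) = {e_n_minus_1(n, p)}")
```

For $p=2/3$ it gives $51/16$ for $N=3$ and $1551/296$ for $N=4$, matching the first two entries above. Exact elimination needs $O(N^3)$ rational operations, which is fine for moderate $N$, although the numerators grow quickly.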

As pointed out by @VarunVejalla, a small reduction from $2^N$ states can be achieved by considering where the heads are in the sequence of the last $N$ flips. This can be done by generalizing the method for $M=N-1$. Let $Z_{r_1 \ldots r_{N-M}}^{N}$ denote the remaining number of tosses until the game stops, given that the most recent history consists of runs of $r_1, \ldots, r_{N-M}$ consecutive heads, each run separated from the next by a single tail, i.e.,

$$ T\underbrace{H...H}_{r_1}T\underbrace{H...H}_{r_2}T\ldots T \underbrace{H...H}_{r_{N-M}}T | (Z_{r_1\ldots r_{N-M}}^{N} ~ \text{tosses to finish}) $$ where $0\leq r_i\leq M-1$ and $\sum_{i=1}^{N-M}r_i\leq M-1$. We have

$$E(Z_{r_1\ldots r_{N-M}}^{N}) - q\sum_{k=0}^{M-1-\sum r_i} p^k E(Z_{r_2\ldots r_{N-M}k}^{N}) = \frac{1-p^{M-\sum r_i}}{q} $$
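The general system can be assembled mechanically by enumerating the gap tuples $(r_1,\ldots,r_{N-M})$. Below is a minimal exact-arithmetic sketch for $1\le M<N$ (all names are hypothetical). It assumes, as in the $M=N-1$ case, that tosses before the start count as tails, so a fresh game corresponds to the all-zero tuple:

```python
from fractions import Fraction
from itertools import product


def solve_exact(matrix, rhs):
    """Gauss-Jordan elimination over Fractions; assumes a nonsingular matrix."""
    size = len(rhs)
    aug = [[Fraction(x) for x in row] + [Fraction(rhs[r])] for r, row in enumerate(matrix)]
    for col in range(size):
        pivot = next(r for r in range(col, size) if aug[r][col] != 0)
        aug[col], aug[pivot] = aug[pivot], aug[col]
        pivot_val = aug[col][col]
        aug[col] = [x / pivot_val for x in aug[col]]
        for r in range(size):
            if r != col and aug[r][col] != 0:
                factor = aug[r][col]
                aug[r] = [a - factor * c for a, c in zip(aug[r], aug[col])]
    return [aug[r][size] for r in range(size)]


def expected_tosses(m, n, p):
    """E(M, N) for 1 <= M < N from the gap-tuple system."""
    if not 1 <= m < n:
        raise ValueError("this sketch assumes 1 <= M < N")
    q = 1 - p
    # States: (r_1, ..., r_{N-M}) with r_i >= 0 and sum <= M-1.
    states = [s for s in product(range(m), repeat=n - m) if sum(s) <= m - 1]
    index = {s: i for i, s in enumerate(states)}
    size = len(states)
    matrix = [[Fraction(0)] * size for _ in range(size)]
    rhs = [Fraction(0)] * size
    for s, i in index.items():
        matrix[i][i] += 1
        # k heads then a tail, k = 0..M-1-sum(r): drop r_1, append k.
        for k in range(m - sum(s)):
            matrix[i][index[s[1:] + (k,)]] -= q * p**k
        rhs[i] = (1 - p ** (m - sum(s))) / q
    # A fresh game (earlier tosses counted as tails) is the all-zero tuple.
    return solve_exact(matrix, rhs)[index[(0,) * (n - m)]]


if __name__ == "__main__":
    print(expected_tosses(3, 5, Fraction(2, 3)))
```

For $p=2/3$ this reproduces $E(2,3)=51/16$ and $E(3,4)=1551/296$, and for $M=2$, $N=4$ it gives $159/52$, which agrees with the closed form for $E(2,N)$.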

The above system of linear equations works for any $M<N$, but its size equals the number of non-negative integer solutions to $\sum_{i=1}^{N-M}r_i\leq M-1$, namely $\binom{N-1}{N-M}$, which roughly scales as $O(N^{\textrm{min}(M, N-M)})$. Does anyone have any thoughts on its closed-form solution (if it exists)?
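By stars and bars, the number of non-negative integer solutions of $\sum_{i=1}^{N-M} r_i\le M-1$ is $\binom{N-1}{N-M}$. A brute-force check of that count for small $N$ (the helper name is illustrative):

```python
from itertools import product
from math import comb


def count_gap_states(n, m):
    """Brute-force count of tuples (r_1..r_{N-M}) >= 0 with sum <= M-1."""
    return sum(1 for s in product(range(m), repeat=n - m) if sum(s) <= m - 1)


if __name__ == "__main__":
    for n in range(2, 10):
        for m in range(1, n):
            assert count_gap_states(n, m) == comb(n - 1, n - m)
    print("count equals C(N-1, N-M) for all N < 10")
```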