I am a little confused as to which bit you are unsure of in the comments above. I am guessing we just need to connect the dots between the specific form of each $\operatorname{ad}X(E_{i,j})$ and the matrix being triangular.

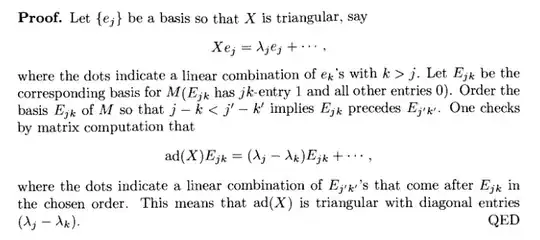

The fact that $\operatorname{ad}(X)(E_{i,j}) = (\lambda_i - \lambda_j)E_{i,j} + \sum a_{i',j'}E_{i',j'}$, where each $E_{i',j'}$ comes later than $E_{i,j}$, means that the column corresponding to $E_{i,j}$ in the matrix of $\operatorname{ad}X$ has $\lambda_i - \lambda_j$ on the diagonal (the row corresponding to $E_{i,j}$) and $a_{i',j'}$ in the row corresponding to $E_{i',j'}$. Since those $E_{i',j'}$ come after $E_{i,j}$ in the ordering, those entries are below the diagonal. So the matrix is lower triangular with entries of the form $\lambda_i - \lambda_j$ on the diagonal.

If instead it is unclear why $\operatorname{ad}(X)(E_{i,j})$ would have this form, you should do some examples. The form of $\operatorname{ad}(X)(E_{i,j})$ is quite specific, and once you see it you should be able to prove why it is the case.

Edit: sorry I mixed up my rows and columns above so I have edited it to match the following explanation (the former version made sense if we reversed the order of the basis and would give an upper triangular matrix rather than lower).

So the sticking point seems to be how to translate information written out algebraically into a matrix.

Let $A$ be a linear transformation and $x_1,\dots, x_k$ a basis. Writing $A$ as a matrix with respect to the $x_i$ is precisely the process of setting $A_{i,j}$ to make $Ax_j=\sum_i A_{i,j}x_i$ true (so $Ax_j$ as a column vector is the $j$th column of the matrix). Now apply that in our scenario. Our basis consists of the $E_{i,j}$ and our transformation is $\operatorname{ad} X$, so if we compute $\operatorname{ad} X(E_{i,j})$, the coefficients of that, written in the basis of the $E_{i,j}$, give us a column of our matrix. So if, apart from the coefficient of $E_{i,j}$ itself, the only non-zero coefficients belong to basis elements that come later than $E_{i,j}$, then the only non-zero entries in that column lie on or below the row of $E_{i,j}$, that is, on or below the diagonal.
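To make the column-by-column recipe concrete, here is a small sketch on a made-up three-dimensional example (the map and its coefficients are purely illustrative, not taken from the question). Each $Ax_j$ is written in terms of $x_j$ and later basis vectors only, and the resulting matrix comes out lower triangular.

```python
# Each basis vector x_j is sent to a combination of basis vectors,
# recorded as {row index i: coefficient A_{i,j}} (indices are 0-based here).
# The coefficients are made up for illustration.
images = [
    {0: 2, 1: 1},   # A x_1 = 2 x_1 + 1 x_2
    {1: 3, 2: 5},   # A x_2 = 3 x_2 + 5 x_3
    {2: 7},         # A x_3 = 7 x_3
]

def matrix_from_images(images):
    """Return the matrix whose j-th column holds the coefficients of A x_j."""
    k = len(images)
    matrix = [[0] * k for _ in range(k)]
    for j, coeffs in enumerate(images):
        for i, value in coeffs.items():
            matrix[i][j] = value
    return matrix

A = matrix_from_images(images)
for row in A:
    print(row)
# [2, 0, 0]
# [1, 3, 0]
# [0, 5, 7]
```

Because every $Ax_j$ only involves $x_j$ and basis vectors after it, nothing lands above the diagonal.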

Now why do we have this form of $\operatorname{ad} X(E_{i,j})$? I do seriously invite you to actually just compute some examples of the Lie bracket $[X,E_{i,j}]$ as simply the commutator of matrices $XE_{i,j} - E_{i,j}X$ where $X$ is a random lower triangular matrix (doesn't hurt to see what happens on an upper triangular matrix or even any old matrix, either).
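If you would rather let a computer do the arithmetic, here is a minimal sketch in plain Python. The helper names `matmul`, `unit` and `bracket` and the particular $4\times 4$ matrix are my own choices for illustration; swap in any lower triangular $X$ and any $E_{i,j}$ you like.

```python
def matmul(a, b):
    """Product of two square matrices given as lists of rows."""
    n = len(a)
    return [[sum(a[r][k] * b[k][c] for k in range(n)) for c in range(n)]
            for r in range(n)]

def unit(n, i, j):
    """The n x n matrix E_{i,j} (1-based indices): a single 1 in row i, column j."""
    e = [[0] * n for _ in range(n)]
    e[i - 1][j - 1] = 1
    return e

def bracket(x, y):
    """The commutator [x, y] = xy - yx."""
    xy, yx = matmul(x, y), matmul(y, x)
    n = len(x)
    return [[xy[r][c] - yx[r][c] for c in range(n)] for r in range(n)]

# An arbitrary lower triangular matrix, chosen only as an example.
X = [[1, 0, 0, 0],
     [2, 3, 0, 0],
     [4, 5, 6, 0],
     [7, 8, 9, 10]]

result = bracket(X, unit(4, 3, 2))   # [X, E_{3,2}]
for row in result:
    print(row)
# [0, 0, 0, 0]
# [0, 0, 0, 0]
# [-2, 3, 0, 0]
# [0, 9, 0, 0]
```

Here the entry in position $(3,2)$ is $X_{3,3} - X_{2,2} = 6 - 3 = 3$, the $9$ below it is $X_{4,3}$, and the $-2$ to its left is $-X_{2,1}$.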

You will find that you get something that looks like:

$$ \left[\begin{pmatrix} *& 0& 0& 0&0 &0\\ *& *& 0& 0& 0&0\\ *& *& * & 0& 0& 0\\ *& *& *& *& 0&0\\ *& *&* & *& *&0\\*& *&* & *&*&*\\ \end{pmatrix}, \begin{pmatrix} 0& 0& 0& 0&0 &0\\ 0& 0& 0& 0& 0&0\\ 0& 0& * & 0& 0& 0\\ 0& 0& 0& 0& 0&0\\ 0& 0&0 & 0& 0&0\\0& 0&0 & 0& 0&0\\ \end{pmatrix}\right] = \begin{pmatrix} 0& 0& 0& 0&0 &0\\ 0& 0& 0& 0& 0&0\\ *& *& * & 0& 0& 0\\ 0& 0& *& 0& 0&0\\ 0& 0&* & 0& 0&0\\0& 0&* & 0& 0&0\\ \end{pmatrix}$$

Specifically, $[X,E_{i,j}]$ is in the span of $E_{i,j}, E_{i+1,j}, E_{i+2,j}, \dots, E_{n,j}, E_{i,j-1}, E_{i,j-2}, \dots, E_{i,1}$, and every element in that list other than $E_{i,j}$ itself has a strictly larger index difference: $(i+1) - j > i-j$, $i - (j-1) > i-j$, and so on. You will hopefully notice even more to the pattern: the entries are pulled right from the matrix $X$, up to sign, except for the top right entry of the pattern, which is exactly $X_{i,i} - X_{j,j}$.
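In case it helps to see where the pattern comes from, here is the computation written out, using only the convention $[X,E_{i,j}] = XE_{i,j} - E_{i,j}X$ and the fact that $X$ is lower triangular (so $X_{k,i} = 0$ unless $k \ge i$). Multiplying by $E_{i,j}$ on the right moves column $i$ of $X$ into column $j$, and multiplying on the left moves row $j$ of $X$ into row $i$:

$$XE_{i,j} = \sum_{k \ge i} X_{k,i}E_{k,j}, \qquad E_{i,j}X = \sum_{l \le j} X_{j,l}E_{i,l}.$$

Subtracting and separating the $E_{i,j}$ term from the rest gives

$$[X,E_{i,j}] = (X_{i,i} - X_{j,j})E_{i,j} + \sum_{k > i} X_{k,i}E_{k,j} - \sum_{l < j} X_{j,l}E_{i,l}.$$

So the coefficient of $E_{i,j}$ is $X_{i,i} - X_{j,j}$ (the $\lambda_i - \lambda_j$ from the top of this answer), and every other term is an entry of $X$, up to sign, attached to some $E_{k,j}$ with $k > i$ or some $E_{i,l}$ with $l < j$.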

So, transitioning to our $n^2$-dimensional space, $\operatorname{ad} X(E_{i,j}) = (X_{i,i} - X_{j,j})E_{i,j} + a_{i+1,j}E_{i+1,j} + a_{i+2,j}E_{i+2,j} + \cdots$ and so on. As a column vector, this has $X_{i,i} - X_{j,j}$ as its first possibly non-zero entry, and that vector is exactly one of the columns of our matrix, so $X_{i,i} - X_{j,j}$ ends up on the diagonal.
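As a sanity check on the whole argument, here is a sketch that builds the full $n^2 \times n^2$ matrix of $\operatorname{ad} X$ for a small example. I am assuming the basis is ordered by increasing $i - j$ (ties broken by $i$); the picture above may break ties differently, which does not affect the conclusion. The helper names and the sample $X$ are again only illustrative.

```python
def matmul(a, b):
    """Product of two square matrices given as lists of rows."""
    n = len(a)
    return [[sum(a[r][k] * b[k][c] for k in range(n)) for c in range(n)]
            for r in range(n)]

def unit(n, i, j):
    """The n x n matrix E_{i,j} (1-based indices)."""
    e = [[0] * n for _ in range(n)]
    e[i - 1][j - 1] = 1
    return e

def bracket(x, y):
    """The commutator [x, y] = xy - yx."""
    xy, yx = matmul(x, y), matmul(y, x)
    n = len(x)
    return [[xy[r][c] - yx[r][c] for c in range(n)] for r in range(n)]

n = 3
X = [[1, 0, 0],
     [2, 3, 0],
     [4, 5, 6]]   # arbitrary lower triangular example

# Assumed ordering of the basis E_{i,j}: increasing i - j, ties broken by i.
basis = sorted(((i, j) for i in range(1, n + 1) for j in range(1, n + 1)),
               key=lambda p: (p[0] - p[1], p[0]))
position = {pair: idx for idx, pair in enumerate(basis)}

size = n * n
ad_matrix = [[0] * size for _ in range(size)]
for col, (i, j) in enumerate(basis):
    image = bracket(X, unit(n, i, j))        # ad X applied to E_{i,j}
    for r in range(n):
        for c in range(n):
            # The coefficient of E_{r+1,c+1} goes in that element's row.
            ad_matrix[position[(r + 1, c + 1)]][col] = image[r][c]

is_lower_triangular = all(ad_matrix[r][c] == 0
                          for r in range(size) for c in range(size) if r < c)
diagonal = [ad_matrix[k][k] for k in range(size)]
expected = [X[i - 1][i - 1] - X[j - 1][j - 1] for (i, j) in basis]

print(is_lower_triangular)     # True
print(diagonal == expected)    # True
```

The second check confirms that the diagonal entry in the column of $E_{i,j}$ is $X_{i,i} - X_{j,j}$.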